Solving AI Data Challenges:

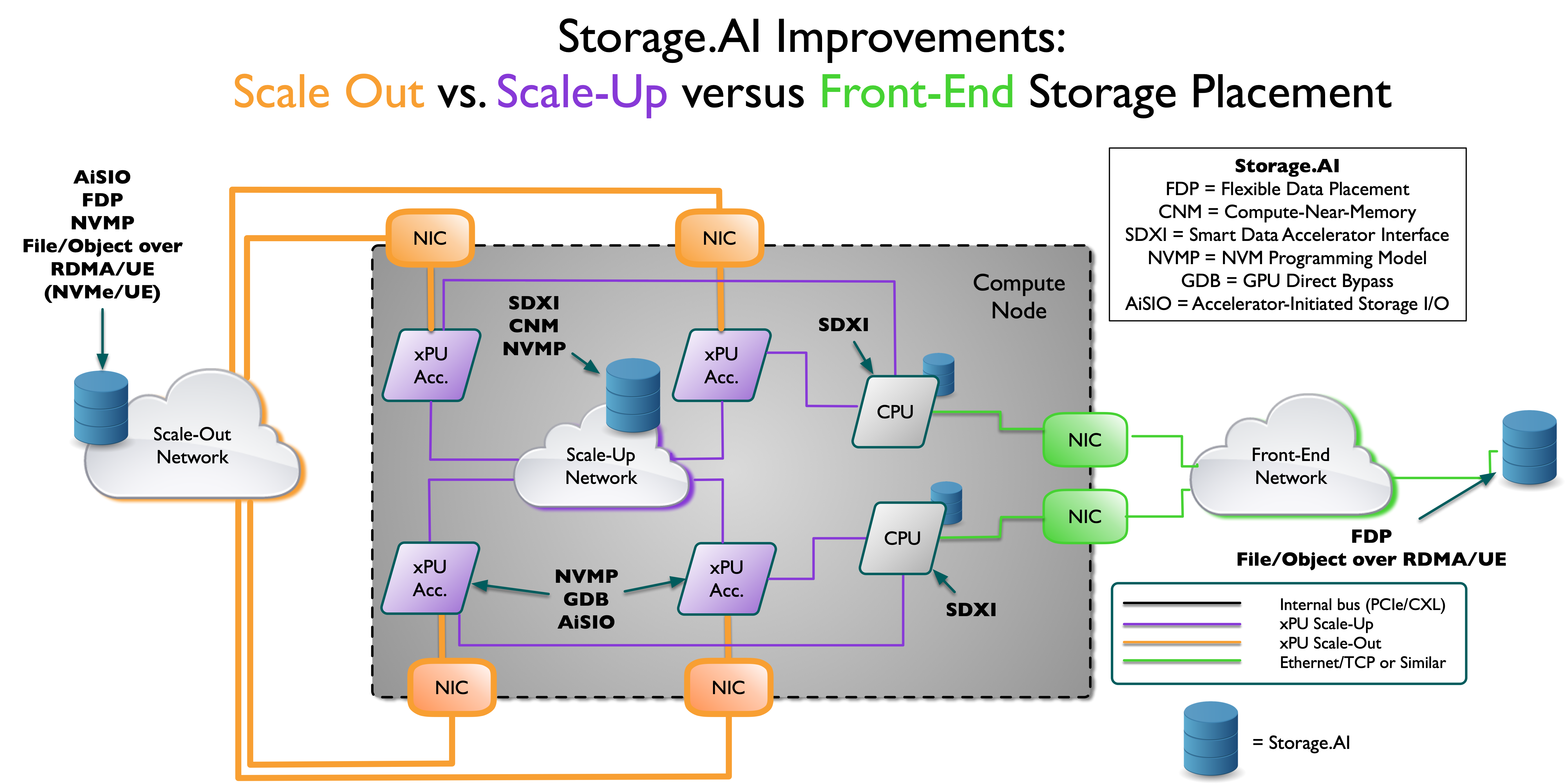

Storage.AI is an open standards project for efficient data services for AI workloads. We are addressing the most difficult data challenges these workloads face, closing existing gaps in how data is processed and accessed.

- AI has exposed urgent problems in data services, especially around how storage interacts with compute.

- Data pipelines are inefficient: round-tripping data between storage and compute wastes power and performance.

- GPUs go idle when data isn't available at the right place and time, and that's incredibly expensive.

Storage.AI will focus on vendor-neutral, interoperable solutions that take advantage of GPUs and AI acceleration to address issues such as memory tiering, data movement, storage efficiency, and system-level latency.

| Ongoing SNIA Technical Work | Enables |
|---|---|
| SDXI (Smart Data Accelerator Interface) | Vendor-neutral DMA acceleration across CPUs, GPUs, and DPUs; memory-to-memory copies and transforms |
| Computational Storage API & Architecture | Allows SSDs and other storage devices to perform computation (e.g., filtering, inferencing, format conversion) directly on the device |
| NVM Programming Model | Unified software interface for accessing NVMe, SCM, and RDMA memory/storage tiers |
| Swordfish / Redfish Extensions | Unified, Redfish-compatible storage management for hybrid and disaggregated infrastructure |
| Object Drive Workgroup | Standard interfaces for object storage devices operating in RDMA and hyperscale environments |
| Flexible Data Placement APIs | Work with computational storage and GPU-aware storage pipelines to optimize data layout and streaming throughput |

| Future Work Planned | Description |
|---|---|
| File/Object over RDMA | Enables hybrid file/object storage backends, allowing traditional applications to combine S3-like scalability with the speed and efficiency of RDMA |
| GPU Direct Access Bypass | Bypasses the CPU for data movement between GPU memory and RDMA/NVMe, enabling near-zero-latency I/O to accelerators |
| GPU-Initiated I/O | GPUs can initiate and complete their own I/O operations (e.g., checkpoint writes, data loading), reducing CPU bottlenecks. Also known as Accelerator-Initiated Storage I/O (AiSIO). |

Join Us!

Storage.AI is open to all! Join our open ecosystem of working groups and partner organizations. Contact: storage.ai@snia.org