Software Defined Storage Q&A

Oct 25, 2019

The SNIA Networking Storage Forum (NSF) recently hosted a live webcast, “What Software Defined Storage Means for Storage Networking,” where our experts, Ted Vojnovich and Fred Bower, explained what makes software defined storage (SDS) different from traditional storage. If you missed the live event, you can watch it on demand at your convenience. We received several questions during the live event, and here are our experts’ answers to all of them:

Q. Are there cases where SDS can still work with legacy storage, so that high-priority flows such as online transaction processing (OLTP) use the SAN for legacy storage, while lower-priority and backup data flows use the SDS infrastructure?

A. The simple answer is yes. As with anything else, companies use different methods and architectures to meet their compute and storage requirements, just as public cloud may be used for some non-sensitive, non-vital data while an in-house cloud or traditional storage holds sensitive data. Of course, running both adds cost, so the benefits need to be weighed against the additional expense.

Q. What is the best way to mitigate unpredictable network latency that can exceed the bounds of a storage service level agreement (SLA)?

A. There are several ways to mitigate latency. Generally speaking, increased bandwidth improves effective network speed because the “pipe” is larger. More data can travel through it at once, and each transfer spends less time on the wire. Offloads and accelerators can also reduce latency. Remote Direct Memory Access (RDMA) is one of these, and many storage companies use it to handle the increased capacity and bandwidth needed in flash storage environments. Edge computing also belongs on this list. It relocates key data processing and access points from the center of the network to the edge, where data can be gathered and delivered more efficiently.
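The following sketch makes the bandwidth point concrete with an idealized latency model. It assumes a single I/O whose time is a fixed per-I/O cost plus serialization time (bits ÷ link rate), and it ignores queuing and protocol overhead. The function name, the 5 µs fixed cost, and the I/O sizes are hypothetical illustration values. They are not measurements from the webcast.

```python
def transfer_time_us(io_bytes: int, link_gbps: float, fixed_us: float) -> float:
    """Idealized time to move one I/O across a link, in microseconds.

    fixed_us lumps together per-I/O costs that bandwidth does not change
    (propagation, switching, host software stack). Queuing, protocol
    overhead, and retransmissions are ignored.
    """
    bits = io_bytes * 8
    # 1 Gb/s carries 1,000 bits per microsecond.
    serialization_us = bits / (link_gbps * 1_000)
    return fixed_us + serialization_us


if __name__ == "__main__":
    FIXED_US = 5.0  # hypothetical per-I/O overhead
    for size in (4 * 1024, 128 * 1024):
        for gbps in (10, 100):
            t = transfer_time_us(size, gbps, FIXED_US)
            print(f"{size // 1024:>4} KiB @ {gbps:>3} Gb/s: {t:6.1f} us")
```

In this model, going from 10 to 100 Gb/s cuts a 128 KiB transfer from about 110 µs to about 15 µs. A 4 KiB transfer only drops from about 8.3 µs to 5.3 µs, because the fixed cost dominates small I/O. Offloads such as RDMA aim to shrink that fixed, per-I/O software cost.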

Q. Can you please elaborate on SDS scaling in comparison with traditional storage?

A. Most SDS solutions are designed to scale out both performance and capacity to avoid bottlenecks. Most traditional storage, by contrast, has limited scalability and scales up in capacity only. As more capacity is added to a scale-up storage system, the controller becomes saturated and performance suffers. The workaround with traditional storage is to upgrade the storage controller or purchase more arrays, which can lead to unproductive, hard-to-manage silos.
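A toy model helps illustrate the controller-saturation argument. The sketch below assumes that every scale-up shelf sits behind one controller with a fixed performance ceiling, while each scale-out node brings its own controller resources. All names and IOPS figures are hypothetical and chosen only to show the shape of the two curves.

```python
def scale_up_iops(shelves: int, iops_per_shelf: int, controller_max_iops: int) -> int:
    """Scale-up: every shelf sits behind the same controller pair, so
    aggregate performance is capped by the controller no matter how much
    capacity is added."""
    return min(shelves * iops_per_shelf, controller_max_iops)


def scale_out_iops(nodes: int, iops_per_node: int) -> int:
    """Scale-out (idealized): each node brings its own CPU, memory, and
    network along with its drives, so performance grows with capacity."""
    return nodes * iops_per_node


if __name__ == "__main__":
    # Hypothetical figures chosen only to show the shape of the curves.
    for n in (2, 4, 8, 16):
        print(n, scale_up_iops(n, 50_000, 200_000), scale_out_iops(n, 50_000))
```

Real scale-out systems lose some efficiency to cross-node traffic, so linear growth here should be read as an upper bound, not a prediction.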

Q. You didn’t talk much about distributed storage management and namespaces (e.g., NFS or AFS)?

A. Storage management consists of monitoring and maintaining storage health, platform health, and drive health. It also includes storage provisioning, such as creating LUNs and shares or binding LUNs to controllers and servers. On top of that, storage management covers storage services like disk groups, snapshots, deduplication, replication, and so on. This is true for both SDS and traditional storage (Converged Infrastructure and Hyper-Converged Infrastructure also leverage these capabilities). NFS is predominantly a non-Windows (Linux, Unix, VMware) file storage protocol. AFS is no longer popular in the data center and has been replaced as a file storage protocol by either NFS or SMB (in fact, it’s been a long time since anyone mentioned “AFS”).

Q. How does SDS affect storage networking? Are SAN vendors going to lose customers?

A. SAN vendors aren’t going anywhere, because the large existing installed base isn’t going quietly into the night. Most SDS solutions focus on Ethernet connectivity (as the diagrams show), while traditional storage is split between Fibre Channel and Ethernet. InfiniBand is more of a niche storage play for HPC and some AI and machine learning customers.

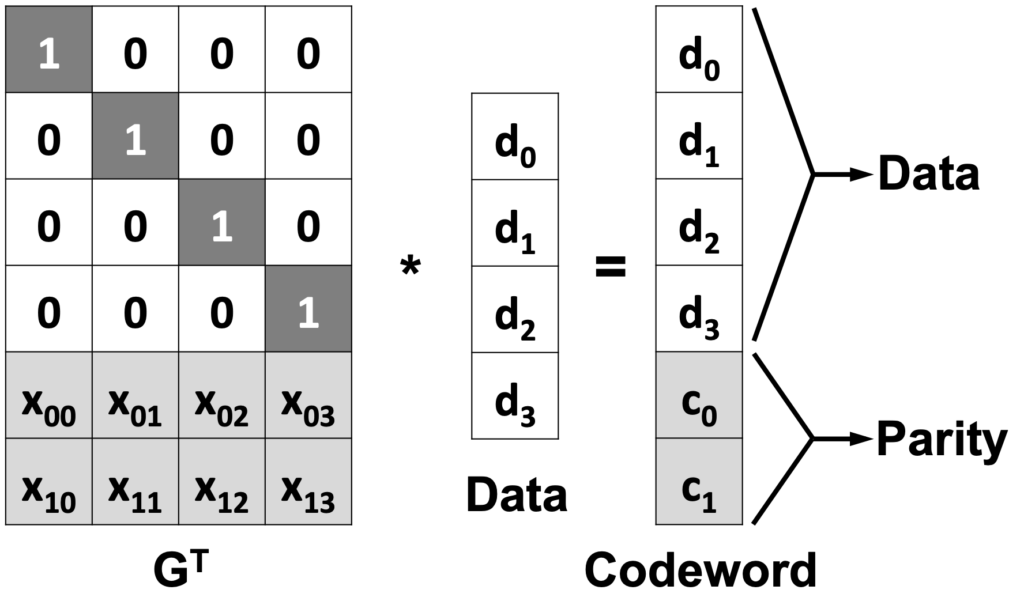

Q. Storage costs for SDS are highly dependent on scale and on whether replication or erasure coding is used. An erasure-coded multi-petabyte solution can cost significantly less than a traditional storage solution.

A. It’s a tradeoff between processing complexity and the cost of additional capacity. Erasure coding is processing-intensive but requires less storage capacity. Making copies uses less processing power but consumes more capacity, and replicating copies also uses more network bandwidth. Erasure coding tends to be used more often for storing large objects or files, and less often for latency-sensitive block storage.
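To put numbers on the capacity side of this tradeoff, here is a minimal sketch. It assumes 3-way replication and an 8+3 erasure code as example configurations; these are common choices, not figures from the webcast. It also includes single-parity XOR, the simplest erasure code, which can rebuild exactly one lost chunk. Production SDS typically uses Reed-Solomon-style codes with more parity chunks. The Galois-field arithmetic those codes need is where much of the extra processing cost comes from. All function names are illustrative.

```python
from functools import reduce


def replication_raw(usable: float, copies: int) -> float:
    """Raw capacity needed when every byte is stored `copies` times."""
    return usable * copies


def erasure_raw(usable: float, k: int, m: int) -> float:
    """Raw capacity for a k-data + m-parity erasure code (overhead (k+m)/k)."""
    return usable * (k + m) / k


def xor_parity(chunks: list[bytes]) -> bytes:
    """Single parity chunk (m = 1, as in RAID 5): byte-wise XOR of equal-length chunks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*chunks))


def rebuild(surviving: list[bytes], parity: bytes) -> bytes:
    """Recover the one missing data chunk by XOR-ing parity with the survivors."""
    return xor_parity(surviving + [parity])


if __name__ == "__main__":
    print(replication_raw(1.0, 3), "PB raw for 1 PB usable, 3 copies")
    print(erasure_raw(1.0, 8, 3), "PB raw for 1 PB usable, 8+3 erasure code")

    data = [b"blk0", b"blk1", b"blk2"]
    parity = xor_parity(data)
    assert rebuild([data[0], data[2]], parity) == data[1]  # chunk 1 "lost"
```

For 1 PB of usable data, three copies need 3 PB of raw capacity, while an 8+3 code needs 1.375 PB and still tolerates three lost chunks.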

If you have more questions on SDS, let us know in the comment box.

Learn the Latest on Persistence at the 2020 Persistent Memory Summit

Oct 21, 2019

The day before the Summit, on January 22, an expanded version of the SNIA Persistent Memory Hackathon will return, co-located again with the SNIA Annual Members Symposium. We’ll share Hackathon details in an upcoming SNIA Solid State blog.

If you have already attended a Persistent Memory Summit, you will find significant changes in the makeup of the agenda. If you have never attended, the new agenda is also an opportunity to learn more about development options and experiences for persistent memory.

The focus of the 2020 Summit will be on tool and application development for systems with persistent memory. While there is significant momentum for applications, some companies and individuals are still hesitant. The recent Persistent Programming in Real Life (PIRL) conference in San Diego focused on corporate and academic development efforts, specifically diving into the experience of developing for persistent memory. A great example of the PIRL presentations is the talk on ZUFS by Shachar Sharon of NetApp, a SNIA member company.

The Persistent Memory Summit will have several similar talks focusing on the experience of delivering applications for persistent memory. While obviously of benefit to developers and software management, the presentations will also serve the needs of hardware attendees by highlighting the process that applications will follow to utilize new hardware. Likewise, companies interested in exploring persistent memory in IT infrastructure can benefit from understanding the implementations that are available.

The Summit will also feature some of the entries to the SNIA NVDIMM Programming Challenge announced at the SNIA Storage Developer Conference. Check out the “Show Your Persistent Stuff” blog for all the details. If you haven’t registered to participate, opportunities are still available.

Registration for the Persistent Memory Summit is complimentary and includes a demonstration area, lunch, and a reception. Don’t miss this event!

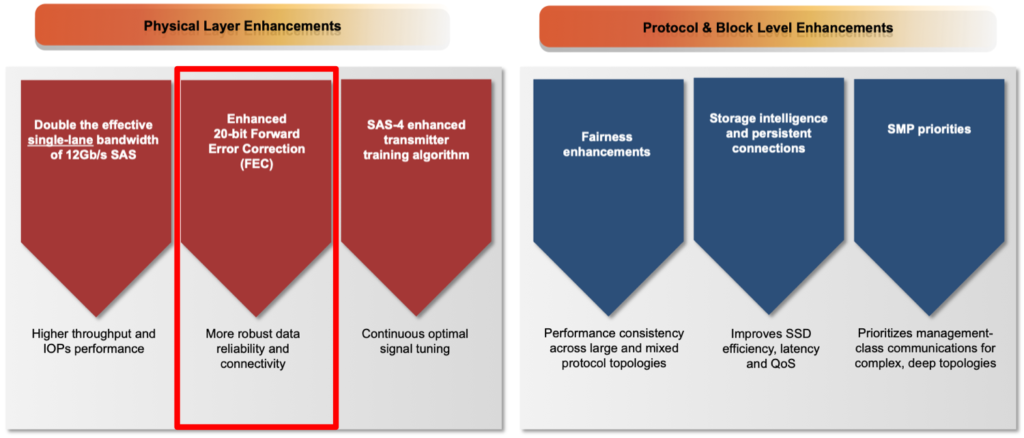

24G SAS Feature Focus: Forward Error Correction

Oct 21, 2019

Data transmission with 20-bit Forward Error Correction (FEC)

24G SAS Enhancements: 20-bit Forward Error Correction (FEC)

How Facebook & Microsoft Leverage NVMe™ Cloud Storage

Oct 9, 2019

What do hyperscalers like Facebook and Microsoft have in common? Find out in our next SNIA Networking Storage Forum (NSF) webcast, “How Facebook and Microsoft Leverage NVMe Cloud Storage,” on November 19, 2019, where you’ll hear how these cloud market leaders are using NVMe SSDs in their architectures.

Our expert presenters, Ross Stenfort, Hardware System Engineer at Facebook, and Lee Prewitt, Principal Hardware Program Manager, Azure CSI at Microsoft, will provide a close-up look at their application requirements and challenges, why they chose NVMe flash for storage, and how they are successfully deploying NVMe to fuel their businesses. You’ll learn:

- IOPS requirements for hyperscalers

- Challenges when managing at scale

- Issues around form factors

- Need to allow for “rot in place”

- Remote debugging requirements

- Security needs

- Deployment success factors

Show Your Persistent Stuff – and Win!

Oct 4, 2019

Persistent Memory software development has been a source of server development innovation for the last couple of years. The availability of the open source PMDK libraries (http://pmem.io/pmdk/) has provided a common interface for developing across PM types as well as server architectures. Innovation beyond PMDK also continues to grow, as more experimentation yields open and closed source products and tools.

However, some developers still hesitate to develop without physical systems. While systems are available from a variety of outlets, the cost of those systems and the memory can still be a barrier for small developers. Recognizing the need to grow both outlets and opportunities, the Storage Networking Industry Association (SNIA) is now announcing the availability of NVDIMM-based Persistent Memory systems for developers, along with a programming challenge.

Interested developers can get credentials to access systems in the SNIA Technology Center in Colorado Springs, CO for development and testing of innovative applications or tools that can utilize persistent memory. The challenge is open to any developer or community interested in testing code.

Participants will have the opportunity to demonstrate their output to a panel of judges. The most innovative solutions will have a showcase opportunity at upcoming SNIA events in 2020, the first being the SNIA Persistent Memory Summit. Judges will be looking for applications and tools that best highlight the value of persistent memory, including persistence in the memory tier, improved performance of applications using PM, and crash resilience and recovery of data across application or system restarts.

To register, contact SNIA at pmhackathon@snia.org. The challenge will be available starting immediately through at least the first half of 2020.

Check out the Persistent Programming in Real Life (PIRL) blog as well for information on this challenge and other upcoming activities.
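For readers new to the “crash resilience and recovery” criterion, here is a minimal sketch of the ordering discipline that persistent memory code follows. Data is made durable before a commit flag is set, so a restart never trusts a half-written value. This is not PMDK. It assumes an ordinary memory-mapped file as a stand-in for a PM region, and Python’s `mmap.flush()` (an `msync`) stands in for a PM persist call such as libpmem’s `pmem_persist`. The 16-byte layout and all function names are hypothetical. PMDK’s libpmemobj provides transactions that handle this kind of ordering for you.

```python
import mmap
import os
import struct
import tempfile
from typing import Optional

# Hypothetical 16-byte layout: 8-byte payload at offset 0, commit flag at offset 8.
PAYLOAD = struct.Struct("<Q")
COMMIT_OFFSET = 8
REGION_SIZE = 16


def open_region(path: str) -> mmap.mmap:
    """Map a small file; a stand-in for a DAX-mapped persistent memory region."""
    fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o600)
    try:
        if os.fstat(fd).st_size < REGION_SIZE:
            os.ftruncate(fd, REGION_SIZE)  # new bytes read as zero
        return mmap.mmap(fd, REGION_SIZE)
    finally:
        os.close(fd)  # the mapping stays valid after the descriptor is closed


def write_record(region: mmap.mmap, value: int) -> None:
    """Update the payload so a crash never exposes a half-written value."""
    region[COMMIT_OFFSET] = 0            # 1. retract the old commit flag
    region.flush()
    PAYLOAD.pack_into(region, 0, value)  # 2. write the new data
    region.flush()                       # 3. make the data durable first
    region[COMMIT_OFFSET] = 1            # 4. only then publish the commit flag
    region.flush()


def recover(region: mmap.mmap) -> Optional[int]:
    """After a restart, trust the payload only if its commit flag is set."""
    if region[COMMIT_OFFSET] != 1:
        return None
    return PAYLOAD.unpack_from(region, 0)[0]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "pm_region.bin")
        region = open_region(path)
        write_record(region, 42)
        region.close()               # simulate the application exiting
        region = open_region(path)   # "restart" and map the same region again
        print(recover(region))       # prints 42
        region.close()
```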

What Does Software Defined Storage Mean for Storage Networking?

Sep 27, 2019

Software defined storage (SDS) is growing in popularity in both cloud and enterprise accounts. But why does it appeal to some customers, and what is its impact on storage networking? Find out at our SNIA Networking Storage Forum webcast on October 22, 2019, “What Software Defined Storage Means for Storage Networking,” where our experts will discuss:

- What makes SDS different from traditional storage arrays?

- Does SDS have different networking requirements than traditional storage appliances?

- Does SDS really save money?

- Does SDS support block, file and object storage access?

- How data availability is managed in SDS vs. traditional storage

- What are potential issues when deploying SDS?

Register today to save your spot on Oct. 22nd. This event is live, so as always, our SNIA experts will be on-hand to answer your questions.

Answering Your Kubernetes Storage Questions

Sep 25, 2019

Our recent SNIA Cloud Storage Technologies Initiative (CSTI) Kubernetes in the Cloud series generated a lot of interest, but also more than a few questions. The interest is a great indicator of Kubernetes’ rising profile in the world of computing.

Following the third episode in the series, we’ve chosen a few questions that might help better explain (or add context to) our presentation. This post is our answer to your very important questions.

If you’re new to this webcast series about running Kubernetes in the cloud, you can catch the three parts here:

- Kubernetes in the Cloud (Part 1)

- Kubernetes in the Cloud (Part 2)

- Kubernetes in the Cloud (Part 3) - (Almost) Everything You Need to Know about Stateful Workloads

The rest of this article includes your top questions from, and our answers to, Part 3:

Q. What databases are best suited to run on Kubernetes?

A. The databases best suited to Kubernetes are the ones that have embraced the container revolution of the past five years. Typically, those databases have a native clustering capability. For example, CockroachDB has great documentation and examples showing how to set it up on Kubernetes.

Alternatively, Vitess provides clustering capabilities on top of MariaDB or MySQL to better enable them to run on Kubernetes. It has been accepted as an incubation project by the Cloud Native Computing Foundation (CNCF), so there is a lot of development expertise behind it to ensure it runs smoothly on Kubernetes.

Traditional relational databases like Postgres, or single-node databases like Neo4j, are fine for Kubernetes. The big caveat is that they are not designed to scale easily. That means the responsibility is on you to understand the limits of the database and any services it supports. Scaling these pre-cloud solutions tends to require sharding or other similarly tricky techniques.

As long as you maintain a comfortable distance from the point where you’d need to scale a pre-cloud DB, you should be fine.
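To show why sharding counts as “tricky,” here is a minimal sketch of hash-based shard routing, assuming a simple `hash(key) mod N` placement. The function names and key format are hypothetical. The sketch also measures what happens when the shard count changes. With plain modulo placement, roughly 80% of keys are expected to land on a different shard when going from 4 to 5 shards, and all of that data has to be migrated.

```python
import hashlib


def shard_for(key: str, num_shards: int) -> int:
    """Pick a shard for a key using a stable hash.

    Python's built-in hash() is salted per process, so a cryptographic
    digest is used instead to keep the mapping identical across restarts.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def fraction_moved(keys: list[str], old_shards: int, new_shards: int) -> float:
    """Share of keys that land on a different shard after resharding."""
    moved = sum(shard_for(k, old_shards) != shard_for(k, new_shards) for k in keys)
    return moved / len(keys)


if __name__ == "__main__":
    keys = [f"customer-{i}" for i in range(10_000)]
    print(f"{fraction_moved(keys, 4, 5):.0%} of keys move when going from 4 to 5 shards")
```

Schemes such as consistent hashing reduce how much data moves on a reshard, but they add their own operational complexity.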

Q. When is it appropriate to run Kubernetes containerized applications on-premises versus in the cloud?

A. In the cloud, you tend to benefit most from managed services and elasticity. All of the major cloud providers have managed database offerings that Kubernetes workloads can use, for example Amazon Aurora, Google Cloud SQL, and Microsoft Azure DB. These offerings can be appropriate if you are a smaller shop without many database architects.

The decision to run on-premises is usually dictated by external factors. Regulatory requirements like GDPR, country-specific requirements, or customer requirements may require you to run on-premises. This is often where the concept of data gravity comes into effect – it is easier to move your compute to your data than to move your data to your compute.

This is one of the reasons why Kubernetes is popular. Wherever your data lives, you’ll find you can bring your compute closer (with minimal modifications), thanks to the consistency provided by Kubernetes and containers.

Q. Why did the third presentation feature old quotes about the challenges of Kubernetes and databases?

A. Part of that is simply my (the presenter’s) personal bias, and I apologize. I was primarily interested in finding quotes from recognizable names. Kelsey Hightower and Uber are both highly visible in the container community, so I set out to find the right words from them.

That does raise a relevant question, though. Has something changed recently? Are containers and Kubernetes becoming a better fit for stateful workloads? The answer is yes and no.

Running stateful workloads on Kubernetes has remained about the same for a while now. For example, a text search for “stateful” in the 1.16 changelog returns no results. If a change had been made to StatefulSets or “stateful workloads,” we would expect to see a result. Of course, free-text search is never quite that easy. Additional searches for “persist” return only CSI- and ingress-related changes.
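The changelog check described above is easy to reproduce. This minimal sketch assumes you have saved a release changelog locally; the file name `CHANGELOG-1.16.md` and the helper names are hypothetical. It counts case-insensitive substring matches, which is why a term like “persist” also catches “PersistentVolume” and “persistence.”

```python
import re
from pathlib import Path


def count_mentions(text: str, terms: list[str]) -> dict[str, int]:
    """Case-insensitive substring counts, e.g. 'persist' also matches
    'PersistentVolume' and 'persistence'."""
    return {t: len(re.findall(re.escape(t), text, flags=re.IGNORECASE)) for t in terms}


def matching_lines(text: str, term: str) -> list[str]:
    """Return the changelog lines that mention `term`, for manual review."""
    needle = term.lower()
    return [line for line in text.splitlines() if needle in line.lower()]


if __name__ == "__main__":
    # Hypothetical local copy of a release changelog.
    path = Path("CHANGELOG-1.16.md")
    if path.exists():
        text = path.read_text(encoding="utf-8")
        print(count_mentions(text, ["stateful", "persist"]))
        for line in matching_lines(text, "persist"):
            print(line)
```

As the answer notes, a zero count is only a hint. Reviewing the matching lines by hand is what shows whether a change actually touches stateful workloads.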

The point is that stateful workloads run the same way now as they did in prior versions. Part of the reason for the lack of change is that stateful workloads are difficult to generalize. In the language of Fred Brooks, stateful workloads carry essential complexity. In other words, the difficulty of generalizing is unavoidable, and it must be addressed with a complex solution.

Solutions like operators are tackling some of this complexity, and changes to storage components indicate that progress is occurring elsewhere. A good example is the 1.14 release (March 2019), in which local persistent volumes graduated to general availability (GA). That’s a nice resolution to this blog post from 2016, which said:

“Currently, we recommend using StatefulSets with remote storage. Therefore, you must be ready to tolerate the performance implications of network attached storage. Even with storage optimized instances, you won’t likely realize the same performance as locally attached, solid state storage media.”

Local persistent volumes fix some of the performance concerns around running stateful workloads on Kubernetes. Has Kubernetes made progress? Undeniably. Are stateful workloads still difficult? Absolutely.
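For readers who have not used local persistent volumes, here is a minimal sketch of the two objects they typically involve: a StorageClass with delayed binding and a PersistentVolume pinned to one node. The manifests are built as Python dictionaries and serialized to JSON. The node name, disk path, size, and object names are hypothetical placeholders. The field names follow the Kubernetes `storage.k8s.io/v1` StorageClass and `v1` PersistentVolume APIs.

```python
import json


def local_storage_class(name: str = "local-storage") -> dict:
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        # Static local volumes have no dynamic provisioner.
        "provisioner": "kubernetes.io/no-provisioner",
        # Delay binding until a pod is scheduled, so the scheduler can pick a
        # node whose local disk satisfies the claim.
        "volumeBindingMode": "WaitForFirstConsumer",
    }


def local_pv(name: str, node: str, path: str, capacity: str,
             storage_class: str = "local-storage") -> dict:
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolume",
        "metadata": {"name": name},
        "spec": {
            "capacity": {"storage": capacity},
            "accessModes": ["ReadWriteOnce"],
            "persistentVolumeReclaimPolicy": "Retain",
            "storageClassName": storage_class,
            "local": {"path": path},
            # Required for local PVs: ties the volume to the node that owns the disk.
            "nodeAffinity": {
                "required": {
                    "nodeSelectorTerms": [{
                        "matchExpressions": [{
                            "key": "kubernetes.io/hostname",
                            "operator": "In",
                            "values": [node],
                        }]
                    }]
                }
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical node name, mount path, and size.
    for obj in (local_storage_class(),
                local_pv("db-local-pv-0", "worker-1", "/mnt/disks/ssd0", "100Gi")):
        print(json.dumps(obj, indent=2))
```

Each object can be saved to its own file and applied with `kubectl apply -f`, since the API server accepts JSON as well as YAML. The node affinity is the tradeoff for the performance gain: a pod using this volume can only run on the node that holds the disk.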

Transitioning databases and other complex, stateful work to Kubernetes still involves a steep learning curve. Running a database on your current setup requires no additional knowledge. Your current database works, and you and your team already know everything you need to keep it going.

Running stateful applications on Kubernetes requires knowledge of init containers, persistent volumes (PVs), persistent volume claims (PVCs), storage classes, service accounts, services, pods, config maps, and more. This large learning requirement has remained one of the biggest challenges, which means Kelsey’s and Uber’s cautionary notes remain relevant.

It’s a big undertaking to run stateful workloads on Kubernetes. It can be very rewarding, but it also comes with a large cost.
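To show how several of these pieces fit together, here is a minimal sketch of a headless Service plus a StatefulSet whose `volumeClaimTemplates` give every replica its own PVC. The app name, image, port, storage class, size, and mount path are all hypothetical placeholders. The field names follow the Kubernetes `v1` Service and `apps/v1` StatefulSet APIs. A real database would also need configuration, credentials, probes, and resource limits, which is the learning curve described above.

```python
import json


def headless_service(app: str, port: int) -> dict:
    """Headless Service (clusterIP: None) that gives each StatefulSet pod a
    stable DNS name."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": app},
        "spec": {
            "clusterIP": "None",
            "selector": {"app": app},
            "ports": [{"name": "db", "port": port}],
        },
    }


def stateful_set(app: str, image: str, replicas: int, port: int,
                 storage_class: str, size: str, mount_path: str) -> dict:
    labels = {"app": app}
    return {
        "apiVersion": "apps/v1",
        "kind": "StatefulSet",
        "metadata": {"name": app},
        "spec": {
            "serviceName": app,  # must name the headless Service above
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": app,
                        "image": image,
                        "ports": [{"containerPort": port}],
                        "volumeMounts": [{"name": "data", "mountPath": mount_path}],
                    }]
                },
            },
            # One PVC per replica is created from this template and is kept
            # when a pod is rescheduled or deleted.
            "volumeClaimTemplates": [{
                "metadata": {"name": "data"},
                "spec": {
                    "accessModes": ["ReadWriteOnce"],
                    "storageClassName": storage_class,
                    "resources": {"requests": {"storage": size}},
                },
            }],
        },
    }


def expected_pvc_names(sts: dict) -> list[str]:
    """PVCs created from a template are named <template>-<statefulset>-<ordinal>."""
    name = sts["metadata"]["name"]
    template = sts["spec"]["volumeClaimTemplates"][0]["metadata"]["name"]
    return [f"{template}-{name}-{i}" for i in range(sts["spec"]["replicas"])]


if __name__ == "__main__":
    # All values below are hypothetical placeholders.
    svc = headless_service("demo-db", 5432)
    sts = stateful_set("demo-db", "example.com/demo-db:1.0", 3, 5432,
                       "standard", "10Gi", "/var/lib/data")
    print(json.dumps([svc, sts], indent=2))
    print(expected_pvc_names(sts))
```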

Q. If Kubernetes introduces so much complexity, why should we use it?

A. One of the main points of Part 3 of our Kubernetes in the Cloud series is that you can pick and choose. Kubernetes is flexible, and if you find running projects outside of it easier, you still have that option. Kubernetes is designed to make it easy to mix and match with other solutions.

Aside from the steep learning curve, Kubernetes can seem to come with a number of other major challenges. These challenges are often due to other design choices, like a move toward microservices, infrastructure as code, and so on. These philosophies are shifts in perspective that change how we and our teams have to think about infrastructure.

As an example, James Lewis and Martin Fowler note how a shift towards microservices will complicate storage:

“As well as decentralizing decisions about conceptual models, microservices also decentralize data storage decisions. While monolithic applications prefer a single logical database for persistent data, enterprises often prefer a single database across a range of applications - many of these decisions driven through vendor's commercial models around licensing. Microservices prefer letting each service manage its own database, either different instances of the same database technology, or entirely different database systems - an approach called Polyglot Persistence.”

Failing to move from a single enormous database to a unique datastore per service can lead to a distributed monolith. That is an architecture that looks superficially like microservices but quietly still contains many of the problems of a monolithic architecture.

Kubernetes and containers align well with newer cloud native philosophies like microservices. It’s no surprise, then, that a project to move toward microservices will often involve Kubernetes. Much of the apparent complexity of Kubernetes actually comes from these accompanying philosophies. Because they’re often paired with Kubernetes, it can be easy to blame Kubernetes whenever we stumble over a rough area.

Kubernetes and these newer philosophies are popular for a number of reasons. They’ve been proven to work at mega-scale at companies like Google and Netflix (e.g., Google’s Borg and Netflix’s microservice re-architecture on AWS). When done right, development teams also seem more productive.

If you are working at a larger scale and struggling, this probably sounds great. On the other hand, if you have yet to feel the pain of scaling, all of this Kubernetes and microservices stuff might seem a bit silly. There are a number of good reasons to avoid Kubernetes. There are also a number of great reasons to embrace it. We should be mindful that the value of Kubernetes and the associated philosophies is very dependent on where your business is on “feeling the pain of scaling.”

Q. Where can I learn more about Kubernetes?

A. Glad you asked! We provided more than 25 links to useful and informative resources during our live webcast. You can access all of them here.

Conclusion

Thanks for sending in your questions. In addition to each of the webcasts being available on the SNIAVideo YouTube channel, you can download PDFs of the presentations and check out the previous Q&A blogs from this series to learn more.
