- What object storage is and what it does

- How to use object storage

- Essential characteristics of typical consumption

- Why object storage is important to data creators and consumers everywhere

Why Object Storage is Important

Jan 3, 2020

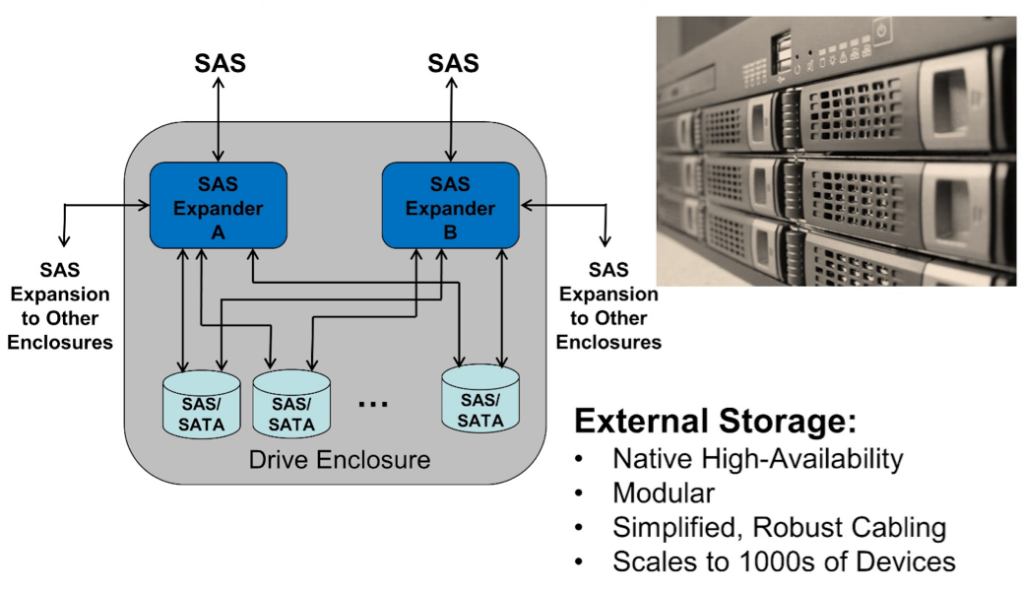

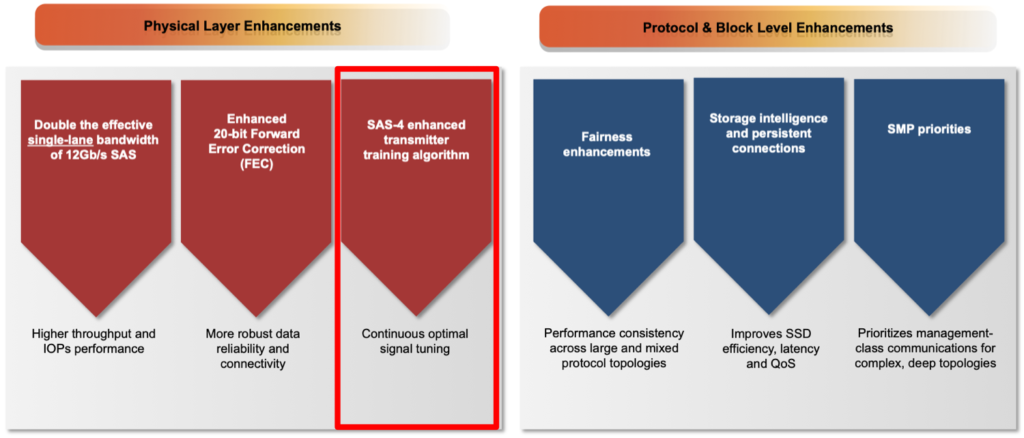

24G SAS Feature Focus: Inherent Scalability and Flexibility

Dec 12, 2019

Scalability in External Storage Architectures

- SSHD (Solid State Hybrid Drive) – hybridization of performance and capacity

- Storage Intelligence – SSD optimization, including garbage collection

- TDMR (Two-Dimensional Magnetic Recording) – faster writes

- Helium – capacity optimization

- SMR (Shingled Magnetic Recording) – capacity optimization

- MultiLink – bandwidth

- Multiple Actuator – IOPS per TB

- MAMR (Microwave-Assisted Magnetic Recording) – capacity optimization

- HAMR (Heat-Assisted Magnetic Recording) – capacity optimization

- Hybrid SMR – Configurable SMR vs. standard capacity; helps with SKU management

SAS – Preserving the Past, Creating the Future

Hyperscalers Take on NVMe™ Cloud Storage Questions

Dec 2, 2019

Our recent webcast on how hyperscalers Facebook and Microsoft are working together to merge their SSD requirements generated a lot of interesting questions. If you missed "How Facebook & Microsoft Leverage NVMe Cloud Storage," you can watch it on-demand. As promised at our live event, here are answers to the questions we received.

Q. How do Facebook and Microsoft see Zoned Namespaces being used?

A. Zoned Namespaces (ZNS) are how we will consume QLC NAND broadly. The ability to write to the NAND sequentially, in large increments that lay out nicely on the media, results in very little write amplification in the device.
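To make the sequential-write idea concrete, here is a minimal Python sketch of a single zone. It is a toy model under stated assumptions, not the NVMe ZNS command set: the `Zone` class, its `write`/`reset` methods, and the list-based storage are all hypothetical. It shows the two rules that keep device-side write amplification low: writes must land at the zone's write pointer, and space is reclaimed only by resetting the whole zone, so the device never has to relocate valid data.

```python
class ZoneError(Exception):
    """Raised when a write breaks the zone's sequential-write rule."""


class Zone:
    """Toy model of one zone in a zoned namespace (hypothetical, simplified)."""

    def __init__(self, start_lba: int, capacity: int) -> None:
        self.start_lba = start_lba
        self.capacity = capacity          # zone size in logical blocks
        self.write_pointer = start_lba    # next LBA that may be written
        self.blocks: list = []            # data in the order it was written

    def write(self, lba: int, data: list) -> None:
        # Writes must start exactly at the write pointer: no in-place updates.
        if lba != self.write_pointer:
            raise ZoneError(f"write at {lba}, expected {self.write_pointer}")
        if self.write_pointer + len(data) > self.start_lba + self.capacity:
            raise ZoneError("write exceeds zone capacity")
        self.blocks.extend(data)
        self.write_pointer += len(data)

    def reset(self) -> None:
        # Space is reclaimed a whole zone at a time, so the device never
        # copies valid data around (the main source of write amplification).
        self.write_pointer = self.start_lba
        self.blocks = []


zone = Zone(start_lba=0, capacity=8)
zone.write(0, ["a", "b", "c"])
zone.write(3, ["d"])
try:
    zone.write(1, ["x"])  # attempted overwrite of LBA 1
except ZoneError as err:
    print("rejected:", err)  # rejected: write at 1, expected 4
```

In this model the host, not the device, decides when a zone's data is stale and resets it, which is the shift in responsibility that ZNS implies.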

Q. How high a priority is firmware malware? Are there automated & remote management methods for detection and fixing at scale?

A. Security in the data center is one of the highest priorities. There are tools to monitor and manage the fleet including firmware checking and updating.

Q. If I understood correctly, the need for NVMe stemmed from the need to communicate at faster speeds with different components in the network. At what speed will NVMe stop benefiting from faster links because of the latencies in individual components? Which component is the most gating or concerning at this point?

A. In today's SSDs, NAND latency dominates. This can be mitigated by adding backend channels to the controller and by optimizing data placement across the media. Some applications connect directly to the CPU; their performance scales very well with PCIe lane speeds, and they do not have to deal with network latencies.
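A back-of-the-envelope calculation illustrates why NAND latency, rather than the link, dominates. The sketch below assumes a 60 µs NAND read (an illustrative figure, not one from the webcast) and uses nominal PCIe signaling rates with 128b/130b encoding, ignoring protocol overhead. The constants and function names are hypothetical.

```python
# Approximate usable bytes/second per PCIe lane after 128b/130b encoding
# (nominal GT/s * 128/130 / 8 bits per byte; TLP/DLLP overhead ignored).
PCIE_LANE_BYTES_PER_S = {
    "gen3": 8e9 * 128 / 130 / 8,
    "gen4": 16e9 * 128 / 130 / 8,
    "gen5": 32e9 * 128 / 130 / 8,
}

NAND_READ_US = 60.0  # assumed NAND read latency in microseconds (illustrative)


def link_transfer_us(nbytes: int, gen: str, lanes: int = 4) -> float:
    """Microseconds needed to move nbytes across the link."""
    return nbytes / (PCIE_LANE_BYTES_PER_S[gen] * lanes) * 1e6


def nand_share(nbytes: int, gen: str, lanes: int = 4) -> float:
    """Fraction of (NAND read + link transfer) time spent on the NAND read."""
    link = link_transfer_us(nbytes, gen, lanes)
    return NAND_READ_US / (NAND_READ_US + link)


for gen in ("gen3", "gen4", "gen5"):
    t = link_transfer_us(4096, gen)
    print(f"{gen} x4, 4 KiB: link {t:.2f} us, NAND share {nand_share(4096, gen):.1%}")
```

Each PCIe generation roughly halves the link time, which helps directly attached applications, but under these assumptions the NAND read remains the bulk of a single 4 KiB access.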

Q. Where does Zipline fit? Does Microsoft expect Azure to default to Zipline at both ends of the Azure network?

A. Microsoft has donated the RTL for the Zipline compression ASIC to Open Compute so that multiple endpoints can take advantage of "bump in the wire" inline compression.
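"Bump in the wire" means compression is applied transparently between endpoints, so applications send and receive ordinary bytes. The sketch below illustrates only that pattern: it uses Python's standard `zlib` module as a stand-in codec and is not the Zipline algorithm or its hardware interface. The names `egress` and `ingress` are hypothetical.

```python
import zlib


def egress(payload: bytes, level: int = 6) -> bytes:
    """Sender-side bump: compress the payload on its way onto the wire."""
    return zlib.compress(payload, level)


def ingress(frame: bytes) -> bytes:
    """Receiver-side bump: decompress so the application sees the original bytes."""
    return zlib.decompress(frame)


# Repetitive, log-like data compresses well; the endpoints never see the difference.
payload = b"timestamp=1575244800 level=INFO msg=request served\n" * 200
frame = egress(payload)
assert ingress(frame) == payload
print(f"{len(payload)} bytes -> {len(frame)} bytes on the wire")
```

Because both ends must agree on the codec, publishing the design openly (as with the RTL donation) is what lets many different endpoints participate.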

Q. What other protocols exist that are competing with NVMe? What are the pros and cons for these to be successful?

A. SATA and SAS are the legacy protocols that NVMe was designed to replace. These protocols still have their place in HDD deployments.

Q. Where do you see U.2 form factor for NVMe?

A. Many enterprise solutions use U.2 in their 2U offerings. Hyperscale servers are mostly focused on 1U form factors, where the compact heights of E1.S and E1.L allow for vertical placement at the front of the server.

Q. Is the E1.L form factor (32 drives) too big a failure domain for a single node acting as a storage target?

A. E1.L allows for very high density storage. The storage application must take into account the possibility of device failure via redundancy (mirroring, erasure coding, etc.) and rapid rebuild. In the future, the ability for the SSD to slowly lose capacity over time will be required.
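As a minimal illustration of redundancy via erasure coding, the sketch below uses single XOR parity (the scheme behind RAID 5): one parity block per stripe lets any one lost block be rebuilt from the survivors. It is a simplified stand-in; systems at E1.L densities typically use codes that tolerate multiple failures, such as Reed-Solomon. All function names are hypothetical.

```python
from functools import reduce
from typing import List


def xor_blocks(blocks: List[bytes]) -> bytes:
    """Byte-wise XOR across equal-length blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def encode(data_blocks: List[bytes]) -> List[bytes]:
    """Return the stripe: the data blocks followed by one parity block."""
    return data_blocks + [xor_blocks(data_blocks)]


def rebuild(stripe: List[bytes], lost: int) -> bytes:
    """Recover the block at index `lost` by XOR-ing every surviving block."""
    survivors = [block for i, block in enumerate(stripe) if i != lost]
    return xor_blocks(survivors)


# Three data "drives" plus one parity "drive"; drive 1 fails.
stripe = encode([b"ABCD", b"EFGH", b"IJKL"])
print(rebuild(stripe, lost=1))  # b'EFGH'
```

Rebuild time grows with the amount of data that must be read from survivors, which is why dense drives put a premium on rapid rebuild.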

Q. What have been the biggest pain points in using NVMe SSDs since their inception and adoption, especially since Microsoft and Facebook started using them?

A. As discussed in the live Q&A, in the early days of NVMe the lack of standard drivers for both Windows and Linux hampered adoption. This has since been resolved with standard in-box driver offerings.

Q. Have Facebook or Microsoft considered allowing drives to lose data if they lose power on an edge server? If the server is rebuilt after a power loss anyway, this could reduce SSD costs.

A. There are certainly interesting use cases where Power Loss Protection is not needed.

Q. Do Zoned Namespaces make the Denali spec obsolete, or has Microsoft dropped it? How does ZNS impact or compete with the open-channel initiatives by Facebook?

A. Zoned Namespaces incorporate probably 75% of the Denali functionality in a standardized NVMe way.

Q. How stable are NVMe PCIe hot-plug devices (unmanaged hot plug)?

A. Quite stable.

Q. How do you see Ethernet SSDs impacting cloud storage adoption?

A. Not clear yet if Ethernet is the right connection mechanism for storage disaggregation. CXL is becoming interesting.

Q. Thoughts on E3? What problems are being solved with E3?

A. E3 is meant more for 2U servers.

Q. ZNS has a lot of QoS implications as we load up so many dies on the E1.L form factor. Given this challenge, how does ZNS address the performance requirements of regular cloud workloads?

A. With QLC, end-to-end systems need to be designed to meet the application's requirements. This is not limited to the ZNS device itself; the design must take the entire system into account.

If you're looking for more resources on any of the topics addressed in this blog, check out the SNIA Educational Library where you'll find over 2,000 vendor-neutral presentations, white papers, videos, technical specifications, webcasts and more.

SPDK in the NVMe-oF™ Landscape

Nov 25, 2019

The Storage Performance Development Kit (SPDK) includes software drivers and libraries for building NVMe over Fabrics (NVMe-oF) host and target solutions. On January 9, 2020, the SNIA Networking Storage Forum will kick off its 2020 webcast program by diving into this topic with a live webcast, “Where Does SPDK Fit in the NVMe-oF Landscape.”

In this presentation, SNIA members and technical leaders from SPDK, Jim Harris and Ben Walker, will provide an overview of the SPDK project, NVMe-oF use cases that are best suited for SPDK, and insights into how SPDK achieves its storage networking performance and efficiency, discussing:

- An overview of the SPDK project

- Key NVMe-oF use cases for SPDK

- Examples of NVMe-oF use cases not suited for SPDK

- NVMe-oF target architecture and design

- NVMe-oF host architecture and design

- Performance data

SAS Lives On and Here’s Why

Nov 22, 2019

Because data creates so much economic value, IT organizations are under pressure to support peak workloads, storage tiering, data mining, and complex algorithms in on-premises or hybrid cloud environments.

Today, industry talk is mostly about NVMe™ SSDs meeting these stringent workload demands; however, not all drives and workloads are created equal. Over the decades, enterprise OEMs, channel partners, and ecosystem players have continued to support and use Serial-Attached SCSI (SAS) to address performance, reliability, availability, and data service challenges for traditional enterprise server and storage workloads.

SAS — A reliable, proven interface supporting high-availability storage environments

First introduced in 2004, SAS has evolved to deliver proven enterprise features such as high reliability, fault tolerance (dual-port), speed, and efficiency. As a result, it has become a popular choice for traditional, mission-critical enterprise applications such as OLTP and OLAP, as well as hyperconverged infrastructure (HCI) and software-defined storage (SDS) workloads. And it still has traction: leading industry analyst firm IDC* projects that SAS will drive SSD market demand at over 24% CAGR in petabyte (PB) growth through 2022, contributing to a combined 52% CAGR for the data center SSD market as a whole.

“SAS continues to be a valued and trusted interface standard for enterprise storage customers worldwide,” said Jeff Janukowicz, vice president, IDC. “With a robust feature set, backward compatibility, and an evolving roadmap, SAS will remain a vital storage interconnect for demanding mission-critical data center workloads today and into the future.”
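To put the IDC growth rates in perspective, a compound annual growth rate r sustained for n years multiplies the starting volume by (1 + r)^n. The sketch below assumes four compounding years (2018 to 2022); the text does not state IDC's base year, so the multiples are illustrative, and because the SAS figure is "over 24%", its result is a lower bound. The function name is hypothetical.

```python
def growth_multiple(cagr: float, years: int) -> float:
    """Total growth factor implied by compounding `cagr` for `years` years."""
    return (1 + cagr) ** years


YEARS = 4  # assumed 2018 -> 2022; IDC's base year is not given in the text

for label, rate in (("SAS SSD petabytes", 0.24), ("Data center SSD petabytes", 0.52)):
    print(f"{label}: about {growth_multiple(rate, YEARS):.2f}x over {YEARS} years")
```

Under that assumption, SAS SSD petabytes would grow at least about 2.4x and total data center SSD petabytes about 5.3x.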

Capitalizing on its decades of innovation and SAS expertise, Western Digital is announcing the Ultrastar® DC SS540 SAS SSDs, its 6th generation of SAS SSDs, delivering up to 470K/240K random read/write IOPS. The DC SS540 offers reliability of 2.5 million hours mean time between failures (MTBF) with 96-layer 3D NAND from Western Digital’s joint venture with Intel. The new SAS SSD family is well suited to all-flash arrays (AFAs), caching tiers, HPC, and SDS environments.

Ultrastar DC SS540 leverages existing SAS platform architecture, reliability, performance leadership, and various security and encryption options, which will lead to faster qualifications and time-to-market for enterprise and private cloud customers. The Ultrastar DC SS540 offers performance, enterprise-grade reliability, dual/single port, and multiple power options. It comes in capacities up to 15.36TB with soft SKU options to cover mainstream endurance choices and also reduce SKU management efforts for OEMs and channel customers. The Ultrastar DC SS540 is currently sampling and in qualification with select customers, with mass production scheduled for CYQ1 2020.
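An MTBF rating is easier to reason about as an annualized failure rate (AFR). Assuming a constant failure rate (an exponential model, which simplifies real drive behavior), AFR = 1 − exp(−8760 / MTBF). The sketch applies this to the 2.5 million hour rating above; the 1,000-drive fleet is hypothetical.

```python
import math

HOURS_PER_YEAR = 24 * 365  # 8760, ignoring leap years


def annualized_failure_rate(mtbf_hours: float) -> float:
    """AFR under a constant-failure-rate (exponential) assumption."""
    return 1 - math.exp(-HOURS_PER_YEAR / mtbf_hours)


afr = annualized_failure_rate(2.5e6)
fleet = 1000  # hypothetical number of drives
print(f"AFR = {afr:.3%}, about {afr * fleet:.1f} failures/year across {fleet} drives")
```

Under these assumptions the rating works out to roughly a 0.35% AFR, or about 3.5 expected drive failures per year in a 1,000-drive fleet.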

Forward-Looking Statements

Certain blog and other posts on this website may contain forward-looking statements, including statements relating to expectations for our product portfolio, the market for our products, product development efforts, and the capacities, capabilities and applications of our products. These forward-looking statements are subject to risks and uncertainties that could cause actual results to differ materially from those expressed in the forward-looking statements, including development challenges or delays, supply chain and logistics issues, changes in markets, demand, global economic conditions and other risks and uncertainties listed in Western Digital Corporation’s most recent quarterly and annual reports filed with the Securities and Exchange Commission, to which your attention is directed. Readers are cautioned not to place undue reliance on these forward-looking statements and we undertake no obligation to update these forward-looking statements to reflect subsequent events or circumstances.

Source: *IDC, Worldwide Solid State Drive Forecast, 2019–2023, Doc #US43828819, May 2019

Judging Has Begun – Submit Your Entry for the NVDIMM Programming Challenge!

Nov 19, 2019

We’re 11 months into the Persistent Memory Hackathon program, and over 150 software developers have taken the tutorial and tried their hand at programming persistent memory systems. AgigA Tech, Intel, SMART Modular, and Supermicro, members of the SNIA Persistent Memory and NVDIMM SIG, have now placed persistent memory systems with NVDIMM-Ns into the SNIA Technology Center as the backbone of the first SNIA NVDIMM Programming Challenge.

Interested in participating? Send an email to PMhackathon@snia.org to get your credentials. And do so quickly: the first round of review for the SNIA NVDIMM Programming Challenge is now open. Entrants who have progressed to the point where they would like a review are welcome to contact SNIA at PMhackathon@snia.org to request a time slot. SNIA will be opening review times in December and January as well. Submissions that meet a significant number of the judging criteria described below, as determined by the panel, will be eligible for a demonstration slot in front of the 400+ attendees at the January 23, 2020 Persistent Memory Summit in Santa Clara, CA.

Your program or results should lend themselves to a visual demonstration via remote access to a PM-enabled server. Submissions will be judged by a panel of SNIA experts. Reviews will be scheduled at the convenience of the submitter and judges and conducted via conference call.

NVDIMM Programming Challenge Judging Criteria include:

Use of multiple aspects of NVDIMM/PM capabilities, for example:

- Use of larger DRAM/NVDIMM memory sizes

- Use of the DRAM speed of NVDIMM PMEM for performance

- Speed-up of application shutdown or restart using PM where appropriate

- Recovery from crash/failure

- Storage of data across application or system restarts

Demonstrates other innovative aspects for a program or tool, for example:

- Uses persistence to enable new features

- Appeals across multiple aspects of a system, beyond persistence

Advances the cause of PM in some obvious way:

- Encourages the update of systems to broadly support PM

- Makes PM an incremental need in IT deployments

Program or results apply to all types of NVDIMM/PM systems, though exact results may vary across memory types.
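Several of the criteria above (recovery from crash/failure, storage of data across restarts) come down to ordering writes so that a crash never exposes half-written data. The sketch below shows one common pattern under assumptions: it memory-maps an ordinary temporary file with Python's `mmap` and uses `flush()` (msync) as the persistence barrier. On real NVDIMM hardware you would instead map a file on a DAX-mounted filesystem and use a library such as PMDK's libpmem (for example, `pmem_persist`). The record layout and function names are hypothetical, and the single-slot design drops the old record if a crash hits mid-update; a real program would use two slots or a transaction log.

```python
import mmap
import os
import struct
import tempfile
from typing import Optional

# Hypothetical record layout: 8-byte payload length, 1-byte valid flag, payload.
HEADER = struct.Struct("<QB")
REGION_SIZE = 4096


def open_region(path: str) -> mmap.mmap:
    """Map a fixed-size region; on a DAX filesystem this would be PM itself."""
    fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o600)
    try:
        if os.fstat(fd).st_size < REGION_SIZE:
            os.ftruncate(fd, REGION_SIZE)
        return mmap.mmap(fd, REGION_SIZE)
    finally:
        os.close(fd)  # the mapping stays valid after the descriptor is closed


def commit(region: mmap.mmap, payload: bytes) -> None:
    """Publish a payload so a crash at any point leaves it absent, never torn."""
    if HEADER.size + len(payload) > REGION_SIZE:
        raise ValueError("payload too large for region")
    HEADER.pack_into(region, 0, 0, 0)             # 1. invalidate the old record
    region.flush()
    region[HEADER.size:HEADER.size + len(payload)] = payload
    HEADER.pack_into(region, 0, len(payload), 0)  # 2. write data and length
    region.flush()
    HEADER.pack_into(region, 0, len(payload), 1)  # 3. only now mark it valid
    region.flush()


def recover(region: mmap.mmap) -> Optional[bytes]:
    """On restart, return the last committed payload, or None if there is none."""
    length, valid = HEADER.unpack_from(region, 0)
    if valid != 1:
        return None
    return bytes(region[HEADER.size:HEADER.size + length])


path = os.path.join(tempfile.mkdtemp(), "pm-region.bin")
region = open_region(path)
commit(region, b"hello, persistent memory")
region.close()

region = open_region(path)  # simulate an application restart
print(recover(region))      # b'hello, persistent memory'
region.close()
```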

Questions? Contact Jim Fister, SNIA Hackathon Program Director, at pmhackathon@snia.org, and happy coding!

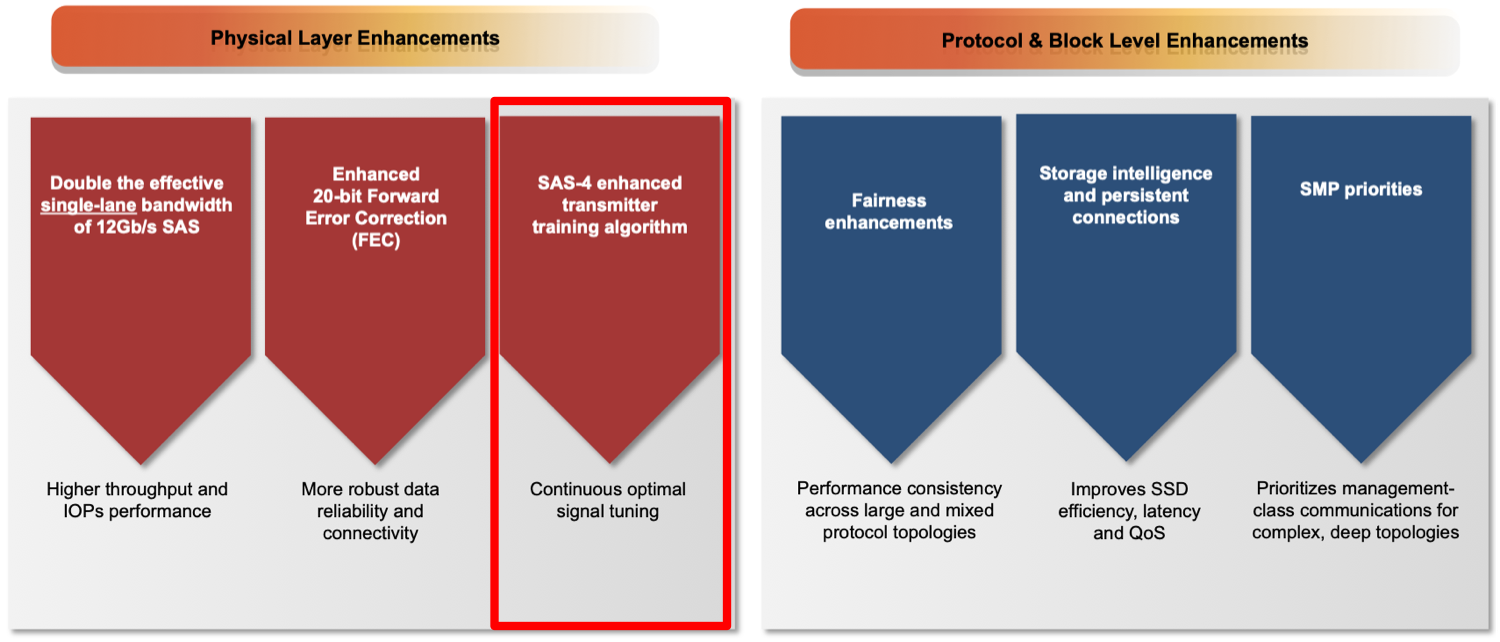

24G SAS Feature Focus: Active PHY Transmitter Adjustment

Nov 12, 2019

24G SAS Enhancements: Active PHY Transmitter Adjustment (APTA)

A Q&A to Better Understand Storage Security

Nov 1, 2019

Truly understanding storage security issues is no small task, but the SNIA Networking Storage Forum (NSF) is taking that task on in our Storage Networking Security Webcast Series. Earlier this month, we hosted the first in this series, “Understanding Storage Security and Threats” where my SNIA colleagues and I examined the big picture of storage security, relevant terminology and key concepts. If you missed the live event, you can watch it on-demand.

Our audience asked some great questions during the live event. Here are answers to them all.

Q. If I just deploy self-encrypting drives, doesn’t that take care of all my security concerns?

A. No, it does not. Self-encrypting drives can protect data if the drive is misplaced or stolen, but they don’t protect it if the operating system or application that accesses data on those drives is compromised.

Q. What does “zero trust” mean?

A. “Zero Trust” is a security model based on the principle that organizations should not automatically trust anything inside their network (typically inside the firewalls). In fact, they should not automatically trust any group of users, applications, or servers. Instead, all access should be authenticated and verified. In practice, this typically means granting the least privilege and access needed, based on who is asking for access, the context of the request, and the associated risk.
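The sketch below illustrates the zero-trust idea as a per-request decision: every request must carry a verified identity, pass context and risk checks, and match an explicit allow rule, and anything not listed is denied. The policy table, the `Request` fields, and the 0.5 risk threshold are hypothetical. Network location is deliberately not an input.

```python
from dataclasses import dataclass

# Hypothetical allow-list of (role, action, resource). Anything absent is denied.
POLICY = {
    ("backup-operator", "read", "volume/finance"),
    ("dba", "read", "volume/finance"),
    ("dba", "write", "volume/finance"),
}


@dataclass(frozen=True)
class Request:
    user: str
    role: str
    authenticated: bool     # identity verified for this request (token, MFA, ...)
    device_compliant: bool  # context signal, e.g. a managed, patched endpoint
    risk_score: float       # 0.0 (low) to 1.0 (high) from some risk engine
    action: str
    resource: str


def authorize(req: Request, max_risk: float = 0.5) -> bool:
    """Evaluate every request on its own merits; being 'inside' grants nothing."""
    if not req.authenticated:
        return False
    if not req.device_compliant or req.risk_score > max_risk:
        return False
    return (req.role, req.action, req.resource) in POLICY  # least privilege


base = dict(user="alice", role="backup-operator", authenticated=True,
            device_compliant=True, risk_score=0.1, resource="volume/finance")
print(authorize(Request(action="read", **base)))   # True
print(authorize(Request(action="write", **base)))  # False: not in the policy
```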

Q. What does white hat vs. black hat mean?

A. In the world of hackers, a “black hat” is a malicious actor that actually wants to steal your data or compromise your system for their own purposes. A “white hat” is an ethical (or reformed) hacker that attempts to compromise your security systems with your permission, in order to verify their security or find vulnerabilities so you can fix them. There are entire companies and industry groups that make looking for security vulnerabilities a full-time job.

Q. Do I need to hire someone to try to hack into my systems to see if they are secure?

A. To achieve the highest levels of information security, it is often helpful to hire a “white hat” hacker to scan your systems and try to break into them. Some organizations are required by regulation to do this periodically to verify the security of their systems. This is sometimes referred to as “penetration testing” or “ethical hacking” and can include physical as well as electronic testing of an infrastructure, or even placing suspicious calls and sending suspicious emails to employees to test their security training. Known IT security vulnerabilities are typically documented and published once discovered. You might have your own dedicated security team that regularly tests your operating systems, applications, and network for known vulnerabilities and performs penetration testing, or you can hire independent third parties to do this. Some security companies sell tools you can use to test your network and systems for known security vulnerabilities.

Q. Can you go over the difference between authorization and authentication again?

A. Authorization is a mechanism for verifying that a person or application has the authority to perform certain actions or access specific data. Authentication is a mechanism or process for verifying a person is who he or she claims to be. For example, use of passwords, secure tokens/badges, or fingerprint/iris scans upon login (or physical entry) can authenticate who a person is. After login or entry, the use of access control lists, color coded badges, or permissions tables can determine what that person is authorized to do.
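The two checks can be kept separate in code as well. In this minimal sketch, authentication verifies a salted PBKDF2 password hash using Python's standard `hashlib` and `hmac` modules, and authorization then consults an access-control list. The user store, ACL contents, and iteration count are hypothetical.

```python
import hashlib
import hmac
import os

ITERATIONS = 100_000  # illustrative PBKDF2 work factor


def hash_password(password: str, salt: bytes) -> bytes:
    """Derive a slow, salted hash so stored values are not plain passwords."""
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)


# Hypothetical user store (salt, hash) and access-control list.
_salt = os.urandom(16)
USERS = {"alice": (_salt, hash_password("correct horse", _salt))}
ACL = {"alice": {"reports/q3.csv": {"read"}}}


def authenticate(user: str, password: str) -> bool:
    """Authentication: is this caller really `user`?"""
    record = USERS.get(user)
    if record is None:
        return False
    salt, expected = record
    # Constant-time comparison avoids leaking how many bytes matched.
    return hmac.compare_digest(hash_password(password, salt), expected)


def is_authorized(user: str, resource: str, action: str) -> bool:
    """Authorization: may this already-authenticated user perform `action`?"""
    return action in ACL.get(user, {}).get(resource, set())


if authenticate("alice", "correct horse"):
    print(is_authorized("alice", "reports/q3.csv", "read"))   # True
    print(is_authorized("alice", "reports/q3.csv", "write"))  # False
```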

Q. Can you explain what non-repudiation means, and how you can implement it?

A. Non-repudiation is a method or technology that guarantees the accuracy or authenticity of information or an action, preventing it from being repudiated (successfully disputed or challenged). For example, a digitally signed hash could prove that a retrieved file is authentic, or a combination of biometric authentication with an audit log could prove that a particular person was the one who logged into a system or accessed a file.
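One building block for the audit-log approach is a tamper-evident log in which each entry's hash covers the previous entry's hash, so any later edit breaks the chain. This sketch (hypothetical names, SHA-256 from Python's `hashlib`) provides integrity only. Full non-repudiation additionally requires each entry to be digitally signed with a key only the actor holds, and the latest hash should be anchored somewhere the log's owner cannot rewrite, since truncating the tail is otherwise undetectable.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(actor: str, action: str, prev: str) -> str:
    body = json.dumps({"actor": actor, "action": action, "prev": prev}, sort_keys=True)
    return hashlib.sha256(body.encode()).hexdigest()


def append_entry(log: list, actor: str, action: str) -> None:
    """Append an entry whose hash covers its content and the previous entry's hash."""
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"actor": actor, "action": action, "prev": prev,
                "hash": _digest(actor, action, prev)})


def verify(log: list) -> bool:
    """Detect edited, reordered, or removed entries (except truncation of the tail)."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev:
            return False
        if entry["hash"] != _digest(entry["actor"], entry["action"], entry["prev"]):
            return False
        prev = entry["hash"]
    return True


log = []
append_entry(log, "alice", "login (fingerprint verified)")
append_entry(log, "alice", "read /finance/q3.xlsx")
print(verify(log))  # True
log[1]["action"] = "read /public/readme.txt"
print(verify(log))  # False
```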

Q. Why would an attacker want to infiltrate data into a data center, as opposed to exfiltrating (stealing) data out of the data center?

A. Usually malicious actors (hackers) want to exfiltrate (remove) valuable data. But sometimes they want to infiltrate (insert) malware into the target’s data center so this malware can carry out other attacks.

Q. What is ransomware, and how does it work?

A. Ransomware typically encrypts, hides or blocks access to an organization’s critical data, then the malicious actor who sent it demands payment or action from the organization in return for sharing the password that will unlock the ransomed data.

Q. Can you suggest some ways to track and report attacking resources?

A. Continuous monitoring tools such as Splunk can be used.
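Monitoring tools such as Splunk do this at scale, but the core idea can be sketched in a few lines: parse authentication events and flag sources with repeated failures. The log format, the threshold of three, and the addresses (taken from documentation ranges) are hypothetical.

```python
import re
from collections import Counter

# Hypothetical log format; real monitoring tools parse many formats.
FAILED = re.compile(r"FAILED_LOGIN user=(?P<user>\S+) src=(?P<src>\S+)")


def suspicious_sources(lines, threshold: int = 3) -> dict:
    """Count failed logins per source address; return those at or above threshold."""
    counts = Counter()
    for line in lines:
        match = FAILED.search(line)
        if match:
            counts[match.group("src")] += 1
    return {src: n for src, n in counts.items() if n >= threshold}


sample = [
    "2019-11-01T10:00:01 FAILED_LOGIN user=root src=203.0.113.7",
    "2019-11-01T10:00:02 FAILED_LOGIN user=admin src=203.0.113.7",
    "2019-11-01T10:00:03 FAILED_LOGIN user=oracle src=203.0.113.7",
    "2019-11-01T10:00:05 LOGIN_OK user=alice src=198.51.100.20",
    "2019-11-01T10:00:09 FAILED_LOGIN user=alice src=198.51.100.20",
]
print(suspicious_sources(sample))  # {'203.0.113.7': 3}
```

A source that tries many different account names in quick succession, like the first address here, is a typical sign of password guessing worth reporting.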

Q. Does “trust nobody” mean, don’t trust root/admin user as well?

A. “Trust nobody” means there should be no presumption of trust; instead, all users and requests should be authenticated and authorized. For example, it could mean changing the default root/admin password, requiring most administrative work to be done through specific accounts (instead of the root/admin account), and monitoring all users (including root/admin) to detect inappropriate behavior.

Q. How do I determine my greatest vulnerability or the weakest link in my security?

A. Activities such as Threat Models and Security Assessments can assist in determining weakest links.

Q. What does a ‘trust boundary’ mean?

A. A trust boundary is a point where program data or execution changes its level of “trust”, for example, between the Internet and an intranet.

We are busy planning the rest of this webcast series. Please follow us on Twitter @SNIANSF for notifications of dates and times for each presentation.
