Explore Interop’s Storage Track

Apr 18, 2016

SNIA’s Persistent Memory Education To Be Featured at Open Server Summit 2016

Apr 12, 2016

If you are in Silicon Valley or the Bay Area this week, SNIA welcomes you to join them and the Solid State Storage Initiative April 13-14 at the Santa Clara Convention Center for Open Server Summit 2016, the industry’s premier event that focuses on the design of next-generation servers with topics on data center efficiency, SSDs, core OS, cloud server design, the future of open server and open storage, and other efforts toward combining industry-standard hardware with open-source software.

The SNIA NVDIMM Special Interest Group is featured at OSS 2016, and will host a panel Thursday April 14 on NVDIMM technology, moderated by Bill Gervasi of JEDEC and featuring SIG members Diablo Technology, Netlist, and SMART Modular. The panel will highlight the latest activities in the three “flavors” of NVDIMM, and offer a perspective on the future of persistent memory in systems. Also, SNIA board member Rob Peglar of Micron Technology will deliver a keynote on April 14, discussing how new persistent memory directions create new approaches for system architects and enable entirely new applications involving enormous data sets and real-time analysis.

SSSI will also be in booth 403 featuring demonstrations by the NVDIMM SIG, discussions on SSD data recovery and erase, and updates on solid state storage performance testing. SNIA members and colleagues can register for $100 off using the code SNIA at http://www.openserversummit.com.

Questions Aplenty on NVMe over Fabrics

Apr 12, 2016

Our live SNIA-ESF Webcast, “Under the Hood with NVMe over Fabrics,” generated more questions than we anticipated, proving to us that this topic is worthy of future discussions. Here are answers to both the questions we took during the live event as well as those we didn’t have time for.

Q. So fabric is an alternative to PCIe, for those of us familiar with PCIe-attached NVMe devices, yes?

A. Yes, fabric is the term used in the specification that represents a variety of physical interconnects and transports for NVM Express.

Q. How are the namespaces shared in a fabric?

A. Namespaces are NVM subsystem resources and are accessible by all controllers in the NVM subsystem. Multi-host access may be coordinated using reservations.

Q. If there are multiple subsystems accessing same NVMe devices over fabric then how is namespace shared?

A. The mapping of fabric NVM subsystem resources (namespaces and controllers) to PCIe NVMe device subsystems is implementation specific. They may be mapped 1 to 1 or N to 1, depending on the functionality of the NVMe bridge.

Q. Are namespace reservations similar to SCSI reservations?

A. Yes

Q. Are there plans for defining bindings for Intel Omni Path fabric?

A. Intel Omni-Path is a good candidate fabric for NVMe over Fabrics.

Q. Is hybrid attachment allowed? Could a single namespace be attached to a fabric and PCIe (through two controllers) concurrently?

A. At this moment, such a hybrid configuration is not permitted within the specification.

Q. Is a NVM sub-system purpose built or commodity server hardware?

A. This is a difficult question to answer. At the time of this writing there are not enough “off-the-shelf” commodity components to be able to construct NVMe over Fabric subsystems.

Q. Does NVMEoF use the same NVMe PCIe controller register map?

A. A subset of the NVMe controller register mapping was retained for fabrics but renamed to “Properties” to avoid confusion.

Q. So does NVMe over Fabric act like an extension of the PCIe bus? Meaning that I see the same MMIO registers and queues remotely? Or is it a completely different protocol that is solely message based? Will current NVMe host drivers work on the fabric or does it really require a different driver stack?

A. Fabrics is not an extension of PCIe; it’s an extension of NVMe. It uses the same NVMe Submission and Completion Queue model and descriptors as PCIe NVMe. Most of the original NVMe host driver stack is retained and shared between PCIe and Fabrics; the bottom layer was modified to allow for multiple transports.

Q. Does NVMe over Fabrics support immediate data for writes, or must write data always be fetched by the NVMe controller?

A. Yes, immediate data is termed “in-capsule” and is used to send the NVMe command data with the NVMe submission entry.

Q. As far as I know, Linux introduced a multi-queue model at the block layer recently. Is it the same thing you are mentioning?

A. No, but NVMe uses the Linux Block-MQ layer. NVMe Multi-Queue is used between the host and the NVMe controller for both PCIe and fabric based controllers.

Q. Are there situations where you might want to have more than one queue pair per CPU? What are they?

A. Queue-Pairs are matched up by CPU cores, not CPUs, which allows the creation of multiple namespace entities per CPU. This, in turn, is very useful for virtualization and application separation.

Q. What are three mandatory commands? Do they refer to read/write/sync cache?

A. Actually, there are 13 required commands. Kevin Marks has a very good presentation from the Flash Memory Summit that provides a list of these commands within the broader NVMe context. You can download it here.

Q. Please talk about queue depths? Arbitrary? Limited?

A. Maximum queue depths are controller defined, up to a limit of 64K entries.

Q. Where will SQs and CQs be physically located? Are they on host memory or SSD memory?

A. For fabrics, the SQ is located on the controller side to avoid the inefficiency of having to pull SQEs across a fabric. CQs reside on the host.

Q. How do you create ordering guarantee when that is needed for correctness?

A. For commands that require sequencing, there is a concept called “Fused Commands” which get sent as a single unit.

Q. In NVMeoF how are devices discovered?

A. NVMeoF devices are discoverable via a couple of different means, depending on whether you are using Fibre Channel (which has its own discovery and login process) or an iSCSI-like name server. Mike Shapiro goes over the discovery mechanism in considerable detail in this BrightTALK Webcast.

Q. I guess all new drivers will be required for NVMeoF?

A. Yes, new drivers are being written and will be required for NVMeoF.

Q. Why can’t the doorbell+ plus communication model apply to PCIe? I mean, why doesn’t PCIe use doorbell+?

A. NVMe 1.2 defines controller resident buffers that can be used for pushing SQ Entries from the host to the controller. Doorbells are still required for PCIe to inform the controller about the new SQ entries.

Q. If there are two hosts connected to the same subsystem, will the NVMe controller have two queues, one for each host?

A. Yes

Q. So with your command and data description, does NVMe over Fabric require RDMA or does it have a “Data Ready” type message to tell the host when to send write data?

A. Data transfer operations are fabric dependent. RDMA uses RDMA_READ, another transport may use some form of Data Ready model.

Q. Can you quantify the protocol translation overhead? In reality, that does not look like that big from performance perspective.

A. Submission Queue entries are 64 bytes and Completion Queue entries are 16 bytes. These are small relative to block storage traffic, which typically consists of requests of 4K or larger.
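To put those sizes in proportion, here is a rough back-of-the-envelope sketch. It counts only the 64-byte submission entry and 16-byte completion entry from the answer above and deliberately ignores any fabric transport headers, which vary by transport. The function name `queue_entry_overhead` is made up for this illustration.

```python
SQE_BYTES = 64  # submission queue entry size, from the answer above
CQE_BYTES = 16  # completion queue entry size, from the answer above


def queue_entry_overhead(payload_bytes: int) -> float:
    """Return SQE + CQE bytes as a fraction of the data payload.

    Transport framing (RDMA, Fibre Channel, etc.) is NOT included.
    """
    if payload_bytes <= 0:
        raise ValueError("payload_bytes must be positive")
    return (SQE_BYTES + CQE_BYTES) / payload_bytes


for size in (4096, 65536):
    print(f"{size} B payload: {queue_entry_overhead(size):.2%} queue-entry overhead")
```

For a 4 KiB request the two queue entries add roughly 2% on top of the data, and the share shrinks as requests get larger.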

Q. Do Dual Port SSDs need to support two Admin Qs since they have two paths to the same host?

A. Dual-Port or multi-path capable NVM subsystems require using two NVMe controllers each with one AdminQ and one or more IO queues.

Q. For a Dual Port SSD, does each port need to have its Submission Q on a different CPU core in the host? I assume the SQs for the two ports cannot be on the same CPU core.

A. The mapping of controller queues to host CPU cores is typically per controller. If the host was connected to two controllers, there would be two queues per core. One queue to controller 1 and one queue to controller 2 per host core.
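The per-core, per-controller layout described above can be sketched as a simple lookup table. This is only an illustration of the "one queue per controller per core" idea; the queue labels and the function name `build_io_queue_map` are hypothetical and do not correspond to any driver's naming.

```python
from itertools import product


def build_io_queue_map(num_cores, controllers):
    """Map each (core, controller) pair to a hypothetical I/O queue label."""
    return {
        (core, ctrl): f"{ctrl}-ioq-core{core}"
        for core, ctrl in product(range(num_cores), controllers)
    }


# A host with 4 cores connected to two controllers (assumed names).
queue_map = build_io_queue_map(4, ["ctrl1", "ctrl2"])

# Each core ends up with one queue per controller.
queues_on_core0 = [label for (core, _), label in queue_map.items() if core == 0]
print(queues_on_core0)
```

With two controllers, every core holds two I/O queues, one to each controller, which matches the dual-port case in the question.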

Q. As you mentioned, the standard currently uses LBA addressing. What will happen when Intel goes to market with its new media (3D XPoint), which is announced to be byte addressable?

A. The NVMe NVM command set is block based and is independent of the type and access method of the NVM media used in a subsystem implementation.

Q. Is there a real benefit of this architecture in a NAS environment?

A. There is a natural advantage to making any storage access more efficient. A network-attached system still requires block access at the lower levels, and NVMe (either local or over a Fabric) can improve NAS design and flexibility immensely. This is particularly true for pNFS and scale-out SMB paradigms.

Q. How do you handle authentication across many servers (hosts) on the fabric? How do you decide what host can access what part of each device? Does it have to be namespace specific?

A. The fabrics specification defines an Authentication model and also defines the naming format for NVM subsystems and hosts. A target implementation can choose to provision NVM subsystems to specific host based on the naming format.

Q. Having same structure at all layers means at the transport layer of flash appliance also we should maintain the submission and completions Queue model and these mapped to physical Queue of NVMe sub controller?

A. The NVMe Submission Queue and Completion Queue entries are common between fabrics and PCIe NVMe. This simplifies the steps required to bridge between NVMe fabrics and NVMe PCIe. An implementation may choose to map the fabrics SQ directly to a PCIe NVMe SSD SQ to provide a very efficient, simple NVMe transport bridge.

Q. With an RDMA based transport, how will each host discover the NVME controller(s) that it has been granted access to?

A. Please see the answer above.

Q. Traditionally SAS supports SAS expander for scaling purpose. How does NVMe over fabric solve the issue as there is no expander concept in NVMe world?

A. Recall that SAS expanders compensate for SCSI’s inherent lack of scalability. NVMe perpetuates the multi-queue model (which does not exist for SCSI) natively, so SAS expander-like pieces are not required for scale-out.

NFS FAQ – Test Your Knowledge

Apr 7, 2016

How would you rate your NFS knowledge? That’s the question Alex McDonald and I asked our audience at our recent live Webcast, “What is NFS?” From those who considered themselves to be NFS experts to those who thought NFS was a bit of a mystery, we got some great questions. As promised, here are answers to all of them. If you think of additional questions, please comment in this blog and we’ll get back to you as soon as we can.

Q. I hope you touch on dNFS in your presentation

A. Oracle Direct NFS (dNFS) is a client built into Oracle’s database system that Oracle claims provides faster and more scalable access to NFS servers. As it’s proprietary, SNIA doesn’t really have much to say about it; we’re vendor neutral, and it’s not the only proprietary NFS client out there. But you can read more here if you wish at the Oracle site.

Q. Will you be talking about pNFS?

A. We did a series of NFS presentations that covered pNFS a little while ago. You can find them here.

Q. What is the difference between SMB vs. CIFS? And what is SAMBA? Is it a type of SMB protocol?

A. It’s best explained in this tutorial that covers SMB. Samba is the open source implementation of SMB for Linux. Information on Samba can be found here.

Q. Will you touch upon how file permissions are maintained when users come from an SMB or a non-SMB connection? What are best practices?

A. Although NFS and SMB share some common terminology for security (ACLs or Access Control Lists), the implementations are different. The ACL security model in SMB is richer than the NFS file security model. I touched on some of those differences during the Webcast, but my advice is: don’t expect the two security domains of SMB (or Samba, the open source equivalent) and NFS to perfectly overlap. Where possible, try to avoid the requirement, but if you do need the ability to file share, talk to your NFS server supplier. A Google search on “nfs smb mixed mode” will also bring up tips and best practices.

Q. How do you tune and benchmark NFSv4?

A. That’s a topic in its own right! This paper gives an overview and how-to of benchmarking NFS; but it doesn’t explain what you might do to tune the system. It’s too difficult to give generic advice here, except to say that vendors should be relied on to provide their experience. If it’s a commercial solution, they will have lots of experience based on a wide variety of use cases and workloads.

Q. Is using NFS to provide block storage a common use case?

A. No, it’s still fairly unusual. The most common use case is for files in directories. Object and block support are relatively new, and there are more NFS “personalities” being developed; see our ESF Webcast on NFSv4.2 for more information.

Q. Can you comment about file locking issues over NFS?

A. Locking is needed by NFS to maintain file consistency in the face of multiple readers and writers. Locking in NFSv3 was difficult to manage; if a server failed or clients went AWOL, then the lock manager would be left with potentially thousands of stale locks. They often required manual purging. NFSv4 simplifies that by being a stateful protocol, and by integrating the lock management functions and employing timeouts and state, it can manage client and server recovery much more gracefully. Locks are, in the main, automatically released or refreshed after a failure.
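The timeout idea can be illustrated with a toy lock table in which every lock carries an expiry time. This is a heavily simplified sketch, not the NFSv4 protocol itself: the class name `LeaseLockTable`, the 90-second lease, and the explicit `now` argument are all assumptions made for the example, and real servers also handle grace periods, client IDs, and recovery.

```python
class LeaseLockTable:
    """Toy lock table where a lock lapses unless its owner renews it."""

    def __init__(self, lease_seconds):
        self.lease_seconds = lease_seconds
        self._locks = {}  # path -> (owner, expiry_time)

    def holder(self, path, now):
        """Return the current owner, or None if unlocked or expired."""
        entry = self._locks.get(path)
        if entry is None or entry[1] <= now:
            return None
        return entry[0]

    def acquire(self, path, owner, now):
        """Take the lock if it is free, expired, or already ours."""
        current = self.holder(path, now)
        if current is not None and current != owner:
            return False
        self._locks[path] = (owner, now + self.lease_seconds)
        return True

    def renew(self, owner, now):
        """Extend every unexpired lock held by this owner."""
        for path, (held_by, expiry) in list(self._locks.items()):
            if held_by == owner and expiry > now:
                self._locks[path] = (owner, now + self.lease_seconds)


table = LeaseLockTable(lease_seconds=90)
table.acquire("/export/file", "client-a", now=0)
blocked = table.acquire("/export/file", "client-b", now=10)      # lease still live
recovered = table.acquire("/export/file", "client-b", now=100)   # client-a went silent
```

Because a silent client simply stops renewing, its locks lapse on their own, which is the behavior that removes the need for manual purging of stale locks.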

Q. Where do things like AFS come into play? Above NFS? Below NFS? Something completely different?

A. AFS is another distributed file system, but it is not POSIX compliant. It influenced but is not directly related to NFS. Its use is relatively small; SMB and NFS dominate. Wikipedia has a good overview.

Q. As you said NFSv4 can hide some of the directories when exporting to clients. Can this operation hide different folders for different clients?

A. Yes. It’s possible to maintain completely different exports to expose or hide whatever directories on the server you wish. The pseudo file system is built separately for each server export. So you can have export X with subdirectories A B and C; or export Y with subdirectories B and C only.
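The export X / export Y example above can be modeled as a small lookup. This is a conceptual sketch only, not NFS server configuration syntax; the client names and the client-to-export assignment are assumptions added for the illustration.

```python
# Directories exposed by each export, taken from the example above.
EXPORTS = {
    "X": {"A", "B", "C"},
    "Y": {"B", "C"},
}

# Hypothetical assignment of clients to exports (not from the original text).
CLIENT_EXPORT = {
    "client1": "X",
    "client2": "Y",
}


def visible_directories(client):
    """Return the sorted directories a client sees through its export."""
    export = CLIENT_EXPORT.get(client)
    if export is None:
        return []  # unknown clients see nothing in this sketch
    return sorted(EXPORTS[export])


print(visible_directories("client1"))
print(visible_directories("client2"))
```

Here `client2` never sees directory A because its export's view of the server simply does not include it.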

Q. Similar to DFS-N and DFS-R in combination, if a user moves to a different location, does NFS have a similar methodology?

A. I’m not sure what DFS-N and DFS-R do in terms of location transparency. NFS can be set up such that if you can contact a particular server, and if you have the correct permissions, you should be able to see the same exports regardless of where the client is running.

Q. Which daemons should be running on server side and client side for accessing filesystem over NFS?

A. This is NFS server and client specific. You need to look at the documentation that comes with each.

Q. Regarding VMware 6.0. Why use NFS over FC?

A. Good question but you’ll need to speak to VMware to get that question answered. It depends on the application, your infrastructure, your costs, and the workload.

Curious about Your Storage Knowledge? It's a Quick "Test" with SNIA Storage Foundations Certification Practice Exam

Apr 7, 2016

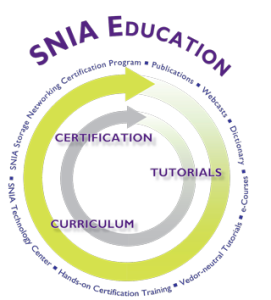

Many storage professionals choose to begin with the SNIA Storage Foundations Certification, according to Michael Meleedy, SNIA's Director of Education. "The SNIA Foundations Exam (S10-110), newly revised to integrate new technologies and industry practices, is the entry-level exam within the SNIA Storage Networking Certification Program (SNCP)," Meleedy explained. "It has been widely accepted by the storage industry as the benchmark for basic vendor-neutral storage credentials. In fact, vendors like Dell require this certification."

Try the Practice Exam!

We recommend considering Spring as the best time to test your skills - and a NEW SNIA Storage Foundations Certification Practice exam makes it very easy. This practice exam is short (easy to squeeze into your busy day) and the sample of questions from the real exam will help you quickly determine if you have the skills required to pass the industry’s only vendor-neutral certification exam. It's open to everyone free of charge with the results available immediately. Take the practice exam.

Why Should I Explore the SNCP?

Professionals often wonder about the real value of IT related certifications. Is it worth your time and money to become certified? "Yes, especially in today's global marketplace," said Paul Talbut, SNIA Global Education and Regional Affiliate Program Director. "SNIA certifications provide storage and data management practitioners worldwide with an industry recognised uniform standard by which individual knowledge and skill-sets can be judged. We're reaching a variety of professional audiences; for example, SNIA's Foundations Exam is available both in English and Japanese, and is offered at all Prometric testing centers worldwide."

Learn more about the new SNIA Foundations exam (S10–110) and study materials, the entire range of SNIA Certification Testing, and the six good reasons why you should be SNIA certified! Visit http://www.snia.org/education/certification.

Ethernet RDMA Protocols Support for NVMe over Fabrics – Your Questions Answered

Mar 21, 2016

Our recent SNIA Ethernet Storage Forum Webcast on How Ethernet RDMA Protocols iWARP and RoCE Support NVMe over Fabrics generated a lot of great questions. We didn’t have time to get to all of them during the live event, so as promised here are the answers. If you have additional questions, please comment on this blog and we’ll get back to you as soon as we can.

Q. Are there still actual (memory based) submission and completion queues, or are they just facades in front of the capsule transport?

A. On the host side, they’re “facades” as you call them. When running NVMe/F, host reads and writes do not actually use NVMe submission and completion queues. That data just comes from and to RNIC RDMA queues. On the target side, there could be real NVMe submissions and completion queues in play. But the more accurate answer is that it is “implementation dependent.”

Q. Who places the command from NVMe queue to host RDMA queue from software standpoint?

A. This is managed by the kernel host software in code written to the NVMe/F specification. The idea is that any existing application that thinks it is writing to the existing NVMe host software will in fact cause the SQE entry to be encapsulated and placed in an RDMA send queue.

Q. You say “most enterprise switches” support NVMe/F over RDMA, I guess those are ‘new’ ones, so what is the exact question to ask a vendor about support in an older switch?

A. For iWARP, any switch that can handle Internet traffic will do. Mellanox and Intel have different answers for RoCE / RoCEv2. Mellanox says that for RoCE, it is recommended, but not required, that the switch support Priority Flow Control (PFC). Most new enterprise switches support PFC, but you should check with your switch vendor to be sure. Intel believes RoCE was architected around DCB. The name itself, RoCE, stands for “RDMA over Converged Ethernet,” i.e., Ethernet with DCB. Intel believes RoCE in general will require PFC (or some future standard that delivers equivalent capabilities) for efficient RDMA over Ethernet.

Q. Can you comment on when one should use RoCEv2 vs. iWARP?

A. We gave a high-level overview of some of the deployment considerations on slide 30. We refer you to some of the vendor links on slide 32 for “non-vendor neutral” perspectives.

Q. If you take RDMA out of equation, what is the key advantage of NVMe/F over other protocols? Is it that they are transparent to any application?

A. NVMe/F allows the application to bypass the SCSI stack and uses native NVMe commands across a network. Most other block storage protocols require using the SCSI protocol layer, translating the NVMe commands into SCSI commands. With NVMe/F you also gain parallelism, simplicity of the command set, a separation between administrative sessions and data sessions, and a reduction of latency and processing required for NVMe I/O operations.

Q. Is ROCE v1 compatible with ROCE v2?

A. Yes. Adapters speaking RoCEv2 can also maintain RDMA connections with adapters speaking RoCEv1 because RoCEv2 ports are backwards interoperable with RoCEv1. Most of the currently shipping NICs supporting RoCE support both RoCEv1 and RoCEv2.

Q. Are RoCE and iWARP the only way to use Ethernet as a fabric for NVMe/F?

A. Initially yes; only iWARP and RoCE are supported for NVMe over Ethernet. But the NVM Express Working Group is also targeting FCoE. We should have probably been clearer about that, though it is noted on slide 11.

Q. What about doing NVMe over Fibre Channel? Is anyone looking at, or doing this?

A. Yes. This is not in scope for the first spec release, but the NVMe WG is collaborating with the FCIA on this. So NVMe over Fibre Channel is expected as another standard in the near future, to be promoted by T11.

Q. Do RoCE and iWARP both use just IP addresses for management or is there a higher level addressing mechanism, and management?

A. RoCEv2 uses the RoCE Connection Manager, and iWARP uses TCP connection management. They both use IP for addressing.

Q. Are there other fabrics to run NVMe over fabrics? Can you do this over OmniPath or Infiniband?

A. InfiniBand is in scope for the first spec release. Also, there is a related effort by the FCIA to support NVMe over Fibre Channel in a standard that will be promoted by T11.

Q. You indicated NVMe stack is in kernel while RDMA is a user level verb. How are NVMe SQ/ CQ entries transferred from NVMe to RDMA and vice versa? Also, could smaller transfers in NVMe (e.g. SGL of 512B) combined to larger sizes before being sent to RDMA entries and vice versa?

A. NVMe/F supports multiple scatter-gather entries to combine multiple noncontiguous transfers. However, the protocol doesn’t support chaining multiple NVMe commands in the same command capsule; a command capsule contains only a single NVMe command. Please also refer to slide 18 from the presentation.
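The distinction between combining buffers and combining commands can be sketched with two small data classes. The field names, the `build_capsules` helper, and the string opcodes are all hypothetical and are not the specification's wire format; the sketch only shows that one capsule carries one command, while that command may describe several scattered buffers.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class SglEntry:
    """One scatter-gather element: a buffer address and its length."""
    address: int
    length: int


@dataclass
class CommandCapsule:
    """Exactly one NVMe command, with any number of SGL entries."""
    opcode: str
    sgl: list = field(default_factory=list)

    def total_length(self):
        return sum(entry.length for entry in self.sgl)


def build_capsules(commands):
    """Wrap each (opcode, sgl_entries) pair in its own capsule; never merge commands."""
    return [CommandCapsule(opcode, list(entries)) for opcode, entries in commands]


# Two 512-byte buffers travel in ONE write command; a second command
# still needs its own capsule.
capsules = build_capsules([
    ("write", [SglEntry(0x1000, 512), SglEntry(0x8000, 512)]),
    ("read", [SglEntry(0x2000, 4096)]),
])
print(len(capsules), capsules[0].total_length())
```

So small noncontiguous buffers can be gathered inside a single command, but two separate commands always produce two capsules.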

Q. 1) How do implementers and adopters today test NVMe deployments? 2) Besides latency, what other key performance indicators do implementers and adopters look for to determine whether the NVMe deployment is performing well or not?

A. 1) Like any other datacenter specification, testing is done by debugging, interop testing and plugfests. Local NVMe is well supported and can be tested by anyone. NVMe/F can be tested using pre-standard drivers or solutions from various vendors. UNH-IOL is an organization with an excellent reputation for helping here. 2) Latency, yes. But also sustained bandwidth, IOPS, and CPU utilization, i.e., the “usual suspects.”

Q. If RoCE CM supports ECN, why can’t it be used to implement a full solution without requiring PFC?

A. Explicit Congestion Notification (ECN) is an extension to TCP/IP defined by the IETF. First point is that it is a standard for congestion notification, not congestion management. Second point is that it operates at L3/L4. It does nothing to help make the L2 subnet “lossless.” Intel and Mellanox agree that generally speaking, all RDMA protocols perform better in a “lossless,” engineered fabric utilizing PFC (or some future standard that delivers equivalent capabilities). Mellanox believes PFC is recommended but not strictly required for RoCE, so RoCE can be deployed with PFC, ECN, or both. In contrast, Intel believes that for RoCE / RoCEv2 to deliver the “lossless” performance users expect from an RDMA fabric, PFC is in general required.

Q. How involved are Ethernet RDMA efforts with the SDN/OCP community? Is there a coming example of RoCE or iWarp on an SDN switch?

A. Good question, but neither RoCEv2 nor iWARP look any different to switch hardware than any other Ethernet packets. So they’d both work with any SDN switch. On the other hand, it should be possible to use SDN to provide special treatment with respect to say congestion management for RDMA packets. Regarding the Open Compute Project (OCP), there are various Ethernet NICs and switches available in OCP form factors.

Q. Is there a RoCE v3?

A. No. There is no RoCEv3.

Q. iWARP and RoCE both fall back to TCP/IP in the lowest communication sense? So they are somewhat compatible?

A. They can speak sockets to each other. In that sense they are compatible. However, for the usage model we’re considering here, NVMe/F, RDMA is required. Because of L3/L4 differences, RoCE and iWARP RNICs cannot speak RDMA to each other.

Q. So in case of RDMA (ROCE or iWARP), the NVMe controller’s fabric port is Ethernet?

A. Correct. But it must be RDMA-enabled Ethernet.

Q. What if I am using soft RoCE, do I still need an RNIC?

A. Functionally, soft RoCE or soft iWARP should work on a regular NIC. Whether the performance is sufficient to keep up with NVMe SSDs without the hardware offloads is a different matter.

Q. How would the NVMe controller know that a command is placed in the submission queue by the Fabric host driver? Is the fabric host driver responsible for notifying the NVMe controller through remote doorbell trigger or the Fabric target driver should trigger the doorbell?

A. No separate notification by the host required. The fabric’s host driver simply sends a command capsule to notify its companion subsystem driver that there is a new command to be processed. The way that the subsystem side notifies the backend NVMe drive is out of the scope of the protocol.

Q. I am chair of ETSI NFV working group on NFV acceleration. We are working on virtual RDMA and how VM can benefit from hardware independent RDMA. One corner stone of this is virtual-RDMA pseudo device. But there is not yet consensus on minimal set of verbs to be supported: Do you think this minimal verb set can be identified? Last, the transport address space is not consistent between IB, Ethernet. How supporting transport independent RDMA?

A. You know, the NVM Express Working Group is working on exactly these questions. They have to define a “minimal verb set” since NVMe/F generates the verbs. Similarly, I’d suggest looking to the spec to see how they resolve the transport address space differences.

Q. What’s the plan for Linux submission of NVMe over Fabric changes? What releases are being targeted?

A. The Linux Driver WG in the NVMe WG expects to submit code upstream within a quarter of the spec being finalized. At this time it looks like the most likely Linux target will be kernel 4.6, but it could end up being kernel 4.7.

Q. Are NVMe SQ/CQ transferred transparently to RDMA Queues or can they be modified?

A. The method defined in the NVMe/F specification entails a transparent transfer. If you wanted to modify an SQE or CQE, do so before initiating an NVMe/F operation.

Q. How common are rNICs for recent servers? i.e. What’s a quick check I can perform to find out if my NIC is an rNIC?

A. rNICs are offered by nearly all major server vendors. The best way to check is to ask your server or NIC vendor if your NIC supports iWARP or RoCE.

Q. This is most likely out of the scope of this talk but could you perhaps share about 30K level on the differences between “NVMe controller” hardware versus “NVMeF” hardware. It’s most likely a combination of R-NIC+NVMe controller, but would be great to get your take on this.

A. A goal of the NVMe/F spec is that it work with all existing NVMe controllers and all existing RoCE and iWARP RNICs. So on even a very low level, we can say “no difference.” That said, of course, nothing stops someone from combining NVMe controller and rNIC hardware into one solution.

Q. Are there any example Linux targets in the distros that exercise RDMA verbs? An iWARP or iSER target in a distro?

A. iSER allows this using a LIO or TGT SCSI target.

Q. Is there a standard or IP for RDMA NIC?

A. The various RNICs are based on IBTA, IETF, and IEEE standards, as shown on slide 26.

Q. What is the typical additional latency introduced comparing NVMe over Fabric vs. local NVMe?

A. In the 2014 IDF demo, the prototype NVMe/F stack matched the bandwidth of local NVMe with a latency penalty of only 8µs over a local iWARP connection. Other demonstrations have shown an added fabric latency of 3µs to 15µs. The goal for the final spec is under 10µs.

Q. How well is NVMe over RDMA supported for Windows?

A. It is not currently supported, but then the spec isn’t even finished. Contact Microsoft if you are interested in their plans.

Q. RDMA over Ethernet would not support Layer 2 switching? How do you deal with TCP over head?

A. L2 switching is supported by both iWARP and RoCE. Both flavors of RNICs have MAC addresses, etc. iWARP had to deal with TCP/IP in hardware, a TCP/IP Offload Engine or TOE. The TOE used in an iWARP RNIC is significantly constrained compared to a general purpose TOE and therefore can operate with very high performance. See the Chelsio website for proof points. RoCE does not use TCP so does not need to deal with TCP overhead.

Q. Does RDMA not work with fibre channel?

A. They are totally different Transports (L4) and Networks (L3). That said, the FCIA is working with NVMe, Inc. on supporting NVMe over Fibre Channel in a standard to be promoted by T11.

Are Hard Drives or Flash Winning in Actual Density of Storage?

Mar 9, 2016
