Cloud Object Storage – You’ve Got Questions, We’ve Got Answers

Aug 30, 2016

The SNIA Cloud Storage Initiative hosted a live Webcast “Cloud Object Storage 101.” Like any “101” type course, there were a lot of good questions. Here they all are – with our answers. If you have additional questions, please let us know by commenting on this blog.

Q. How do you envision the new role of tape (LTO) in this unstructured data growth?

A. Exactly the same way that tape has always played a part: it’s the storage medium that requires no power to store cold data and is cheap per bit. Although it has a limited shelf life, and although we believe that flash will eventually replace it, tape still has a secure and growing role for the foreseeable future.

Q. What are your thoughts on whether object storage can exist outside the bounds of supporting file systems? Block devices directly storing objects using the key as reference and removing the intervening file system? A hierarchy of objects instead of files?

A. All of these things. Objects can be identified by an ID in a flat, non-hierarchical namespace; we can impose a hierarchy through key-to-object-ID translation; or an object may itself contain a complete file system or be treated like a block device. There are really no restrictions on how we build the metadata that describes these structures over the bytes of storage that make up an object.
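To make the key-to-object-ID idea concrete, here is a minimal Python sketch, assuming an in-memory store; `FlatObjectStore` and `KeyIndex` are hypothetical names, not any vendor's API. The store itself only knows opaque IDs, and the "hierarchy" exists purely in the key index layered on top.

```python
import uuid
from typing import Dict, List


class FlatObjectStore:
    """Hypothetical flat namespace: opaque object ID -> bytes, no hierarchy."""

    def __init__(self) -> None:
        self._objects: Dict[str, bytes] = {}

    def put(self, data: bytes) -> str:
        object_id = uuid.uuid4().hex  # opaque ID; carries no path information
        self._objects[object_id] = data
        return object_id

    def get(self, object_id: str) -> bytes:
        return self._objects[object_id]


class KeyIndex:
    """Hypothetical metadata layer that imposes a path-like hierarchy on the flat store."""

    def __init__(self, store: FlatObjectStore) -> None:
        self._store = store
        self._key_to_id: Dict[str, str] = {}

    def put(self, key: str, data: bytes) -> None:
        # Translate the hierarchical key to a freshly allocated object ID.
        self._key_to_id[key] = self._store.put(data)

    def get(self, key: str) -> bytes:
        return self._store.get(self._key_to_id[key])

    def list_prefix(self, prefix: str) -> List[str]:
        # "Directories" are just shared key prefixes; nothing is nested in the store.
        return sorted(k for k in self._key_to_id if k.startswith(prefix))


index = KeyIndex(FlatObjectStore())
index.put("scans/2016/a.dcm", b"A")
index.put("scans/2016/b.dcm", b"B")
index.put("backups/x.tar", b"X")
print(index.list_prefix("scans/2016/"))  # ['scans/2016/a.dcm', 'scans/2016/b.dcm']
```

Swapping `KeyIndex` for a different metadata layer (for example, one mapping keys to byte ranges inside a single large object) would need no change to the underlying flat store.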

Q. Can you run write-intensive, low-latency applications on object storage, e.g., virtual machines?

A. Yes. Object storage can be built from the same components as other high-performance storage systems, for instance flash connected via high-bandwidth, low-latency networks. Object stores could even be built over PCIe and NVDIMM.

Q. Is erasure coding (EC) expensive in terms of networking and resources utilization (especially in case of rebuild)?

A. No, that’s one of the advantages of EC. Rebuilds take place by reading data from many disks and writing it to many disks; in traditional RAID rebuilds, the focus is normally on the one disk that’s being rebuilt.
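The rebuild pattern can be sketched with the simplest possible erasure code, a single XOR parity fragment (k data fragments plus 1 parity, as in RAID 5). This is a deliberate simplification: production object stores typically use Reed-Solomon or similar codes that tolerate several lost fragments, and each fragment would live on a different disk or node. The function names are illustrative only.

```python
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data: bytes, k: int) -> List[bytes]:
    """Split data into k equal, zero-padded fragments and append one XOR parity fragment."""
    frag_len = -(-len(data) // k)  # ceiling division
    padded = data.ljust(frag_len * k, b"\0")
    fragments = [padded[i * frag_len:(i + 1) * frag_len] for i in range(k)]
    return fragments + [reduce(xor_bytes, fragments)]


def rebuild(fragments: List[Optional[bytes]], lost: int) -> bytes:
    """Recover one missing fragment by XOR-ing every surviving fragment.

    Each survivor would be read from a different disk, so the rebuild load is
    spread across many devices rather than concentrated on one.
    """
    survivors = [f for i, f in enumerate(fragments) if i != lost]
    return reduce(xor_bytes, survivors)


frags = encode(b"cloud object storage", k=4)  # 20 bytes -> 4 x 5-byte fragments + parity
damaged: List[Optional[bytes]] = list(frags)
damaged[2] = None  # simulate a failed disk
print(rebuild(damaged, lost=2) == frags[2])  # True
```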

Q. Is there any overhead for small files or object use cases? Do you have a recommended size?

A. Each system will have its own advantages and disadvantages for objects of specific sizes. In general, object stores are designed to store billions of objects, so the number of objects is usually not an issue.
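One way to see why small objects can carry overhead is a back-of-the-envelope efficiency calculation. The sketch below assumes a hypothetical layout in which both the object data and its per-object metadata are rounded up to whole allocation units; the 4 KiB unit and 512-byte metadata figure are illustrative, not measurements of any product.

```python
def round_up(n: int, unit: int) -> int:
    """Round n up to the next multiple of unit."""
    return -(-n // unit) * unit


def storage_efficiency(object_size: int, metadata_bytes: int, alloc_unit: int) -> float:
    """Fraction of consumed capacity that holds user data (hypothetical layout)."""
    consumed = round_up(object_size, alloc_unit) + round_up(metadata_bytes, alloc_unit)
    return object_size / consumed


for size in (1024, 64 * 1024, 1024 * 1024):
    print(size, round(storage_efficiency(size, metadata_bytes=512, alloc_unit=4096), 4))
```

Under these assumptions a 1 KiB object uses only 12.5% of the capacity it consumes, while a 1 MiB object is above 99%, which is why the "right" object size depends so heavily on each system's internal layout.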

Q. Can you comment on Internet bandwidth limitations on geographically dispersed erasure coded data?

A. Smart caching can make a big difference, but at the end of the day, a geographically dispersed, erasure-coded object store won’t be faster than a local store. You can’t beat the speed of light.
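The speed-of-light limit is easy to quantify. The sketch below computes a lower bound on round-trip time over optical fiber, assuming signals travel at roughly two thirds of c (silica fiber has a refractive index of about 1.5) and ignoring routing detours, queuing, and processing. With a k-of-n erasure code, a read must gather k fragments, so the bound is set by the k-th nearest site; the site distances in the example are hypothetical.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458
FIBER_VELOCITY_FACTOR = 2 / 3  # approximation: refractive index of silica fiber ~1.5


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time over fiber, ignoring every non-propagation delay."""
    one_way_s = distance_km / (SPEED_OF_LIGHT_KM_S * FIBER_VELOCITY_FACTOR)
    return 2 * one_way_s * 1000


def min_ec_read_ms(site_distances_km: list, k: int) -> float:
    """A k-of-n read needs k fragments, so it waits at least for the k-th nearest site."""
    rtts = sorted(min_round_trip_ms(d) for d in site_distances_km)
    return rtts[k - 1]


sites = [50, 800, 2500, 4000]  # hypothetical distances to four fragment sites
print(f"{min_ec_read_ms(sites, k=3):.1f} ms")  # 25.0 ms, before any disk or network overhead
```

Caching can hide this for hot data, but no amount of software lowers the floor for a cold read that has to reach the third-nearest site.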

Q. The suppliers all claim easy exit strategies from their systems. If we were to use one of the on-premise solutions such as ECS or Cleversafe, and then down the road decide to move off-premise, is the migration/egress typically as easy as claimed?

A. In general, any proprietary interface might lock you in. SNIA’s Cloud Data Management Interface (CDMI) is vendor-neutral and supported by a number of vendors, and Amazon’s S3 is a popular and common interface. Ultimately, vendors want your data on their systems, and that means making it easy to get data off a competing vendor’s system; lock-in is not what vendors want. Talk to your vendor and ask about other users’ experiences to confirm their claims.
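Whatever the interface, it is worth verifying a migration rather than trusting it. The sketch below copies every object from one store to another and confirms each copy by comparing SHA-256 digests of what was written and what is read back. `ObjectStore` is a hypothetical minimal interface standing in for an S3 or CDMI client, and `InMemoryStore` exists only so the example runs; a real migration would also need to carry over metadata, handle pagination, and retry failures.

```python
import hashlib
from typing import Dict, List, Protocol


class ObjectStore(Protocol):
    """Hypothetical minimal interface; a real client would speak S3, CDMI, etc."""

    def list_keys(self) -> List[str]: ...
    def get(self, key: str) -> bytes: ...
    def put(self, key: str, data: bytes) -> None: ...


class InMemoryStore:
    """Toy store used only to make the example runnable."""

    def __init__(self) -> None:
        self.objects: Dict[str, bytes] = {}

    def list_keys(self) -> List[str]:
        return sorted(self.objects)

    def get(self, key: str) -> bytes:
        return self.objects[key]

    def put(self, key: str, data: bytes) -> None:
        self.objects[key] = data


def migrate(src: ObjectStore, dst: ObjectStore) -> List[str]:
    """Copy every object, re-read it from dst, and return keys whose digests differ."""
    failed = []
    for key in src.list_keys():
        data = src.get(key)
        dst.put(key, data)
        if hashlib.sha256(dst.get(key)).hexdigest() != hashlib.sha256(data).hexdigest():
            failed.append(key)
    return failed


old, new = InMemoryStore(), InMemoryStore()
old.put("xray/001.dcm", b"\x00\x01")
old.put("backup/2016-08.tar", b"archive")
print(migrate(old, new))  # [] when every copy verifies
```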

Q. Based on factual information, where are you seeing the most common use cases for Object Storage?

A. There are many, and each vendor of cloud storage has particular markets. Backup is a common case, as are systems in the healthcare space that treat data such as scans and X-rays as objects.

Q. NAS filers only scale up, not out. They are hard to manage at scale. Why use them anymore?

A. There are many NAS systems that scale out as well as up. NFSv4 supports a high degree of scale-out, and file systems like Gluster provide very large-scale solutions indeed, into the multi-petabyte range.

Q. Are there any specific use cases to avoid when considering object storage?

A. Yes. Many legacy applications will not generate any savings or gains if moved to object storage.

Q. Would you agree with industry statements that 80% of all data written today will NEVER be accessed again; and that we just don’t know WHICH 20% will be read again?

A. Yes to the first part, and no to the second. Knowing which 80% is cold is the trick. The industry is developing smart ways of analyzing data to ensure that cached data is hot data and that cold data is placed correctly the first time around.
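The simplest form of this placement decision is an age-based rule: anything accessed recently stays on the fast (cached) tier, and everything else goes to a cold tier. The sketch below assumes a hypothetical 30-day window and a map of last-access timestamps; the smarter analytics mentioned above would replace this single threshold with predictions about future access.

```python
from datetime import datetime, timedelta
from typing import Dict


def plan_placement(last_access: Dict[str, datetime], now: datetime,
                   hot_window: timedelta = timedelta(days=30)) -> Dict[str, str]:
    """Assign each object to 'hot' or 'cold' by time since its last access."""
    return {
        key: "hot" if now - accessed <= hot_window else "cold"
        for key, accessed in last_access.items()
    }


now = datetime(2016, 8, 30)
print(plan_placement({
    "scan-today": datetime(2016, 8, 29),
    "scan-2015": datetime(2015, 11, 2),
}, now))  # {'scan-today': 'hot', 'scan-2015': 'cold'}
```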

Q. Is it also possible to bring “compliance” into object storage? (Thinking about banking, medical, and other sensitive data that needs tracking, retention, etc.)

A. Yes. Many object storage vendors provide software to do this.
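As a rough illustration of what such software enforces, here is a minimal write-once-read-many (WORM) retention sketch. `ComplianceBucket` is hypothetical: it refuses overwrites of existing objects, refuses deletes before a per-object retain-until date, and records every attempt in an audit log. Real products add much more (legal holds, tamper-evident logs, protection against clock changes), and this should not be read as any vendor's implementation.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Dict, List, Tuple


class RetentionError(Exception):
    pass


@dataclass
class StoredObject:
    data: bytes
    retain_until: datetime


class ComplianceBucket:
    """Hypothetical WORM bucket with time-based retention and an audit trail."""

    def __init__(self) -> None:
        self._objects: Dict[str, StoredObject] = {}
        self.audit_log: List[Tuple[datetime, str, str]] = []

    def put(self, key: str, data: bytes, retain_until: datetime, now: datetime) -> None:
        if key in self._objects:  # write-once: existing objects are never overwritten
            self.audit_log.append((now, "put-denied", key))
            raise RetentionError(f"{key} already exists and is write-once")
        self._objects[key] = StoredObject(data, retain_until)
        self.audit_log.append((now, "put", key))

    def delete(self, key: str, now: datetime) -> None:
        if now < self._objects[key].retain_until:
            self.audit_log.append((now, "delete-denied", key))
            raise RetentionError(f"{key} is under retention")
        del self._objects[key]
        self.audit_log.append((now, "delete", key))


bucket = ComplianceBucket()
bucket.put("patient-42/scan.dcm", b"...", retain_until=datetime(2023, 8, 30),
           now=datetime(2016, 8, 30))
try:
    bucket.delete("patient-42/scan.dcm", now=datetime(2020, 1, 1))
except RetentionError as err:
    print(err)  # patient-42/scan.dcm is under retention
```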


Innovate Here - Storage Developer Conference Returns September 19-22 in Santa Clara CA

Aug 29, 2016

, and many more.

Check out our lineup of keynote speakers - they'll inspire you with their journeys! Dan Maslowski, Global Engineering Head at Citigroup CATE, will lead us on a dive down the software-defined storage rabbit hole to define cloud architecture in the data center. Gary Grider, HPC Director at Los Alamos National Laboratory, will take us on a "Data Lake" cruise and show how to navigate requirements for long-term retention of mostly cold data. And Stephen Bates, Technical Director at Microsemi, will steer us along the Persistent Memory path, pointing out the stones to step on and the boulders to avoid.

SDC also includes two plugfests. Participants at the Cloud Interoperability Plugfest will test the interoperability of widely adopted cloud storage interfaces. We always have a large showing of CDMI implementations at this event, but we are also looking for implementations of S3 and Swift (and Cinder/Manila) interfaces. You can register for the Cloud Plugfest here. The SMB3 Plugfest enables vendors to bring their SMB3 implementations to test, identify, and fix bugs in a collaborative setting, providing a forum in which companies can develop interoperable products. You can sign up for the SMB3 Plugfest here.

Sound overwhelming? Don't worry, relax, there will be plenty of time to network with technical leaders representing the 160+ SNIA member companies and to tour the SDC exhibitors adjacent to the sessions for new applications and solutions.

SNIA Storage Developer Conference is September 19-22, 2016 at the Hyatt Regency Santa Clara. Check out the latest Agenda, read more about the Plugfests, and Register for SDC today!

Containers, Docker and Storage: An Introduction

Aug 26, 2016

Containers are the latest in a series of new and innovative ways to package, manage and deploy distributed applications. On October 6th, the SNIA Cloud Storage Initiative will host a live webcast, “Intro to Containers, Container Storage Challenges and Docker.” Together with our guest speaker from Docker, Keith Hudgins, we’ll begin by introducing the concept of containers. You’ll learn what they are and, through use cases, the advantages they bring; why you might want to consider them as an app deployment model; and how they differ from VMs or bare metal deployment environments.

We’ll follow up with a look at what is required from a storage perspective, specifically when supporting stateful applications, using Docker, one of the leading software containerization platforms that provides a lightweight, open and secure environment for the deployment and management of containers. Finally, we’ll round out our Docker introduction by presenting a few key takeaways from DockerCon, the industry-leading event for makers and operators of distributed applications built on Docker, that recently took place in Seattle in June of this year.

Join us for this discussion on:

- Application deployment history

- Containers vs. virtual machines vs. bare metal

- Factors driving containers and common use cases

- Storage ecosystem and features

- Container storage table stakes (focus on Enterprise-class storage services)

- Introduction to Docker

- Key takeaways from DockerCon 2016

This event is live, so we’ll be on hand to answer your questions. Please register today. We hope to see you on Oct. 6th!


Join the Online Survey on Disaster Recovery

Aug 15, 2016

To start things off for the Disaster Recovery Special Interest Group (SIG) described in the previous blog post, the DPCO Committee has put together an online survey of how enterprises are doing data replication and Disaster Recovery and what issues they are encountering. Please join this effort by responding to this brief survey at: https://www.surveymonkey.com/r/W3DRKYD

THANK YOU in advance for doing this! It should take less than 5 minutes to complete.


The Changing World of SNIA Technical Work - A Conversation with Technical Council Chair Mark Carlson

Aug 3, 2016

Mark Carlson is the current Chair of the SNIA Technical Council (TC). Mark has been a SNIA member and volunteer for over 18 years, and also wears many other SNIA hats. Recently, SNIA on Storage sat down with Mark to discuss his first nine months as the TC Chair and his views on the industry.

SNIA on Storage (SoS): Within SNIA, what is the most important activity of the SNIA Technical Council?

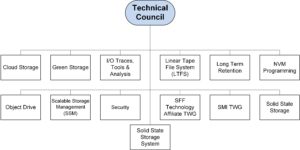

Mark Carlson (MC): The SNIA Technical Council works to coordinate and approve the technical work going on within SNIA. This includes both SNIA Architecture (standards) and SNIA Software. The work is conducted within 13 SNIA Technical Work Groups (TWGs). The members of the TC are elected from the voting companies of SNIA, and the Council also includes appointed members and advisors as well as SNIA regional affiliate advisors.

SoS: What has been your focus during the first nine months of 2016?

MC: The SNIA Technical Council has overseen a major effort to integrate a new standards organization into SNIA. The creation of the new SNIA SFF Technology Affiliate (TA) Technical Work Group has brought in a very successful group of folks and standards related to storage connectors and transceivers. This work group, formed in June 2016, carries forward the work of the longstanding SFF Committee, which operated from 1990 until mid-2016. In 2016, SFF Committee leaders transitioned organizational stewardship to SNIA, operating under a special membership class named Technology Affiliate while retaining their long-standing technical focus on specifications, as all SNIA TWGs do.

SoS: What changes did SNIA implement to form the new Technology Affiliate membership class and why?

MC: The SNIA Policy and Procedures were changed to account for this new type of membership. Companies can now join an Affiliate TWG without having to join SNIA as a US member. Current SNIA members who want to participate in a Technology Affiliate like SFF can join a Technology Affiliate and pay the separate dues. The SFF was a catalyst - we saw an organization looking for a new home as its membership evolved and its leadership transitioned. They felt SNIA could be this home but we needed to complete some activities to make it easier for them to seamlessly continue their work. The SFF is now fully active within SNIA and also working closely with T10 and T11, groups that SNIA members have long participated in.

SoS: Is forming this Technology Affiliate a one-time activity?

MC: Definitely not. SNIA is actively seeking organizations looking for the structure SNIA provides: IP policies, established infrastructure to conduct their work, and 160+ leading companies with volunteers who know storage and networking technology.

SoS: What are some of the customer pain points you see in the industry?

MC: Critical pain points the TC has started to address with new TWGs over the last 24 months include the performance of solid state storage arrays, where the SNIA Solid State Storage Systems (S4) TWG is working to identify, develop, and coordinate system performance standards for solid state storage systems; and object drives, where the Object Drive TWG is working to identify, develop, and coordinate standards for object drives operating as storage nodes in scale-out storage solutions. With a growing number of new disk drive interfaces emerging that add value ranging from external storage to in-storage compute, we want to make sure these drives can be managed at scale and are interoperable.

SoS: What's upcoming for the next six months?

MC: The TC is currently working on a white paper to address data center drive requirements and the features and existing interface standards that satisfy some of those requirements. Of course, not all the solutions to these requirements will come from SNIA, but we think SNIA is in a unique position to bring in the data center customers that need these new features and work with the drive vendors to prototype solutions that then make their way into other standards efforts. Features that are targeted at the NVM Express, T10, and T13 committees would be coordinated with these customers.

SoS: Can non-members get involved with SNIA?

MC: Until very recently, if a company wanted to contribute to a software project within SNIA, they had to become a member. This was limiting to the community, and cut off contributions from those who were using the code, so SNIA has developed a convenient Contributor License Agreement (CLA) for contributions to individual projects. This allows external contributions but does not change the software licensing. The CLA is compatible with the IP protections that the SNIA IP Policy provides to our members. Our hope is that this will create a broader community of contributors to a more open SNIA, and facilitate open source project development even more.

SoS: Will you be onsite for the upcoming SNIA Storage Developer Conference (SDC)?

MC: Absolutely! I look forward to meeting SNIA members and colleagues September 19-22 at the Hyatt Regency Santa Clara. We have a great agenda, now online, that the TC has developed for this, our 18th conference, and registration is now open. SDC brings in more than 400 of the leading storage software and hardware developers, storage product and solution architects, product managers, storage product quality assurance engineers, product line CTOs, storage product customer support engineers, and in-house IT development staff from around the world. If technical professionals are not familiar with the education and knowledge that SDC can provide, a great way to get a taste is to check out the SDC Podcasts now posted, and the new ones that will appear leading up to SDC 2016.


Flash Memory Summit Highlights SNIA Innovations in Persistent Memory & Flash

Jul 28, 2016

SNIA and the Solid State Storage Initiative (SSSI) invite you to join them at Flash Memory Summit 2016, August 8-11 at the Santa Clara Convention Center. SNIA members and colleagues receive $100 off any conference package by using the code “SNIA16” when registering for Flash Memory Summit by August 4 at http://www.flashmemorysummit.com

On Monday, August 8, from 1:00pm – 5:00pm, a SNIA Education Afternoon will be open to the public in SCCC Room 203/204, where attendees can learn about multiple storage-related topics with five SNIA Tutorials on flash storage, combined service infrastructures, VDBench, stored-data encryption, and Non-Volatile DIMM (NVDIMM) integration from SNIA member speakers.

Following the Education Afternoon, the SSSI will host a reception and networking event in SCCC Room 203/204 from 5:30 pm – 7:00 pm with SSSI leadership providing perspectives on the persistent memory and SSD markets, SSD performance, NVDIMM, SSD data recovery/erase, and interface technology. Attendees will also be entered into a drawing to win solid state drives.

SNIA and SSSI members will also be featured during the conference in the following sessions:

- Persistent Memory (Preconference Session C): NVDIMM presentation by Arthur Sainio, SNIA NVDIMM SIG Co-Chair (SMART Modular). Monday, August 8, 8:30 am – 12:00 noon

- Data Recovery of SSDs (Session 102-A): SIG activity discussion by Scott Holewinski, SSSI Data Recovery/Erase SIG Chair (Gillware). Tuesday, August 9, 9:45 am – 10:50 am

- Persistent Memory – Beyond Flash, sponsored by the SNIA SSSI (Forum R-21). Chairperson: Jim Pappas, SNIA Board of Directors Vice-Chair/SSSI Co-Chair (Intel); papers presented by SNIA members Rob Peglar (Symbolic IO), Rob Davis (Mellanox), Ken Gibson (Intel), Doug Voigt (HP), Neal Christensen (Microsoft). Wednesday, August 10, 8:30 am – 11:00 am

- NVDIMM Panel, organized by the SNIA NVDIMM SIG (Session 301-B). Chairperson: Jeff Chang, SNIA NVDIMM SIG Co-Chair (AgigA Tech); papers presented by SNIA members Alex Fuqa (HP), Neal Christensen (Microsoft). Thursday, August 11, 8:30 am – 9:45 am

Finally, don’t miss the SNIA SSSI in Expo booth #820 in Hall B and in the Solutions Showcase in Hall C on the FMS Exhibit Floor. Attendees can review a series of updated performance statistics on NVDIMM and SSD, see live NVDIMM demonstrations, access SSD data recovery/erase education, and preview a new white paper discussing erasure with regard to SSDs. SNIA representatives will also be present to discuss other SNIA programs such as certification, conformance testing, membership, and conferences.
