- How many CPU cores are needed (and how many am I willing to give)?

- Optane SSD or 3D NAND SSD?

- How deep should the Queue-Depth be?

- Do I need to care about MTU?

Optimizing NVMe over Fabrics Performance with Different Ethernet Transports: Host Factors

Aug 11, 2020

Take 10 – Watch a Computational Storage Trilogy

Jul 31, 2020

We’re all busy these days, and the thought of scheduling even more content to watch can be overwhelming. Great technical content – especially from the SNIA Educational Library – delivers what you need to know, but often it needs to be consumed in long chunks. Perhaps it’s time to shorten the content so you have more freedom to watch.

With the tremendous interest in computational storage, SNIA is at the forefront of standards development – and education. The SNIA Computational Storage Special Interest Group (CS SIG) has just produced a video trilogy – informative, packed with detail, and consumable in under 10 minutes!

What Is Computational Storage?, presented by Eli Tiomkin, SNIA CS SIG Chair, emphasizes the need for a common language and common definitions of computational storage terms, and discusses four distinct examples of computational storage deployments. It serves as a great introduction to the other videos.

Advantages of Reducing Data Movement frames computational storage advantages into two categories: saving time and saving money. JB Baker, SNIA CS SIG member, dives into a data filtering computational storage service example and an analytics benchmark, explaining how tasks complete more quickly using less power and fewer CPU cycles.

Eli Tiomkin returns to complete the trilogy with Computational Storage: Edge Compute Deployment. He discusses how an edge computing future might look, and how computational storage operates in a cloud, edge node, and edge device environment.

Each video in the Educational Library also has a downloadable PDF of the slides, which links to additional resources you can view at your leisure. The SNIA Compute, Memory, and Storage Initiative will be producing more of these short videos in the coming months on computational storage, persistent memory, and other topics.

Check out each video and download the PDF of the slides! Happy watching!


Data Reduction: Don’t Be Too Proud to Ask

Jul 29, 2020

It’s back! Our SNIA Networking Storage Forum (NSF) webcast series “Everything You Wanted to Know About Storage but Were Too Proud to Ask” will return on August 18, 2020. After a little hiatus, we are going to tackle the topic of data reduction.

Everyone knows data volumes are growing rapidly (25-35% per year according to many analysts), far faster than IT budgets, which are constrained to flat or minimal annual growth rates. One of the drivers of such rapid data growth is storing multiple copies of the same data. Developers copy data for testing and analysis. Users email and store multiple copies of the same files. Administrators typically back up the same data over and over, often with minimal to no changes.
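To put the 25-35% range in perspective, here is a quick calculation of how fast data doubles at a constant annual growth rate r. The constant rate is a simplifying assumption; real growth is rarely that steady. The doubling time is ln 2 / ln(1 + r).

```python
import math

def doubling_time_years(annual_growth: float) -> float:
    """Years for a quantity growing at a constant annual rate to double: ln 2 / ln(1 + r)."""
    return math.log(2) / math.log1p(annual_growth)

def growth_factor(annual_growth: float, years: int) -> float:
    """How many times larger a quantity becomes after compounding for `years` years."""
    return (1 + annual_growth) ** years

for rate in (0.25, 0.35):
    print(f"{rate:.0%} per year: doubles in {doubling_time_years(rate):.1f} years, "
          f"grows {growth_factor(rate, 5):.1f}x in 5 years")
```

At 25% per year data doubles in roughly 3.1 years, and at 35% in roughly 2.3 years; over five years that is about 3.1x and 4.5x growth, respectively, against budgets that stay flat or nearly so.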

To avoid a budget crisis and paying more than once to store the same data, storage vendors and customers can use data reduction techniques such as deduplication, compression, thin provisioning, clones, and snapshots.

On August 18th, our live webcast “Everything You Wanted to Know about Storage but Were Too Proud to Ask – Part Onyx” will focus on the fundamentals of data reduction, which can be performed in different places and at different stages of the data lifecycle. As with most technologies, there are several related ways to do this, with enough differences between them to cause confusion. For that reason, we’re going to be looking at:

- How companies end up with so many copies of the same data

- Difference between deduplication and compression – when should you use one vs. the other?

- Where and when to reduce data: application-level, networked storage, backups, and during data movement. Is it best done at the client, the server, the storage, the network, or the backup?

- What are snapshots, clones, and thin provisioning, and how can they help?

- When to collapse the copies: real-time vs. post-process deduplication

- Performance considerations
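The difference between deduplication and compression can be sketched in a few lines of Python. This is a minimal illustration, not how any particular product works: it assumes fixed-size 4 KiB chunks identified by SHA-256 fingerprints, and uses zlib as a stand-in for a storage compression engine. The helper names (`dedupe`, `rehydrate`, `stored_size`) are hypothetical.

```python
import hashlib
import os
import zlib

CHUNK_SIZE = 4096  # assumption: fixed-size 4 KiB chunks (many products use variable-size chunking)

def dedupe(data: bytes, chunk_size: int = CHUNK_SIZE):
    """Store each unique chunk once, keyed by its SHA-256 fingerprint."""
    store = {}   # fingerprint -> chunk bytes
    recipe = []  # ordered fingerprints needed to rebuild the data
    for i in range(0, len(data), chunk_size):
        chunk = data[i:i + chunk_size]
        fp = hashlib.sha256(chunk).digest()
        store.setdefault(fp, chunk)  # keep only the first copy of each chunk
        recipe.append(fp)
    return store, recipe

def rehydrate(store, recipe) -> bytes:
    """Rebuild the original byte stream from the chunk store and the recipe."""
    return b"".join(store[fp] for fp in recipe)

def stored_size(store) -> int:
    """Bytes actually kept after deduplication."""
    return sum(len(chunk) for chunk in store.values())

# Case 1: three identical copies of an incompressible 64 KiB file.
copies = os.urandom(64 * 1024) * 3
store, recipe = dedupe(copies)
assert rehydrate(store, recipe) == copies
print("copies: raw", len(copies), "dedup", stored_size(store),
      "zlib", len(zlib.compress(copies, 9)))

# Case 2: one log file with lots of internal redundancy but no repeated chunks.
log = "".join(f"2020-07-29 INFO request {i} ok\n" for i in range(5000)).encode()
log_store, _ = dedupe(log)
print("log:    raw", len(log), "dedup", stored_size(log_store),
      "zlib", len(zlib.compress(log, 9)))
```

In the first case deduplication keeps a single copy while zlib barely shrinks the data, partly because DEFLATE only looks back 32 KiB, so duplicates farther apart are invisible to it. In the second case the log has no repeated chunks, so deduplication saves nothing, while compression removes most of the redundancy inside the file. Many systems apply both.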

Register today for this efficient and educational webcast, which will cover valuable concepts with minimal repetition or redundancy!


Think your Backup is Your Archive? Think Again!

Jul 28, 2020

The challenges of archiving structured and unstructured data

Traditionally, organizations had two electronic storage technologies: disk and tape. Whilst disk became the primary storage medium, tape offered a cost-effective medium for storing infrequently accessed content.

This led organizations to consider tape not just a backup medium but the organization’s archive, which in turn resulted in monthly full system backups being retained for extended periods to meet archiving requirements.

Over time, legislative and regulatory bodies began to accept extended time delays for inquiries and investigations caused by tape restore limitations.

Since the beginning of this century, the following trends have impacted the IT industry:

- Single disk drive capacity has grown exponentially to multiple terabytes while delivering cost-effective performance levels.

- The exponential growth of unstructured data, driven by social media networks, the Internet of Things, etc., has exceeded all planned growth.

- The introduction of cloud storage (storage as a service), which offers an easy way to acquire storage services at virtually infinite scalability, with incremental investment that fits any organization’s financial planning.

All of the above have contributed to unprecedented growth in unstructured data that is straining IT budgets, growth that declining storage costs have not offset. Today, organizations are experiencing double-digit and, in some instances, triple-digit year-over-year growth in unstructured storage. The typical response is simply to buy or license more capacity to accommodate the growing data; however, this also creates an additional burden on IT to protect it.

What are some of the typical scenarios seen by business today?

- Given the dynamic nature of the IT industry, employee churn is affecting IT organizations. Typically, when an employee moves on, there is no process to retire their personal folders. A simple scan can often reveal a large number of files that have not been accessed or used for fifteen or twenty years, yet they continue to consume precious storage, backup licenses, and administrative time and money. Even when the time comes for a storage infrastructure refresh, these unused files become an integral part of the migration simply because there is no decision process for retiring data.

- In many cases, some of the data must be kept for many years to meet industry compliance requirements (e.g. healthcare records, banking records, checks, etc.), which complicates the process of retiring any data even further.

- Even with the low cost per GB offered by cloud providers, storing non-business-related data without justification can drive storage costs higher if left unmanaged, and the cost is compounded by the need for multi-region and/or multi-zone data repositories to provide resilient access to stored data.
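The “simple scan” for long-untouched files mentioned above could look something like the following Python sketch. The function names, the 15-year threshold, and the use of the later of access and modification time are assumptions for illustration. Last-access times are not maintained on volumes mounted with `noatime` and are only approximate with `relatime`, so the output is a list of candidates for review, not a deletion list.

```python
import os
import time

SECONDS_PER_YEAR = 365.25 * 24 * 3600

def find_stale_files(root: str, min_age_years: float):
    """Yield (path, size_bytes, age_years) for files untouched for at least min_age_years.

    "Untouched" here means the later of last-access (atime) and
    last-modification (mtime) time is older than the threshold.
    """
    now = time.time()
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                st = os.stat(path, follow_symlinks=False)
            except OSError:
                continue  # file vanished or is unreadable; skip it
            last_used = max(st.st_atime, st.st_mtime)
            age_years = (now - last_used) / SECONDS_PER_YEAR
            if age_years >= min_age_years:
                yield path, st.st_size, age_years

def summarize(root: str, min_age_years: float = 15):
    """Return (file_count, total_bytes) of stale files under root."""
    count = total = 0
    for _path, size, _age in find_stale_files(root, min_age_years):
        count += 1
        total += size
    return count, total
```

For example, `summarize("/shares/home", 15)` (a hypothetical path) would return the number and total size of files under that tree that have not been touched in 15 years.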

Industry response to managing unstructured data

Unstructured data growth is recognized by end-user organizations, storage vendors, and cloud providers alike. Responses range from extremely low-cost, virtually infinitely scalable storage platforms (e.g. object storage) to cloud object storage as a service with multi-tier pricing, coupled with varying performance and protection capabilities.

Tape vendors have also jumped on the bandwagon, trying to revive tape as an even lower-cost-per-GB archive medium, analogous to tape’s historical use as an archive.

Can tape backup be the ultimate archive platform?

- LTO (Linear Tape-Open, also marketed as Ultrium) was developed in the late 1990s by a consortium of storage vendors, with the first generation released in 2000, as a replacement for DLT (Digital Linear Tape), a technology owned by a single vendor.

- Since then, LTO has gone through multiple generations. The current generation, LTO 8, offers 12TB of native capacity per cartridge (up to 30TB compressed) at an approximate price of $100 per cartridge (under one cent per GB at native capacity), with virtually unlimited scalability, since tapes can be removed after writing and kept on the shelf as an archive.

- According to the LTO roadmap, LTO 9 is planned to ship in Q4 2020.

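- LTO drives have traditionally been able to read media two generations back and write media one generation back; beginning with LTO 8, compatibility is limited to one generation back for both reading and writing (e.g. LTO 8 drives can read and write LTO 7 cartridges).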

Hence, IT shops need to plan a tape migration roughly every five years just to maintain access to archive contents. Letting tape technology fall more than two generations behind may leave the archive unreadable.
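The cost-per-GB figure for tape is easy to check. The sketch below assumes LTO-8’s 12 TB native capacity, 30 TB at the 2.5:1 compression ratio used in LTO’s compressed-capacity figures, and the approximate $100 cartridge price quoted in this post. It counts media cost only (no drives, libraries, or handling), and the function names are hypothetical.

```python
import math

def cost_per_gb(price_usd: float, capacity_tb: float) -> float:
    """Media cost in US dollars per GB (decimal units: 1 TB = 1000 GB)."""
    return price_usd / (capacity_tb * 1000)

def cartridges_needed(data_tb: float, capacity_tb: float) -> int:
    """Whole cartridges required to hold data_tb at the given per-cartridge capacity."""
    return math.ceil(data_tb / capacity_tb)

PRICE_USD = 100          # approximate cartridge price
LTO8_NATIVE_TB = 12      # LTO-8 native capacity
LTO8_COMPRESSED_TB = 30  # assumes data actually compresses 2.5:1

print(f"native:     {cost_per_gb(PRICE_USD, LTO8_NATIVE_TB) * 100:.2f} cents/GB")
print(f"compressed: {cost_per_gb(PRICE_USD, LTO8_COMPRESSED_TB) * 100:.2f} cents/GB")
print(f"1 PB needs {cartridges_needed(1000, LTO8_NATIVE_TB)} cartridges (native)")
```

This gives roughly 0.83 cents per GB at native capacity (about 0.33 cents if the data really compresses 2.5:1), and 84 cartridges for 1 PB of uncompressed data.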

With tape solutions such as LTO 8 reaching 12TB of native capacity per cartridge at an approximate price of $100 per cartridge, tape clearly wins the price war hands down. However, there is a general trend away from tape, since it comes with the following burdens:

- In most environments, data is written to tape through backup software. Like LTO media, backup software regularly undergoes release updates to benefit from new processors, newer releases of operating systems and databases, newer libraries, and new capabilities, and backup vendors maintain only limited backward compatibility.

- There is no simple way to use data stored on tape, since it is not in a format that applications or search engines can use until it is restored by the same backup mechanism that wrote it in the first place.

- Managing tapes always involves an extensive manual process, which is prone to human error and can lead to restore problems.

- The impact of bit decay (bit rot): like all physical storage media, tape experiences gradual decay of stored bits over time. Single-bit errors are readily handled: disk controllers correct them, and on tape a Cyclic Redundancy Check (CRC) detects them so that error-correction coding can repair them. As the media ages to 15 years or more, however, multiple bit errors may accumulate, which in many cases can render tape data unrestorable.

Based on the above challenges, tape is nearly always disqualified as a retention medium, and the problem becomes more acute for data retention periods exceeding 15 years.

Additionally, managing billions of unstructured files with simple filesystem structures is a daunting task that strains IT resources and increases storage TCO exponentially.
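To illustrate the bit-decay point: a checksum such as CRC-32 recorded at write time reveals that a block has changed, but it cannot repair it. The Python sketch below simulates a single decayed bit using the standard `zlib.crc32`; the helper names `flip_bit` and `verify` are hypothetical. CRC-32 is guaranteed to detect any single-bit error. Correcting errors takes error-correction coding or a second copy, and once accumulated errors exceed what the ECC can handle, the data is lost.

```python
import zlib

def flip_bit(data: bytes, bit_index: int) -> bytes:
    """Return a copy of data with one bit inverted, simulating bit decay."""
    buf = bytearray(data)
    buf[bit_index // 8] ^= 1 << (bit_index % 8)
    return bytes(buf)

def verify(data: bytes, expected_crc: int) -> bool:
    """True if data still matches the CRC-32 recorded when it was written."""
    return zlib.crc32(data) == expected_crc

block = b"archived record " * 256   # a 4 KiB block (16 bytes x 256)
stored_crc = zlib.crc32(block)      # checksum recorded at write time

decayed = flip_bit(block, 12345)    # one bit decays on the media
print(verify(block, stored_crc))    # True
print(verify(decayed, stored_crc))  # False: the damage is detected, not repaired
```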

Is the issue the same with structured data growth?

Structured data (e.g. ERP applications, HR, databases, etc.) is not growing as fast as unstructured data; however, it is still subject to the following:

- Compliance mandates: almost all ERP systems contain data that is subject to one or more compliance requirements (e.g. financial transactions, HR records, etc.).

- There is no simple way to retire or archive structured data since there is no granular way to deal with records within a database.

- Mergers and acquisitions: there is always a need to consolidate and migrate databases and ERP systems. While this may help control the spiraling cost of structured data storage, compliance and the need to access old financial records mean that organizations end up keeping the retired ERP system alive just to provide access to historical financial records. This comes with the hefty cost of ERP and database licensing, and the need to run the aged ERP system on out-of-support systems, with the potential for permanent data loss once the system is no longer backed up.

- Without an effective process to control, retire, and access aged records within the ERP systems, databases will continue to grow year over year, which in turn impacts the overall system performance and dramatically escalates ERP license cost along with backup licensing and disaster recovery planning.

- Coupled with storage growth, failure to retire ERP systems’ data in line with the applicable regulatory mandates introduces severe compliance gaps and may result in financial penalties, in addition to unnecessary searches in response to an outdated investigation or data query.

- Almost all IT shops have experienced an ERP refresh in which the newly deployed ERP system is not backward compatible with the retired one; however, the need to access compliance records in the old system forces the organization to keep the retired application running until the end of the retention period, which in turn multiplies the challenges of managing storage growth and licenses.

Specific Recommendations:

Organizations should consider the following:

- Create a corporate governance practice to enforce and manage data retention rules. Ideally, this practice should be sponsored by the CIO and must include representatives of all divisions subject to compliance (e.g. legal, HR, finance, etc.) in addition to IT.

- Enforce data retention and retirement policies in strict alignment with regulations and/or corporate governance.

- Create a process and automated workflow to migrate data from live production to an archive platform and from archive to purge based on defined retention values with consideration to access frequency and critical nature of contents.

- Treat structured and unstructured data archiving separately, and challenge incumbent vendors on their structured data archiving capabilities.

- Investigate cost effective storage technologies that offer minimally managed, self-healing, resilient storage platforms where available.

- Consider tape as a viable option, since it continues to offer a cost-effective storage medium; however, many processes and strict rules will be required to avoid the well-known tape pitfalls mentioned previously.
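An automated retention workflow ultimately reduces to a per-record decision: keep, archive, or purge. The following Python sketch shows one possible shape for that rule. Everything in it is hypothetical: the one-year archive threshold, the retention clock starting at creation, and the legal-hold flag are assumptions that a real governance practice would define for each record class, and it looks only at last access rather than access frequency or criticality.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from enum import Enum

class Action(Enum):
    KEEP = "keep in production"
    ARCHIVE = "move to archive"
    PURGE = "purge"

@dataclass
class Record:
    name: str
    created: date
    last_accessed: date
    retention_years: int      # hypothetical: set per record class by the governance practice
    legal_hold: bool = False  # hypothetical: blocks purging while an inquiry is open

ARCHIVE_AFTER = timedelta(days=365)  # hypothetical threshold: archive after a year without access

def add_years(d: date, years: int) -> date:
    """Add calendar years, mapping Feb 29 to Feb 28 in non-leap years."""
    try:
        return d.replace(year=d.year + years)
    except ValueError:
        return d.replace(year=d.year + years, day=28)

def decide(rec: Record, today: date) -> Action:
    """Apply the retention rule first, then the access-based archiving rule."""
    retention_expired = today >= add_years(rec.created, rec.retention_years)
    if retention_expired and not rec.legal_hold:
        return Action.PURGE
    if today - rec.last_accessed >= ARCHIVE_AFTER:
        return Action.ARCHIVE
    return Action.KEEP

if __name__ == "__main__":
    today = date(2020, 7, 28)
    records = [
        Record("invoice-1999", date(1999, 3, 1), date(2003, 1, 15), retention_years=7),
        Record("hr-file-2016", date(2016, 5, 2), date(2017, 9, 1), retention_years=10),
        Record("orders-2020", date(2020, 1, 10), date(2020, 7, 20), retention_years=7),
    ]
    for r in records:
        print(r.name, "->", decide(r, today).value)
```

With these sample records the sketch reports purge, archive, and keep, respectively. Checking retention before access keeps expired data from lingering in the archive, while the legal-hold flag prevents a purge regardless of age.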

About the SNIA Data Protection & Privacy Committee

The SNIA Data Protection & Privacy Committee (DPPC) exists to further the awareness and adoption of data protection technology, and to provide education, best practices and technology guidance on all matters related to the protection and privacy of data.

Within SNIA, data protection is defined as the assurance that data is usable and accessible for authorized purposes only, with acceptable performance and in compliance with applicable requirements. The technology behind data protection will remain a primary focus for the DPPC. However, there is now also a wider context, driven by increasing legislation to keep personal data private. The term data protection also extends into areas of resilience to cyber attacks and to threat management. To join the DPPC, log in to SNIA and request to join the DPPC Governing Committee. Not a SNIA member? Learn all the benefits of SNIA membership.

Mounir Elmously, Governing Committee Member, SNIA Data Protection & Privacy Committee and Executive, Advisory Services Ernst & Young, LLP

Why did I join SNIA DPPC?

With my long history with storage and data protection technologies, along with my current job roles and responsibilities, I can bring my expertise to influence the storage industry technology education and drive industry awareness. During my days with storage vendors, I did not have the freedom to critique specific storage technologies or products. With SNIA I enjoy the freedom of independence to critique and use my knowledge and expertise to help others improve their understanding.


A Q&A on Protecting Data-at-Rest

Jul 28, 2020


Standards Watch: Blockchain Storage

Jul 24, 2020

Is there a standard for Blockchain today? Not really. What we are attempting to do is to build one.

Since 2008, when Satoshi Nakamoto’s White Paper was published and Bitcoin emerged, we have been learning about a new solution using a decentralized ledger and one of its applications: Blockchain.

Wikipedia defines Blockchain as follows: “A blockchain, originally block chain, is a growing list of records, called blocks, that are linked using cryptography. Each block contains a cryptographic hash of the previous block, a timestamp, and transaction data (generally represented as a Merkle tree).”
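The structure in that definition (each block holding the previous block’s hash, a timestamp, and a Merkle root of its transactions) can be sketched in Python. This is a simplified illustration, not Bitcoin’s actual format: it uses single SHA-256 (Bitcoin uses double SHA-256 and a fixed binary header), omits proof-of-work and any network, and the names `Block`, `append_block`, and `is_valid` are hypothetical.

```python
from __future__ import annotations

import hashlib
from dataclasses import dataclass

def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()

def merkle_root(transactions: list[bytes]) -> bytes:
    """Hash transactions pairwise up to one root; an odd level repeats its last hash."""
    if not transactions:
        return sha256(b"")
    level = [sha256(tx) for tx in transactions]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])
        level = [sha256(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0]

@dataclass
class Block:
    prev_hash: bytes           # hash of the previous block's header
    timestamp: int             # seconds since the epoch
    transactions: list[bytes]  # transaction data, summarized by the Merkle root
    merkle: bytes = b""

    def __post_init__(self):
        self.merkle = merkle_root(self.transactions)

    def header_hash(self) -> bytes:
        # The header commits to the previous hash, the timestamp, and the Merkle root.
        return sha256(self.prev_hash + self.timestamp.to_bytes(8, "big") + self.merkle)

GENESIS_PREV = bytes(32)  # all-zero "previous hash" for the first block

def append_block(chain: list[Block], transactions: list[bytes], timestamp: int) -> None:
    prev = chain[-1].header_hash() if chain else GENESIS_PREV
    chain.append(Block(prev, timestamp, transactions))

def is_valid(chain: list[Block]) -> bool:
    """Check every Merkle root and every link to the previous block."""
    expected_prev = GENESIS_PREV
    for block in chain:
        if block.prev_hash != expected_prev or block.merkle != merkle_root(block.transactions):
            return False
        expected_prev = block.header_hash()
    return True

chain = []
append_block(chain, [b"alice->bob:5", b"bob->carol:2"], 1595548800)
append_block(chain, [b"carol->alice:1"], 1595552400)
print(is_valid(chain))                        # True
chain[0].transactions[0] = b"alice->bob:500"  # tamper with recorded data
print(is_valid(chain))                        # False: the Merkle root no longer matches
```

Editing a transaction changes the block’s Merkle root; recomputing that root changes the block’s header hash, which then no longer matches the `prev_hash` stored in the next block. That chaining is what makes tampering visible.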

Today’s blockchain solutions have certain significant drawbacks:

Reliability: There is a consistent issue in solutions today. In most cases, they are not scalable and cannot be adopted by the industry in their current format. Teams developing solutions need financial backing and support, and when the backing stops, the chain disappears even if technically it was a great solution. We aren’t even touching on certain solutions that, upon analyzing the underlying technical approach, are not viable for the industry overall. But their intentions are good, and there is such a thing as trial and error, so we are still learning what would be a good approach to blockchain solutions.

Interoperability is one of the major obstacles, since the vast majority of chains have no interface for working across different chains, which essentially makes each one an internal company product or solution. You cannot use these in real-world applications if you want to exchange data and information. Granted, there are some nascent solutions that try to address this problem, and I know that our group will analyze and work with these companies and teams to see if we can create an exchange of best practices.

Data Accuracy: For data stored in a blockchain, whether a record is true or false relies on the immutability of the blockchain as a data store. An improved storage medium that makes careless or malicious actions visible and proves the authenticity of the data would make it possible to remove false data and flag attempts to corrupt the chain.

Latency is another aspect which is hindering adoption today. Regular transactions using databases and code are currently faster than utilization of the blockchain.

The new SNIA Blockchain Storage Technical Work Group (TWG) will focus on understanding the existing technical solutions and best practices. Why try to do something that has already been done? Instead, it’s better to add this to our knowledge of storage and networking to build a new open source specification for blockchain protocols that work across blockchain networks. The TWG will be utilizing aspects of the Proof of Capacity and Proof of Space protocols to address storage bottlenecks.

The Blockchain Storage TWG is currently supported by companies such as DELL EMC, IBM, Marvell, Western Digital, ActionSpot and Bankex. We come from different backgrounds, but our common goal is to build something great. For more information about this new technical work group, please contact membershipservices@snia.org.


The Role of AIOps in IT Modernization

Jul 23, 2020


Are We at the End of the 2.5-inch Disk Era?

Jul 20, 2020

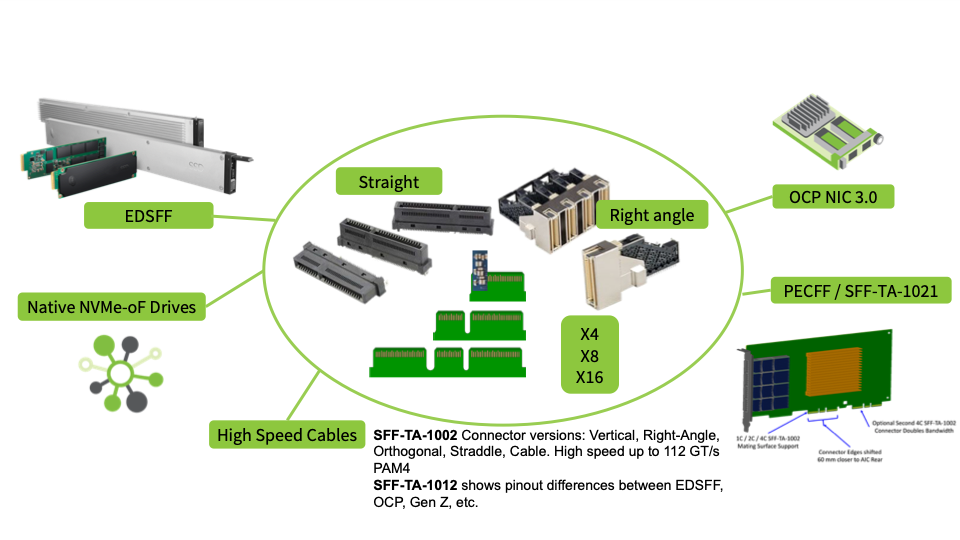

The SNIA Solid State Storage Special Interest Group (SIG) recently updated the Solid State Drive Form Factor page to provide detailed information on dimensions; mechanical, electrical, and connector specifications; and protocols. On our August 4, 2020 SNIA webcast, we will take a detailed look at one of these form factors - Enterprise and Data Center SSD Form Factor (EDSFF) – challenging an expert panel to consider if we are at the end of the 2.5-in disk era.

Enterprise and Data Center SSD Form Factor (EDSFF) is designed natively for data center NVMe SSDs to improve thermal, power, performance, and capacity scaling. EDSFF has different variants for flexible and scalable performance, dense storage configurations, general purpose servers, and improved data center TCO. At the 2020 Open Compute Virtual Summit, OEMs, cloud service providers, hyperscale data center operators, and SSD vendors showcased products and their vision for how this new family of SSD form factors solves real data challenges.

During the webcast, our SNIA experts from companies that have been involved in EDSFF since the beginning will discuss how they will use the EDSFF form factor:

- Hyperscale data center and cloud service provider panelists Facebook and Microsoft will discuss how E1.S (SNIA specification SFF-TA-1006) helps solve performance scalability, serviceability, capacity, and thermal challenges for future NVMe SSDs and persistent memory in 1U servers.

- Server and storage system panelists Dell, HPE, Kioxia, and Lenovo will discuss their goals for the E3 family and the new updated version of the E3 specification (SNIA specification SFF-TA-1008)

We hope you can join us as we spend some time on this important topic. Register here to save your spot.
