Got Questions on Container Storage? We’ve Got Answers!

Feb 27, 2019

What Drives SNIA Technical Work?

Feb 25, 2019

Mark Carlson (MC): SNIA does not just sit back and dream up work to do; rather, it relies on industry input and requirements. A great example of how SNIA work is created is the 2018 launch of the Computational Storage Technical Work Group (TWG). It all started at the Flash Memory Summit industry event with a simple Birds-of-a-Feather on a thing called "computational storage". Interest was definitely high (the room was packed), and the individuals assembled decided to use SNIA as the vehicle for defining and standardizing this new concept.

SOS: What made them choose SNIA?

MC: The industry views SNIA as a place where they can come to get agreement on technical issues. SNIA is committed to developing and promoting standards in the broad and complex fields of digital storage and information management to allow solutions to be more easily produced. Technical Work Groups (TWGs) are collaborative technical groups within the SNIA that work on specific areas of technology development.

In the case of computational storage, the industry clearly saw SNIA as a catalyst for getting computational storage off and running in the standards world: six months after FMS, we have a new Technical Work Group with 90 individuals from 30 member companies starting their work and evangelizing computational storage at events like March's OCP Summit.

SOS: What are some of the changing storage industry trends that might create a need for new SNIA efforts?

MC: We see some exciting activity in the areas of composable infrastructure, next-generation fabrics, and new data center innovations. Standard computer architectures in data centers today essentially use servers as the building blocks for assembling data-center-sized solutions. But imagine if CPUs, memory, storage, and networks were individual components on next-generation fabrics, making for more granular building blocks. A server would then be defined as an on-the-fly assemblage of these components, and scaling any individual platform instance would become a matter of combining sufficient components for the task.
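To make the "server as an on-the-fly assemblage" idea concrete, here is a minimal sketch of a pool of disaggregated components from which a logical server is composed and later released. The `Component`, `ResourcePool`, and `ComposedServer` names, the capacity units, and the first-fit selection are all hypothetical illustrations, not any vendor's or standard's composition API.

```python
from dataclasses import dataclass, field

@dataclass
class Component:
    """A hypothetical fabric-attached component."""
    kind: str      # "cpu", "memory", "storage", or "network"
    ident: str
    capacity: int  # illustrative units: cores, GiB, TB, or Gb/s depending on kind

@dataclass
class ComposedServer:
    name: str
    parts: list = field(default_factory=list)

class ResourcePool:
    """Free components reachable over a fabric (assumed model)."""

    def __init__(self, components):
        self.free = list(components)

    def compose(self, name, request):
        """Claim enough free capacity of each kind to satisfy `request`.

        `request` maps kind -> required capacity. Selection is first-fit.
        Raises ValueError and claims nothing if the pool falls short.
        """
        chosen = []
        for kind, needed in request.items():
            got = 0
            for comp in self.free:
                if got >= needed:
                    break
                if comp.kind == kind:
                    chosen.append(comp)
                    got += comp.capacity
            if got < needed:
                raise ValueError(f"insufficient {kind}: need {needed}, found {got}")
        for comp in chosen:
            self.free.remove(comp)  # only commit once every kind is satisfied
        return ComposedServer(name, chosen)

    def release(self, server):
        """Return a composed server's components to the pool."""
        self.free.extend(server.parts)
        server.parts.clear()
```

In this model, scaling an instance means composing it with more components rather than buying a bigger box, and releasing a server makes its parts immediately available to the next request.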

SOS: What role would SNIA play?

MC: Composable infrastructure will need to be managed differently than when servers are the building block. Rather than having a single point of management for multiple components in a box, management now needs to be component-based and handled by the components themselves. Redfish from the DMTF is already architected for this very issue of scale-out, and SNIA's Swordfish continues to extend this into storage architectures.

SNIA is already a pioneer in storage management and is anticipating this possible disruption by moving its standards to modern protocols with alliance partners like DMTF and NVM Express. DMTF Redfish and SNIA Swordfish are examples of this work, where Object Drive and other work items create a Redfish profile for NVMe drives. NVMe drives will be storage components and peers of the CPU, either attached to the host or detached and outside the box.
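Redfish (and Swordfish, which builds on it) models each managed component as a JSON resource reachable by URI, and clients discover components by following the links in resource collections. The sketch below parses a collection and returns its member links. The sample payload and its URIs are illustrative only; a real service returns its own, and this sketch makes no network calls.

```python
import json
from typing import List

# Illustrative payload shaped like a Redfish resource collection.
# The URIs are hypothetical examples, not taken from a real service.
SAMPLE_COLLECTION = """
{
  "@odata.id": "/redfish/v1/Systems/1/Storage",
  "Name": "Storage Collection",
  "Members@odata.count": 2,
  "Members": [
    {"@odata.id": "/redfish/v1/Systems/1/Storage/1"},
    {"@odata.id": "/redfish/v1/Systems/1/Storage/2"}
  ]
}
"""

def member_links(collection_json: str) -> List[str]:
    """Return the @odata.id link of every member in a Redfish collection."""
    doc = json.loads(collection_json)
    links = [member["@odata.id"] for member in doc.get("Members", [])]
    if "Members@odata.nextLink" in doc:
        # Paged collection: the count is the total, not this page's size,
        # so skip the consistency check (this sketch does not follow pages).
        return links
    declared = doc.get("Members@odata.count")
    if declared is not None and declared != len(links):
        raise ValueError(f"expected {declared} members, got {len(links)}")
    return links
```

A management client would fetch each returned link in turn; because every component is its own resource, the same walk works whether a drive sits inside a server or out on a fabric.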

Next generation fabrics are enabling these composable infrastructures, making new data center innovation possible. But the new fabrics themselves need to be managed as well.

SOS: Where can folks learn about these activities in person?

MC: Check out the Computational Storage Birds-of-a-Feather at USENIX FAST this week. In March, SNIA will present Persistent Memory, including a PM Programming Tutorial and Hackathon at the Non-Volatile Memory Workshop at UC San Diego; Computational Storage presentations at OCP Summit; and NVM alliance activities at the NVM Annual Members Meeting and Developer Day.

SOS: Exciting news indeed! Thanks, Mark, and we’ll look forward to seeing SNIA at upcoming events.


Hacking with the U

Feb 22, 2019

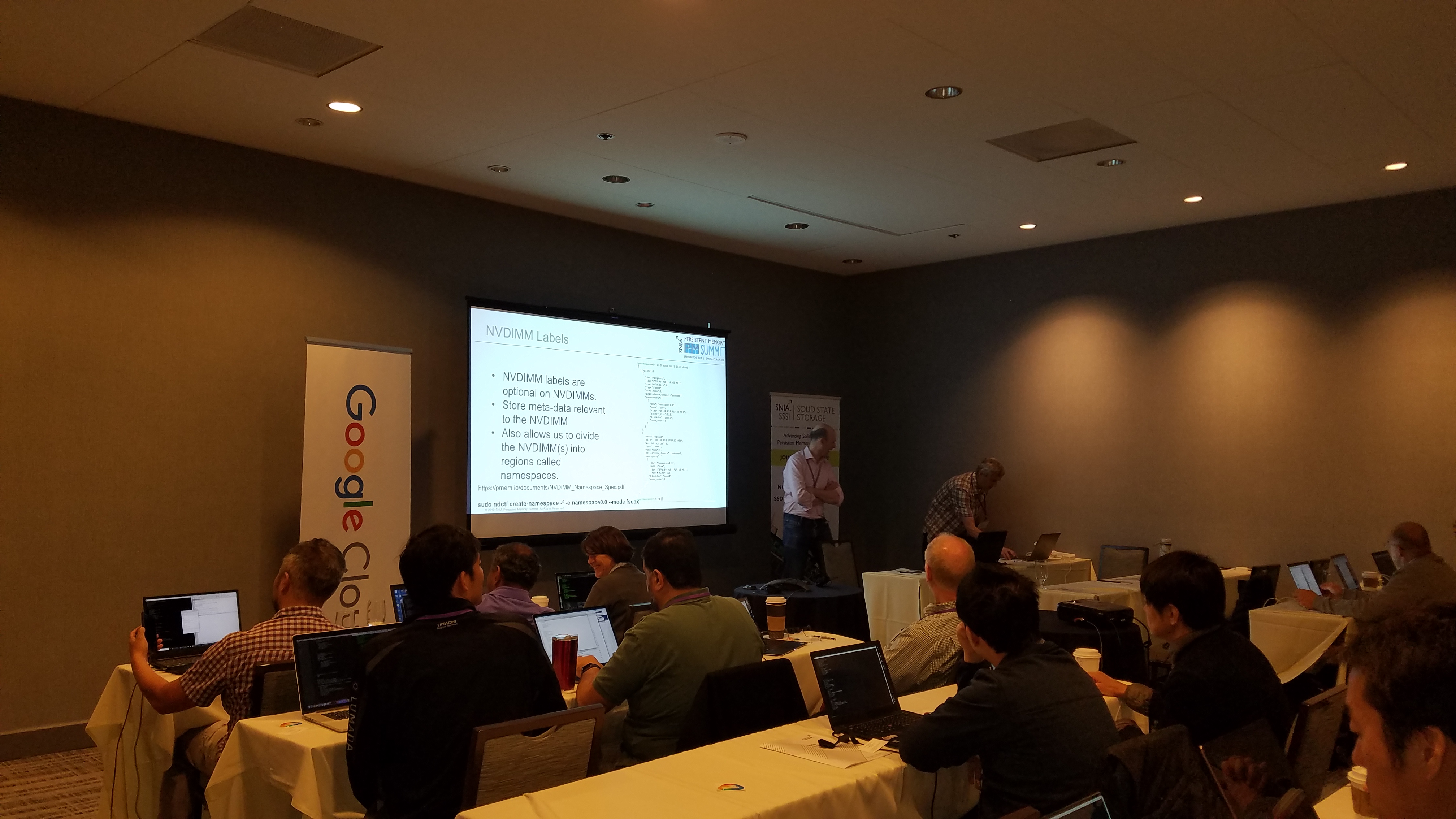

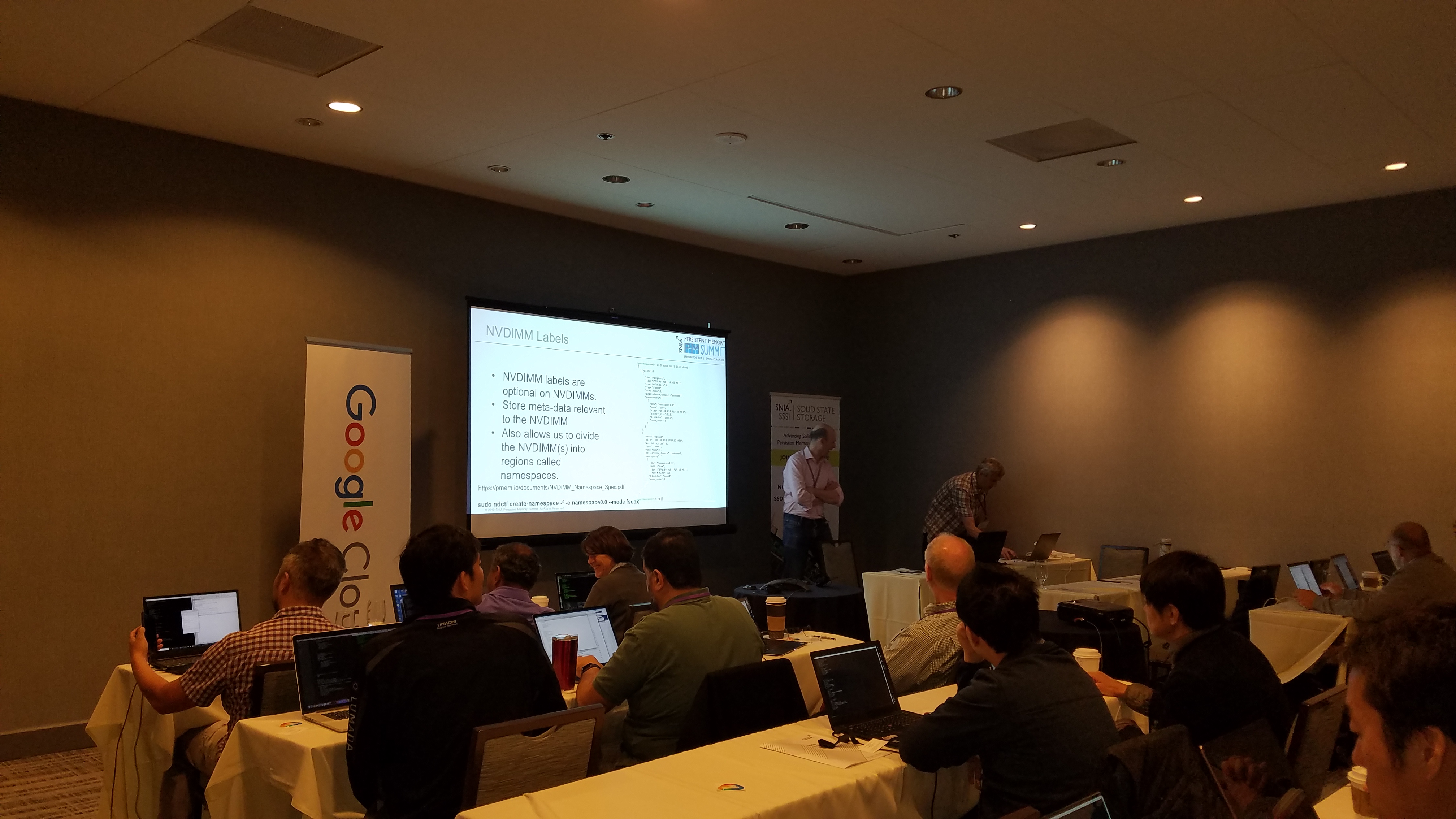

It's now less than three weeks until the next SNIA Persistent Memory Hackathon and Workshop. Our next workshop will be held in conjunction with the 10th Annual Non-Volatile Memory Workshop (http://nvmw.ucsd.edu/) at the University of California, San Diego on Sunday, March 10th from 2:00pm to 5:30pm.


The Hackathon at NVMW19 provides software developers with an understanding of the different tiers and modes of persistent memory, and gives an overview of the standard software libraries that are available to access persistent memory. Attendees will have access to a system configured with persistent memory, software libraries, and sample source code. A variety of mentors will be available to provide tutorials and guide participants in the development of code. Learn more here.
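Persistent memory programming commonly follows a map, store, flush pattern: map a file into the address space, update it with ordinary stores, then flush so the update is durable. The sketch below shows that pattern with Python's standard `mmap` module on an ordinary file; it is an analogy, not the hackathon's material. The function name `store_and_flush` is hypothetical, and real persistent memory code would typically use PMDK's C libraries instead.

```python
import mmap
import os

def store_and_flush(path: str, offset: int, payload: bytes) -> None:
    """Write `payload` at `offset` through a memory mapping, then flush it.

    Here mmap.flush() asks the OS to write dirty pages back (msync on
    POSIX). On a DAX-mapped persistent memory file, PMDK's libpmem
    (for example pmem_persist) flushes CPU caches instead, and higher-level
    PMDK libraries add transactions; this sketch does neither.
    """
    end = offset + len(payload)
    with open(path, "r+b") as f:
        if os.fstat(f.fileno()).st_size < end:
            f.truncate(end)  # grow the file so the mapping covers the write
        with mmap.mmap(f.fileno(), end) as mm:
            mm[offset:end] = payload  # an ordinary store into mapped memory
            mm.flush()                # write the dirty pages to the backing file
```

The point of the pattern is that the application updates data in place with normal memory writes; durability comes from the explicit flush, not from a read/write system call per update.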

In the last workshop, feedback from attendees pointed to a desire to work longer on code after the tutorial ended. We will ensure that all Hackathon attendees have access to their environment for the length of the conference, so any participant in the Sunday session will be able to continue working until the conference ends on Tuesday afternoon. While there won't be an opportunity for formal follow-up, we're planning an informal meet-up on the final day of the conference. Stay tuned for details.


For those not familiar with NVMW, the program is replete with the latest in non-volatile memory research, which enables attendees to understand the practical advances in software development for persistence. The workshop facilitates the exchange of ideas and advances collaboration and knowledge of the use of persistent memory. Registration for the conference is affordable, and grants are available for university student attendees.


For those not able to get to San Diego in March, enjoy the weather that obviously won't be anywhere near as nice where you live. Oh, sorry. For those not able to get to San Diego in March, SNIA is working on the next opportunities for a formal hackathon. But we can't do it alone. If you have a group of programmers interested in learning persistent memory development, SNIA would consider coming to you through its Host a Hackathon program. We can provide, or even train, mentors to get you started, and show you how to build your own cloud-based development environment. You'll get an introduction to coding, and you'll be left with some great examples to build your own applications. Contact SNIA at PMhackathon@snia.org for more details and visit our PM Programming Hackathon webpage for the latest updates.


What Are the Networking Requirements for HCI?

Feb 20, 2019

Hyperconverged infrastructures (also known as “HCI”) are designed to be easy to set up and manage. All you need to do is add networking. In practice, the “add networking” part has been more difficult than most anticipated. That’s why the SNIA Networking Storage Forum (NSF) hosted a live webcast “The Networking Requirements for Hyperconverged Infrastructure” where we covered what HCI is, storage characteristics of HCI, and important networking considerations. If you missed it, it’s available on-demand.

We had some interesting questions during the live webcast, and, as promised during the presentation, here are answers from our expert presenters:

Q. Should an HCI configuration consist of 3 or more nodes, or have I misunderstood this? On an earlier slide I saw HCI with 1 and 2 nodes.

A. You are correct that HCI typically requires 3 or more nodes with resources pooled together to ensure data is distributed through the cluster in a durable fashion. Some vendors have released two-node versions appropriate for edge locations or SMBs, but these revert to a more traditional failover approach between the two nodes rather than a true HCI configuration.
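One general reason three nodes is a common floor: many distributed systems stay available only while a strict majority of nodes can communicate. The sketch below computes that for small clusters, assuming a simple majority-quorum model; individual HCI products may use other schemes, so treat it as an illustration rather than any vendor's rule.

```python
def majority_quorum(nodes: int) -> int:
    """Smallest number of nodes that forms a strict majority."""
    return nodes // 2 + 1

def tolerated_failures(nodes: int) -> int:
    """Node failures a majority-quorum cluster can survive and stay available."""
    return nodes - majority_quorum(nodes)

for n in range(1, 6):
    print(f"{n} node(s): quorum {majority_quorum(n)}, tolerates {tolerated_failures(n)} failure(s)")
```

Under this model a two-node cluster tolerates no failures, the same as a single node, which is consistent with two-node designs falling back to a different, failover-style approach.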

Q. Does NVMe-oF mean running NVMe over Fibre Channel, or something else?

A. The "F" in "NVMe-oF" stands for "Fabrics". As of this writing, there are 3 different "official" fabric transports explicitly outlined in the specification: RDMA-based (InfiniBand, RoCE, iWARP), TCP, and Fibre Channel. HCI, however, is a topology that is almost exclusively Ethernet-based, so Fibre Channel is a less likely storage networking transport for the solution.


The spec for NVMe-oF using TCP was recently ratified, and may gain traction quickly given the broad deployment of TCP and IT's comfort level with the technology. You can learn more about NVMe-oF in the webinar "Under the Hood with NVMe over Fabrics" and about NVMe/TCP in the NSF webcast "What NVMe/TCP Means to Networked Storage."
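On Linux hosts, the nvme-cli tool's `connect` command takes the fabric transport as an option, which makes the "one protocol, several transports" idea visible. The sketch below only builds the argument list (it does not run anything); the target address (a documentation-range IP) and NQN are hypothetical, 4420 is the port commonly used for NVMe-oF over RDMA and TCP, and you should check your nvme-cli version's manual before relying on these options.

```python
import shlex
from typing import List, Optional

# The three fabric transports named in the NVMe-oF specification, spelled
# the way nvme-cli's --transport option expects them.
FABRIC_TRANSPORTS = {"rdma", "tcp", "fc"}

def nvme_connect_argv(transport: str, traddr: str, nqn: str,
                      trsvcid: Optional[str] = None) -> List[str]:
    """Build an `nvme connect` command line for an NVMe-oF target.

    For rdma and tcp, the service id (port) defaults to 4420; it is not
    added for fc, whose addressing does not use a port number.
    """
    if transport not in FABRIC_TRANSPORTS:
        raise ValueError(f"unsupported fabric transport: {transport!r}")
    argv = ["nvme", "connect", f"--transport={transport}",
            f"--traddr={traddr}", f"--nqn={nqn}"]
    if transport in ("rdma", "tcp"):
        argv.append(f"--trsvcid={trsvcid or '4420'}")
    return argv

cmd = nvme_connect_argv("tcp", "192.0.2.10", "nqn.2019-02.com.example:subsys1")
print(shlex.join(cmd))
```

Switching the same host from RDMA to TCP changes only the transport and address arguments; the NVMe command set on top stays the same.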

Q. In the past we have seen vendors leverage RDMA within the host but not take it to the fabric, i.e., RDMA yes, RDMA over the fabric maybe not. Within HCI, do you see fabrics being required to be RDMA-aware, and if so, who do you think will ultimately decide this: the HCI vendor, the applications vendor, the customer, or someone else?

A. The premise of HCI systems is that there is an entire ecosystem "under one roof," so to speak. Vendors with HCI solutions on the market have their choice of the networking protocols that best work with their levels of virtualization and abstraction.

To that end, RDMA-capable fabrics may become more common as workload demands on the network increase and IT looks for ways to optimize traffic. Hyperconverged infrastructure, with lots of east-west traffic between nodes, can take advantage of RDMA and NVMe-oF to improve performance and alleviate certain bottlenecks in the solution. It is, however, only one piece of the overall picture. For an end-to-end solution, the HCI software needs to know how to take advantage of these fabrics, as do the switches and other components, and in some cases other transports may be more appropriate.

Q. What is a metadata network? I had never heard that term before.

A. Metadata is the data about the data. That is, HCI systems need to know where the data is located, when it was written, and how to access it. That information about the data is called metadata.

As systems grow over time, the amount of metadata that exists in the system grows as well. In fact, it is not uncommon for the metadata quantity and traffic to exceed the data traffic. For that reason, some vendors recommend establishing a completely separate network for handling the metadata traffic that traverses the system.
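A hypothetical illustration of what "data about the data" looks like: a per-extent record of where data lives and when it was written, plus an index that every read consults and every write updates. The record layout and class names are assumptions for illustration, not any HCI product's format. One plausible reading of why metadata traffic can rival data traffic: each operation carries a metadata lookup or update of roughly fixed size, so with many small I/Os the metadata share grows, and in a distributed system those lookups and updates often cross the network.

```python
import time
from dataclasses import dataclass

@dataclass(frozen=True)
class ExtentMetadata:
    """Hypothetical record: where a piece of data lives and when it was written."""
    volume: str
    offset: int        # byte offset within the volume
    length: int        # bytes
    replicas: tuple    # names of the nodes holding a copy
    written_at: float  # seconds since the epoch

class MetadataIndex:
    """Toy in-memory index; a real system would distribute and replicate it."""

    def __init__(self):
        self._extents = {}
        self.updates = 0  # metadata writes, one per data write
        self.lookups = 0  # metadata reads, one per data read

    def record_write(self, volume, offset, length, replicas):
        self.updates += 1
        self._extents[(volume, offset)] = ExtentMetadata(
            volume, offset, length, tuple(replicas), time.time())

    def locate(self, volume, offset):
        """Find which nodes hold the extent before the data itself is read."""
        self.lookups += 1
        return self._extents[(volume, offset)]
```

Separating this traffic onto its own network, as some vendors recommend, keeps these frequent small exchanges from competing with bulk data transfers.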



Persistently Fun Once Again – SNIA’s 7th Persistent Memory Summit is a Wrap!

Jan 28, 2019

Leave it to Rob Peglar, SNIA Board Member and the MC of SNIA's 7th annual Persistent Memory Summit, to capture the Summit day as persistently fun, with a metric boatload of great presentations and speakers! And indeed it was a great day, with fourteen sessions presented by 23 speakers covering the breadth of where PM is in 2019: real world, application-focused, and supported by multiple operating systems. Find a great recap on the Forbes blog by Tom Coughlin of Coughlin Associates.


Attendees enjoyed live demos of Persistent Memory technologies from AgigA Tech, Intel, SMART Modular, the SNIA Solid State Storage Initiative, and Xilinx. Learn more about what they presented here.


And for the first time as part of the Persistent Memory Summit, SNIA hosted a Persistent Memory Programming Hackathon sponsored by Google Cloud, where SNIA PM experts mentored software developers through live coding to understand the various tiers and modes of PM and the existing methods available to access them. Upcoming SNIA SSSI on Solid State Storage blogs will give details and insights into "PM Hacking". Also sign up for the SNIAMatters monthly newsletter to learn more, and stay tuned for upcoming Hackathons; the next one is March 10-11 in San Diego.

Missed out on the live sessions? Not to worry: each session was videotaped and can be found on the SNIA YouTube Channel. Download the slides for each session on the PM Summit agenda at www.snia.org/pm-summit. Thanks to our presenters from Advanced Computation and Storage, Arm, Avalanche Technology, Calypso Systems, Coughlin Associates, Dell, Everspin Technologies, In-Cog Solutions, Intel, Mellanox Technologies, MemVerge, Microsoft, Objective Analysis, Sony Semiconductor Solutions Corporation, Tencent Cloud, Western Digital, and Xilinx. And thanks also to our great audience and their questions; your enthusiasm and support will keep us persistently having even more fun!


Experts Answer Virtualization and Storage Networking Questions

Jan 25, 2019

This is a 10:1 oversubscription ratio; the "fan-in" part refers to the amount of host bandwidth in comparison to the target bandwidth.

Block storage protocols like Fibre Channel, FCoE, and iSCSI have much lower fan-in ratios than file storage protocols such as NFS, and deterministic storage protocols (like FC) have lower ratios than non-deterministic ones (like iSCSI). Ultimately, the appropriate fan-in ratio is determined by the application. Highly transactional applications, such as databases, often require very low ratios.
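The fan-in (oversubscription) ratio is simply aggregate host bandwidth divided by aggregate target bandwidth. The sketch below computes it as an exact fraction. The example topology (ten hosts with one 10 Gb/s link each sharing a single 10 Gb/s target port) is an assumption chosen to reproduce a 10:1 ratio; the original example that preceded the answer is not available here.

```python
from fractions import Fraction

def fan_in_ratio(host_links_gbps, target_links_gbps):
    """Aggregate host bandwidth divided by aggregate target bandwidth.

    Pass integer link speeds (Gb/s) to keep the fraction readable.
    """
    total_host = sum(host_links_gbps)
    total_target = sum(target_links_gbps)
    if total_target <= 0:
        raise ValueError("target bandwidth must be positive")
    return Fraction(total_host, total_target)

# Hypothetical topology: ten hosts at 10 Gb/s each, one 10 Gb/s target port.
ratio = fan_in_ratio([10] * 10, [10])
print(f"{ratio.numerator}:{ratio.denominator}")
```

Adding target ports or reducing the hosts per target lowers the ratio, which is the direction highly transactional workloads usually need.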

Q. If there's an MTU mismatch between server and switch, will the higher MTU of the two get negotiated, or will the mismatch persist?

A. No, the lowest value will be used, but there's a caveat to this. The switches and the network in the path(s) can have their MTU set higher than the hosts, but the hosts cannot have a higher MTU than the network. For example, if your hosts are set to 1500 and all the network switches in the path are set to 9k, then the hosts will communicate at 1500.

However, what can, and usually does, happen is someone sets the host(s) or target(s) to 9k but never changes the rest of the network. When this happens, you end up with unreliable or even loss of connectivity. Take a look at the graphic below:


A large ball can't fit through a hole smaller than itself. Likewise, a 9k frame cannot pass through a port with a 1500 MTU. Unless you and your network admin both understand and use jumbo frames, there's no reason to implement them in your environment.
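The "ball through a hole" rule can be checked mechanically: find the frame size the endpoints will actually use, then look for any switch hop with a smaller MTU. This is a minimal sketch under two simplifying assumptions: the endpoints settle on the lower of their two MTUs (as described above), and switches drop, rather than fragment, frames larger than their MTU. The helper names are hypothetical. It also computes the ICMP payload size for a don't-fragment ping test over IPv4 (MTU minus a 20-byte IP header without options and an 8-byte ICMP header).

```python
IPV4_HEADER = 20  # bytes, assuming no IP options
ICMP_HEADER = 8   # bytes


def endpoint_mtu(host_mtu, target_mtu):
    """Frame size the two endpoints end up using: the lower of the two MTUs."""
    return min(host_mtu, target_mtu)


def undersized_hops(host_mtu, target_mtu, switch_mtus):
    """Indexes of switch hops that would drop frames the endpoints send."""
    size = endpoint_mtu(host_mtu, target_mtu)
    return [i for i, mtu in enumerate(switch_mtus) if mtu < size]


def df_ping_payload(mtu):
    """ICMP payload size that fills an IPv4 packet of exactly `mtu` bytes."""
    return mtu - IPV4_HEADER - ICMP_HEADER


# Hosts at 1500, switches at 9k: works, traffic uses 1500-byte frames.
print(undersized_hops(1500, 1500, [9000, 9000]))  # []
# Host and target at 9k, one switch left at 1500: jumbo frames are dropped there.
print(undersized_hops(9000, 9000, [9000, 1500, 9000]))  # [1]
print(df_ping_payload(9000))  # 8972
```

An empty list means every hop can carry the frames the endpoints send; any index returned is a port that will silently drop them.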

Q. Can you implement port binding when using two NICs for all traffic, including iSCSI?

A. Yes, you can use two NICs for all traffic, including iSCSI; many organizations use this configuration. The key is making sure you have enough bandwidth to support all the traffic/IO that will use those NICs. At the very least, you should use 10Gb NICs, and faster ones if possible.
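"Enough bandwidth" is worth checking for the failure case too, because losing one of two NICs halves the capacity available to all traffic. The sketch below is a rough budget check; the per-traffic-type peak demands are hypothetical placeholders, not measurements.

```python
def team_headroom_gbps(nic_speeds_gbps, demands_gbps, survive_one_failure=True):
    """Spare bandwidth left on a NIC team after all traffic types are placed.

    With survive_one_failure=True, the fastest NIC is treated as failed, so a
    negative result means the remaining NIC cannot carry everything alone.
    """
    capacity = sum(nic_speeds_gbps)
    if survive_one_failure and nic_speeds_gbps:
        capacity -= max(nic_speeds_gbps)
    return capacity - sum(demands_gbps.values())


# Hypothetical peak demands (Gb/s) for the three traffic types sharing the NICs.
demands = {"management": 1, "vm": 4, "storage": 6}
nics = [10, 10]
print(team_headroom_gbps(nics, demands, survive_one_failure=False))  # 9
print(team_headroom_gbps(nics, demands))  # -1
```

In this made-up example, two 10Gb NICs are comfortable while both are up, but a single surviving NIC is 1 Gb/s short, which is exactly the situation where faster NICs or traffic controls matter.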

Remember, all your management, VM, and storage traffic now share the same network devices. If you don't plan accordingly, everything in your virtual environment can be impacted. Some hypervisors provide granular network controls to manage which type of traffic uses which NIC, configure certain failover details, and set QoS limits on the different traffic types. With those controls, you can ensure storage traffic gets the required bandwidth or priority in a dual-NIC configuration.
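One common way such controls behave under contention is a share-based split: each traffic type gets bandwidth in proportion to its shares, capped at what it actually needs, with leftovers handed to the others. The sketch below implements that idea as a generic weighted max-min fair allocation; it is not the algorithm of any particular hypervisor, and the traffic names, shares, and demands are hypothetical.

```python
def allocate_by_shares(capacity_gbps, demands_gbps, shares):
    """Weighted max-min fair split of one link among traffic types.

    Each type is offered bandwidth in proportion to its shares, never gets
    more than it asked for, and any unused portion is re-offered to the rest.
    """
    allocation = {name: 0.0 for name in demands_gbps}
    active = {name for name, demand in demands_gbps.items() if demand > 0}
    if any(shares[name] <= 0 for name in active):
        raise ValueError("every traffic type with demand needs positive shares")
    remaining = float(capacity_gbps)
    while active and remaining > 0:
        total_shares = sum(shares[name] for name in active)
        offers = {name: remaining * shares[name] / total_shares for name in active}
        # Types whose outstanding demand fits inside their offer are satisfied.
        satisfied = {
            name for name in active
            if demands_gbps[name] - allocation[name] <= offers[name]
        }
        if not satisfied:
            # Everyone is constrained: split what is left strictly by shares.
            for name in active:
                allocation[name] += offers[name]
            break
        for name in satisfied:
            need = demands_gbps[name] - allocation[name]
            allocation[name] += need
            remaining -= need
        active -= satisfied
    return allocation


# Hypothetical: one 10Gb NIC left after a failure, with shares favoring storage.
result = allocate_by_shares(
    10,
    {"management": 1, "vm": 4, "storage": 6},
    {"management": 1, "vm": 2, "storage": 5},
)
print(result)  # {'management': 1.0, 'vm': 3.0, 'storage': 6.0}
```

Here storage keeps its full 6 Gb/s and VM traffic absorbs the shortfall, which is the kind of outcome the paragraph above describes.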

Q. I've seen HBA drivers that by default set their queue depth to 128, but the target port only handles 512. So two HBAs would saturate one target port, which is undesirable. Why don't the HBA drivers ask what the depth should be at installation?

A. There are a couple of possible reasons for this. One is that many people do not know what the setting even means and are likely to make a poor decision (higher is better, right?!). So vendors tend to ship defaults and let people change them if needed, and those who do change them usually have a specific reason to. Furthermore, every storage array handles queue depth differently, which makes it more difficult to size these settings up front. It is usually better to provide consistency: having things set uniformly makes them easier to support and gives more consistent expectations, even across storage platforms.

Second, many environments are large, which means people usually are not clicking and typing through installations. Things are templatized, sysprepped, or otherwise automated. During or after deployment, automation tools can configure settings uniformly according to the environment's needs.

In short, it is like most things: provide defaults that keep one-off installations simple (and reduce the risk from people who may not know exactly what they are doing), let installations complete without researching a ton of settings that may not ultimately matter, and still give experienced/advanced users, or automaters, ways to make changes.
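The "defaults plus overrides" approach, and the sizing question behind it, can be sketched together. This assumes the driver's queue depth is a per-LUN limit, which is common but driver-specific, and the setting name and override value are hypothetical. Note that under this assumption two HBAs at 128 reach the 512 limit from the question only if each drives two LUNs; with one LUN each they top out at 256.

```python
# Hypothetical vendor default; real drivers expose their own setting names.
DEFAULT_HBA_SETTINGS = {"queue_depth": 128}


def effective_settings(overrides=None):
    """Shipped defaults, with only the values an admin (or automation) changed."""
    settings = dict(DEFAULT_HBA_SETTINGS)
    settings.update(overrides or {})
    return settings


def worst_case_outstanding(initiators):
    """Commands that could be in flight at one target port at the same time.

    `initiators` is a list of (settings, luns_in_use) pairs. This assumes the
    queue depth is a per-LUN limit, which is common but driver-specific.
    """
    return sum(settings["queue_depth"] * luns for settings, luns in initiators)


def fills_target_queue(initiators, target_port_limit):
    return worst_case_outstanding(initiators) >= target_port_limit


TARGET_PORT_LIMIT = 512  # the target port figure from the question

defaults = effective_settings()
tuned = effective_settings({"queue_depth": 64})  # e.g. pushed by automation

print(worst_case_outstanding([(defaults, 1), (defaults, 1)]))  # 256
print(fills_target_queue([(defaults, 2), (defaults, 2)], TARGET_PORT_LIMIT))  # True
print(fills_target_queue([(tuned, 2), (tuned, 2)], TARGET_PORT_LIMIT))  # False
```

The defaults stay untouched for one-off installs, while automation applies a single override uniformly across every host.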

Q. A number of white papers show the storage uplinks on different subnets. Is there a reason to have each link on its own subnet/VLAN, or can they share a common segment?

A. One reason is to reduce the number of logical paths. Especially in iSCSI, the number of paths can easily exceed supported limits if every port can talk to every target. Using multiple subnets or VLANs can cut this in half, and all you really lose is logical redundancy, which doesn't really matter. Also, if everything is in the same subnet or VLAN and someone makes some kind of catastrophic change to that subnet or VLAN (or some device in it causes other issues), that one change can bring all storage connectivity down. With separate subnets/VLANs, such a problem is less likely to affect both, so a single change is unlikely to take out all storage connectivity. This gives some management "oops" protection.
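The "drop this in half" effect is easy to count. The sketch below assumes every initiator port logs in to every target port it can reach, so each reachable initiator/target pair is one logical path per volume; the port names, subnet labels, and volume count are hypothetical.

```python
from itertools import product


def paths_per_volume(initiator_subnets, target_subnets):
    """Count initiator/target port pairs that sit on the same subnet or VLAN.

    Assumes every initiator port logs in to every target port it can reach,
    so each reachable pair is one logical path to each volume.
    """
    return sum(
        1
        for i_net, t_net in product(initiator_subnets.values(), target_subnets.values())
        if i_net == t_net
    )


# Hypothetical host with two NICs and an array with four target ports.
flat = paths_per_volume(
    {"nic1": "lan", "nic2": "lan"},
    {"t1": "lan", "t2": "lan", "t3": "lan", "t4": "lan"},
)
split = paths_per_volume(
    {"nic1": "net-a", "nic2": "net-b"},
    {"t1": "net-a", "t2": "net-b", "t3": "net-a", "t4": "net-b"},
)
volumes = 100  # hypothetical volume count presented to the host
print(flat, split)  # 8 4
print(flat * volumes, split * volumes)  # 800 400
```

Multiplying by the number of volumes is what pushes a flat design toward the platform's supported path limit; check your own hypervisor's documented maximum rather than relying on these made-up figures.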

The Ins and Outs of a Scale-Out File System Architecture

Jan 18, 2019

- General principles when architecting a scale-out file system storage solution

- Hardware and software design considerations for different workloads

- Storage challenges when serving a large number of compute nodes, e.g., namespace consistency, distributed locking, data replication, etc.

- Use cases for scale-out file systems

- Common benchmark and performance analysis approaches