By George Ericson, Distinguished Engineer, Dell EMC; Member, SNIA Scalable Storage Management Technical Working Group, @GEricson

Introduction

This blog is part one of a three-part series recently published on “The Data Cortex”, which represents the thoughts and opinions of members of the CTO Team of Dell EMC’s Data Protection Division. The author, George Ericson, has been actively participating in the SNIA Scalable Storage Management Technical Working Group, which has been developing the SNIA Swordfish™ storage management specification. SNIA Swordfish is an extension to the Distributed Management Task Force’s (DMTF’s) open industry Redfish® standard, and the combination offers a unified approach to managing storage and servers in environments like hyperscale and cloud infrastructures. This makes a single portal convenient for obtaining feedback on either specification. SNIA’s Storage Management Initiative (SMI) has set up swordfishforum.com as an easy link that goes to the Redfish Forum site. Please visit often and share your thoughts.

Overview

There is a very real opportunity to take a giant step towards universal and interoperable management interfaces that are defined in terms of what your clients want to achieve. In the process, the industry can evolve away from the current complex, proprietary, and product-specific interfaces.

You’ve heard this promise before, but it’s never come to pass. What’s different this time? Major players are converging storage and servers. Functionality is commoditizing. Customers are demanding it more than ever.

Three industry-led open standards efforts have come together to collectively provide an easy-to-use and comprehensive API for managing all of the elements in your computing ecosystem, ranging from simple laptops to geographically distributed data centers.

This API is specified by:

- the Open Data Protocol (OData) from OASIS

- the Redfish Scalable Platforms Management API from the DMTF

- the Swordfish Scalable Storage Management API from the SNIA

OData itself is specified in several parts:

- OData v4.0 Protocol: Specifies a RESTful use of HTTP operations on resources defined by an entity data model.

- OData v4.0 URL Conventions: Specifies URL conventions for addressing resources and specifies a small set of query options.

- OData v4.0 Common Schema Definition Language (CSDL): Specifies a language for defining an entity data model.

- OData v4.0 JSON Format: Specifies representations of OData requests and responses using the JavaScript Object Notation (JSON).
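To make the OData pieces a little more concrete, here is a minimal Python sketch of how a client might combine them: a resource URL with query options, and a JSON response whose members are addressed by `@odata.id` links. Treat it as an illustration under stated assumptions rather than anything taken from the specifications. The host name `bmc.example.com`, the helper names `build_url` and `member_paths`, and the sample payload are all hypothetical, and whether a particular service honors `$top` and `$skip` is also an assumption. Nothing here touches the network.

```python
import json
from urllib.parse import urlencode, urljoin

# Hypothetical host, used only for illustration.
BASE = "https://bmc.example.com"


def build_url(resource_path, **query_options):
    """Join a resource path to BASE and append OData-style query options."""
    url = urljoin(BASE, resource_path)
    if query_options:
        # OData system query options carry a "$" prefix (for example $top, $skip).
        params = {f"${name}": value for name, value in query_options.items()}
        # Keep "$" unescaped so the options stay readable in the URL.
        url += "?" + urlencode(params, safe="$")
    return url


# Hypothetical response body for a collection resource, in OData JSON style.
SAMPLE_COLLECTION = """
{
  "@odata.id": "/redfish/v1/Systems",
  "Members@odata.count": 2,
  "Members": [
    {"@odata.id": "/redfish/v1/Systems/1"},
    {"@odata.id": "/redfish/v1/Systems/2"}
  ]
}
"""


def member_paths(body):
    """Return the @odata.id link of each member in a collection response."""
    doc = json.loads(body)
    return [member["@odata.id"] for member in doc.get("Members", [])]


if __name__ == "__main__":
    print(build_url("/redfish/v1/Systems", top=2, skip=4))
    for path in member_paths(SAMPLE_COLLECTION):
        print(build_url(path))
```

A real client would send these URLs with ordinary HTTP GET requests and follow the returned links, which is the RESTful pattern the OData Protocol part describes.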

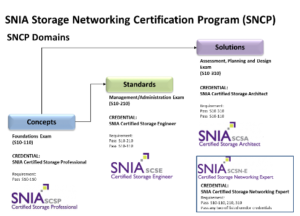
