Storage Expert Takes on Hyperconverged Questions

Apr 17, 2017

Managing Your Computing Ecosystem

Apr 12, 2017

By George Ericson, Distinguished Engineer, Dell EMC; Member, SNIA Scalable Storage Management Technical Working Group, @GEricson

Introduction

This blog is part one of a three-part series recently published on “The Data Cortex”, which represents the thoughts and opinions of members of the CTO Team of Dell EMC’s Data Protection Division. The author, George Ericson, has been actively participating in the SNIA Scalable Storage Management Technical Working Group, which has been developing the SNIA Swordfish™ storage management specification.

SNIA Swordfish is an extension to the Distributed Management Task Force’s (DMTF’s) open industry Redfish® standard, and the combination offers a unified approach to managing storage and servers in environments like hyperscale and cloud infrastructures. This makes having a single portal convenient for obtaining feedback on either specification. SNIA’s Storage Management Initiative (SMI) has set up swordfishforum.com as an easy link that goes to the Redfish Forum site. Please visit often and share your thoughts.

Overview

There is a very real opportunity to take a giant step towards universal and interoperable management interfaces that are defined in terms of what your clients want to achieve. In the process, the industry can evolve away from the current complex, proprietary, and product-specific interfaces.

You’ve heard this promise before, but it’s never come to pass. What’s different this time? Major players are converging storage and servers. Functionality is commoditizing. Customers are demanding it more than ever.

Three industry-led open standards efforts have come together to collectively provide an easy-to-use and comprehensive API for managing all of the elements in your computing ecosystem, ranging from simple laptops to geographically distributed data centers.

This API is specified by:

- the Open Data Protocol (OData) from OASIS

- the Redfish Scalable Platforms Management API from the DMTF

- the Swordfish Scalable Storage Management API from the SNIA

A management service that conforms to the Redfish or Swordfish specifications provides a comprehensive interface for discovering the managed physical infrastructure, as well as for provisioning, monitoring, and managing the environmental, compute, networking, and storage resources provided by that infrastructure. Such a management service is an OData-conformant data service.

These specifications are evolving and certainly are not complete in all aspects. Nevertheless, they are already sufficient to provide comprehensive management of most features of products in the computing ecosystem.

This post and the following two will provide a short overview of each.

OData

The first effort is the definition of the Open Data Protocol (OData). OData v4 specifications are OASIS standards that have also begun the international standardization process with ISO.

Simply asserting that a data service has a RESTful API does nothing to assure that it is interoperable with any other data service. More importantly, REST by itself makes no guarantee that a client of one RESTful data service will be able to discover, or even know how to navigate, the RESTful API presented by some other data service.

OData enables interoperable use of RESTful data services. Such services allow resources, identified using Uniform Resource Locators (URLs) and defined in an Entity Data Model (EDM), to be published and edited by Web clients using simple HTTP messages. In addition to Redfish and Swordfish described below, a growing number of applications support OData data services, for example Microsoft Azure, SAP NetWeaver, IBM WebSphere, and Salesforce.

The OData Common Schema Definition Language (CSDL) specifies a standard metamodel used to define an Entity Data Model over which an OData service acts. The metamodel defined by CSDL is consistent with common elements of the UML v2.5 metamodel. This enables reliable translation to the programming language of your choice.

OData standardizes the construction of RESTful APIs. It provides standards for navigation between resources, for request and response payloads, and for operation syntax. It specifies how the entity data model of the accessed data service is discovered, and how the resources defined by that model can be discovered. While it does not standardize the APIs themselves, OData does standardize how payloads are constructed, a set of query options, and many other items that often differ across today's RESTful data services. OData specifications utilize standard HTTP, AtomPub, and JSON. Also, standard URIs are used to address and access resources.
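As a rough illustration of the standardized query options mentioned above, here is a minimal Python sketch that builds a resource URL using the OData system query options `$filter`, `$select`, and `$top`. The service root, the `Volumes` entity set, and the property names are hypothetical and not taken from any particular service.

```python
from urllib.parse import quote

# Hypothetical service root; a real OData v4 service publishes its own.
SERVICE_ROOT = "https://example.com/odata"


def build_query_url(entity_set, filter_expr=None, select=None, top=None):
    """Build a resource URL that uses OData system query options."""
    options = []
    if filter_expr is not None:
        # Percent-encode spaces but keep quotes and parentheses readable.
        options.append("$filter=" + quote(filter_expr, safe="'()"))
    if select:
        options.append("$select=" + ",".join(select))
    if top is not None:
        options.append("$top=" + str(int(top)))
    url = SERVICE_ROOT + "/" + entity_set
    if options:
        url += "?" + "&".join(options)
    return url


# "Volumes", "CapacityBytes", "Id" and "Name" are assumed names for illustration.
url = build_query_url(
    "Volumes",
    filter_expr="CapacityBytes gt 1000",
    select=["Id", "Name"],
    top=5,
)
print(url)
```

Because the option names and the filter grammar come from the OData URL conventions rather than from each individual service, the same helper could in principle be pointed at a different OData service by changing only the service root and the entity names.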

The use of the OData protocol enables a client to access information from a variety of sources including relational databases, servers, storage systems, file systems, content management systems, traditional Web sites, and more.

Ubiquitous use will break down information silos and will enable interoperability between producers and consumers. This will significantly increase the ability to provide new and richer functionality on top of the OData services.

The OData specifications define:

- OData v4.0 Protocol: Specifies a RESTful use of HTTP operations on resources defined by an entity data model.

- OData v4.0 URL Conventions: Specifies URL conventions for addressing resources and specifies a small set of query options.

- OData v4.0 Common Schema Definition Language (CSDL): Specifies the language used to define an entity data model.

- OData v4.0 JSON Format: Specifies representations for OData requests and responses using the JavaScript Object Notation (JSON).
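To make the JSON Format item a little more concrete, the following Python sketch reads a collection response and pulls out each member's link along with the link to the next page. The payload itself is invented for illustration (the `Volumes` entities, names, and URLs are assumptions); only the control names `value`, `@odata.context`, `@odata.id`, and `@odata.nextLink` come from the OData v4.0 JSON format.

```python
import json

# Hypothetical response body for a collection request. The entities and URLs
# are made up; the "@odata.*" names and "value" follow the OData JSON format.
SAMPLE = """
{
  "@odata.context": "https://example.com/odata/$metadata#Volumes",
  "value": [
    {"@odata.id": "https://example.com/odata/Volumes('1')", "Name": "vol-a"},
    {"@odata.id": "https://example.com/odata/Volumes('2')", "Name": "vol-b"}
  ],
  "@odata.nextLink": "https://example.com/odata/Volumes?$skiptoken=2"
}
"""


def summarize_collection(body):
    """Return ([(member link, name), ...], next page link or None)."""
    doc = json.loads(body)
    members = [
        (item.get("@odata.id"), item.get("Name"))
        for item in doc.get("value", [])
    ]
    return members, doc.get("@odata.nextLink")


members, next_link = summarize_collection(SAMPLE)
for link, name in members:
    print(name, link)
print("next page:", next_link)
```

A client written this way needs no service-specific knowledge to walk a collection: it follows the links the payload provides instead of constructing paths on its own.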

Conclusion:

While REST is a useful architectural style, it is not a “standard”, and the variances among RESTful APIs that express similar functions mean that there is no standard way to interact with different systems. OData is laying the groundwork for interoperable management by standardizing the construction of RESTful APIs. Next up: Redfish.

Q&A on All Things iSCSI

Apr 7, 2017

Your Questions Answered on Non-Volatile DIMMs

Apr 3, 2017

by Arthur Sainio, SNIA NVDIMM SIG Co-Chair, SMART Modular

SNIA’s Non-Volatile DIMM (NVDIMM) Special Interest Group (SIG) had a tremendous response to their most recent webcast: NVDIMM: Applications are Here! You can view the webcast on demand.

Viewers had many questions during the webcast. In this blog, the NVDIMM SIG answers those questions and shares the SIG’s knowledge of NVDIMM technology.

Have a question? Send it to nvdimmsigchair@snia.org.

1. What about 3D XPoint? How will this technology impact the market?

3D XPoint DIMMs will likely have a significant impact on the market. They are fast enough to use as a slower tier of memory between NAND and DRAM, though it is still too early to tell.

2. What are good benchmark tools for DAX, and what are the differences between NVML applications and DAX-aware applications?

For benchmark tools, please see the answer to question 11.

NVML applications are written specifically for NVM (Non-Volatile Memory). They may use the open source NVML libraries (http://pmem.io/nvml) to access it.

DAX is a file system feature that avoids the use of page cache buffers. DAX-aware applications know that their reads and writes go directly to the underlying NVM without being cached.
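As a loose illustration of the access pattern a DAX-aware application relies on, here is a small Python sketch that memory-maps a file, writes bytes into the mapping, and flushes it. It is only a sketch under stated assumptions: it runs against an ordinary temporary file, where the page cache is still involved, and Python is used for readability rather than the NVML C libraries. On a file system mounted with DAX, the same kind of mapping is what lets loads and stores reach the persistent memory directly.

```python
import mmap
import os
import tempfile


def store_and_flush(path, payload, size=4096):
    """Map a file into memory, write bytes through the mapping, then flush."""
    with open(path, "r+b") as f:
        f.truncate(size)  # the file must be at least as large as the mapping
        with mmap.mmap(f.fileno(), size) as mapped:
            mapped[0:len(payload)] = payload  # plain memory stores, no write()
            mapped.flush()  # ask the OS to make the mapped range durable
    # Read the bytes back through the regular file interface to confirm.
    with open(path, "rb") as f:
        return f.read(len(payload))


# Demonstration on an ordinary temporary file (an assumption for portability;
# a real persistent-memory setup would use a file on a DAX-mounted file system).
fd, demo_path = tempfile.mkstemp()
os.close(fd)
try:
    result = store_and_flush(demo_path, b"hello pmem")
finally:
    os.remove(demo_path)
print(result)
```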

3. On the slide talking about NUMA, there was a mention of accessing NVDIMMs from a CPU on a different memory bus. The part about larger access times was clear enough. However, I came away with the impression that there is a correctness issue with handling of the ADR signal as well. Please clarify.

If the question is whether the NUMA-remote CPU will successfully flush ADR-protected buffers to memory connected to the NUMA-near CPU, then yes, there is the potential for a problem in this area. However, ADR is an Intel feature that is not specified in the JEDEC NVDIMM standard, so this is an Intel-specific implementation question that needs to be posed to Intel.

4. How common is NVDIMM-compatible BIOS? How would one check?

They are becoming more common all the time. There are at least 8 server/storage systems from Intel and 22 from Supermicro that support NVDIMMs. Several other motherboard vendors have systems that support NVDIMMs. Most NVDIMM vendors post lists of supported systems on their websites.

5. How does a system go into save? What exactly does the BIOS have to do to prepare a system before save is asserted?

Before it arms the NVDIMM, the BIOS checks that the module has a backup power supply available for a power loss. The BIOS also makes sure that any RESTORE of previously saved data completes properly. Both steps involve setting the appropriate registers in the NVDIMM module, and all of this happens during boot-up initialization. On AC power loss, the PCH (Platform Controller Hub) detects the condition and initiates what is called the ADR (Asynchronous DRAM Refresh) sequence, which ends with the CPLD asserting the SAVE signal. Unless the BIOS has armed the NVDIMM module, the module will not respond to the SAVE signal on power loss.
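The arm-then-save ordering described above can be pictured with a toy Python model. This is purely illustrative: the class and method names are invented, and real platforms implement these steps in BIOS, chipset, and CPLD logic through NVDIMM registers rather than in software like this.

```python
class NvdimmModel:
    """Toy model of the arm/save ordering; not a real NVDIMM interface."""

    def __init__(self, has_backup_power):
        self.has_backup_power = has_backup_power
        self.armed = False
        self.saved = False

    def bios_arm(self):
        """Boot-time step: arm the module only if a backup supply is present."""
        self.armed = self.has_backup_power
        return self.armed

    def assert_save(self):
        """Power-loss step: SAVE is honored only by an armed module."""
        if self.armed:
            self.saved = True
        return self.saved


# A module armed during boot responds to SAVE on power loss.
armed_module = NvdimmModel(has_backup_power=True)
armed_module.bios_arm()
print(armed_module.assert_save())

# A module that was never armed ignores SAVE, so its DRAM contents are lost.
unarmed_module = NvdimmModel(has_backup_power=True)
print(unarmed_module.assert_save())
```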

6. Could you paint the picture of hardware costs at this point? How soon will NVDIMM-enabled systems be available to “the rest of us”?

NVDIMMs use DRAM, NAND flash, a controller, and many other parts in addition to those used on standard RDIMMs. On that basis, the cost of an NVDIMM-N is higher than that of a standard RDIMM. NVDIMM-enabled systems have been available for several years and are shipping now.

7. Does RHEL 7.3 easily support Linux Kernel 4.4?

RHEL 7.3 still uses version 3.10 of the Linux kernel. For RHEL-related information, please check with Red Hat.

You can also refer to: https://access.redhat.com/documentation/en-US/Red_Hat_Enterprise_Linux/7/html/7.3_Release_Notes/index.html

The distribution includes drivers that support persistent memory, and it also packages the persistent memory libraries.

8. What are the usual sizes for NVDIMMs available today?

4GB, 8GB, 16GB, 32GB

9. Are there any case studies of each of the NVDIMM-N applications mentioned?

You can find some examples of case studies at these websites: https://channel9.msdn.com/events/build/2016/p466 and https://msdn.com/events/build/2016/p470

10. What is the difference between pmem lib/PMFS in Linux and a DAX-enabled file system (like ext-DAX)?

A DAX-based file system avoids using the kernel page cache layer to cache its write data, so all of its write operations go directly to the underlying storage unit. One important thing to understand is that a DAX file system can still use block drivers to access its underlying storage.

PMFS is a file system optimized for persistent memory that completely avoids both the page cache and the block drivers. It is designed to provide efficient access to persistent memory that is directly accessible via CPU load/store instructions.

Refer to https://github.com/linux-pmfs/pmfs for more details. As of now, PMFS is only in the experimental stages.

11. What tool is used to measure the performance?

Performance measurement depends on the kind of application workload to be characterized. This is a very complex topic, and no single benchmarking tool is good for all workload characteristics.

For file system performance, SpecFS, Bonnie++, IOZone, FFSB, and FileBench are good tools.

SysBench is good for a variety of performance measurements.

Phoronix Test Suite (http://www.phoronix.com/scan.php?page=home) has a variety of tools for Linux based performance measurements.

12. How similar do you expect the OS support for -P to be to this support for -N? I don’t see a lot of need for differences at this level (though there certainly will be differences in the BIOS).

As of now, the open source libraries (http://pmem.io) are designed to be agnostic about the underlying memory type. The memory is simply classified as persistent memory, meaning it could be “-N” or “-P” or something else. The libraries are written for user space, and they assume that any underlying kernel support is transparent.

The “-P” type is envisioned as supporting both DRAM access and persistent access at the same time. This might need a separate set of drivers in the kernel.

13. Does the PM-based file system appear to be block addressable from the Application?

A file system creates a layer of virtualization to support logical entities such as volumes, directories, and files. Typically, an application running in user space has no knowledge of the underlying mechanisms a file system uses to access its storage units, such as persistent memory. The access a file system provides to an application is typically a POSIX file system interface such as open, close, read, write, seek, etc.
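As a small illustration of that interface, the Python sketch below uses the `os` module's thin wrappers over the POSIX calls to open a file, write to it, seek, and read back. The temporary file is an assumption made so the example runs anywhere; nothing in the code would change if the file lived on a persistent-memory-backed file system, which is the point of the answer above.

```python
import os
import tempfile


def posix_roundtrip(path):
    """Open, write, seek, read, and close a file using POSIX-style calls."""
    fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o600)
    try:
        os.write(fd, b"0123456789")
        os.lseek(fd, 4, os.SEEK_SET)  # move to byte offset 4
        return os.read(fd, 3)  # read three bytes starting at that offset
    finally:
        os.close(fd)


# The application sees only byte offsets in a file, never the storage medium.
fd, demo_path = tempfile.mkstemp()
os.close(fd)
try:
    data = posix_roundtrip(demo_path)
finally:
    os.remove(demo_path)
print(data)
```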

14. Is ADR a pin?

ADR stands for Asynchronous DRAM Refresh. ADR is a feature supported on Intel chipsets that triggers a hardware interrupt to the memory controller, which flushes the write-protected data buffers and places the DRAM in self-refresh. This process is critical during a power loss event or system crash to ensure the data is in a “safe” state when the NVDIMM takes control of the DRAM to back it up to flash. Note that ADR does not flush the processor cache. In order to do so, an NMI routine would need to be executed prior to ADR.

Here! You can view the webcast on demand.

Viewers had many questions during the webcast. In this blog, the NVDIMM SIG answers those questions and shares the SIG’s knowledge of NVDIMM technology.

Have a question? Send it to nvdimmsigchair@snia.org.

1. What about 3DXpoint, how will this technology impact the market?

3DXPoint DIMMs will likely have a significant impact on the market. They are fast enough to use as a slower tier of memory between NAND and DRAM. It is still too early to tell though.

2. What are good benchmark tools for DAX and what are the differences between NVML applications and DAX aware applications?

For benchmark tools, please see the answer to question 11.

NVML applications are written specifically for NVM (Non-Volatile Memory). They may use the open source NVML libraries (http://pmem.io/nvml).

DAX is a File System feature that avoids the use of Page Cache buffers. DAX aware applications know that their reads and writes go directly to the underlying NVM without being cached.
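The sketch below shows the memory-mapped access style that DAX aware applications typically rely on. It is a minimal example under stated assumptions: the function name and file path are hypothetical, and on an ordinary file system this mapping is still backed by the Page Cache. The same calls would reach the NVM directly only if the file lived on a File System mounted with DAX enabled.

```python
import mmap
import os
import tempfile


def store_via_mmap(path: str, payload: bytes) -> bytes:
    """Store bytes through a shared memory mapping, then read them back from the file."""
    size = mmap.PAGESIZE                      # payload is assumed to fit in one page
    fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o600)
    try:
        os.ftruncate(fd, size)                # the file must be as large as the mapping
        with mmap.mmap(fd, size) as mm:
            mm[0:len(payload)] = payload      # plain stores into the mapped region
            mm.flush()                        # ask the OS to write the changes back
    finally:
        os.close(fd)
    with open(path, "rb") as f:               # confirm the stores reached the file
        return f.read(len(payload))


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        print(store_via_mmap(os.path.join(tmp, "mapped.dat"), b"hello pmem"))
```

There are no read or write calls on the data path here; the application updates the file with ordinary memory stores.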

3. On the slide talking about NUMA, there was a mention of accessing NVDIMMs from a CPU on a different memory bus. The part about larger access times was clear enough. However, I came away with the impression that there is a correctness issue with the handling of the ADR signal as well. Please clarify.

If this question is asking whether the NUMA remote CPU will successfully flush ADR-protected buffers to memory connected to the NUMA near CPU, then yes, there is the potential for a problem in this area. However, ADR is an Intel feature that is not specified in the JEDEC NVDIMM standard, so this is an Intel specific implementation question and needs to be posed to Intel.

4. How common is NVDIMM compatible BIOS? How would one check?

They are becoming more common all the time. There are at least 8 server/storage systems from Intel and 22 from Supermicro that support NVDIMMs. Several other motherboard vendors have systems that support NVDIMMs. Most NVDIMM vendors post lists of supported systems on their websites.

5. How does a system go into save? What exactly does the BIOS have to do to prepare a system before asserting save?

The BIOS first checks that the NVDIMM has a backup power supply for a power loss, and only then ARMs it. The BIOS also makes sure that any RESTORE of previously saved data is properly done. This involves a set of operations that set the appropriate registers in the NVDIMM module, all of which happens during boot-up initialization. On A/C Power Loss, the PCH (Platform Controller Hub) detects the condition and initiates what is called the ADR (Asynchronous DRAM Refresh) sequence, which ends with the CPLD asserting the SAVE signal. If the BIOS has not ARMed the NVDIMM module, the module will not respond to the SAVE signal on power loss.
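The sequence can be summarized as a small state model. This is a hypothetical sketch for illustration only: the class, its attributes and its method names are invented here and do not correspond to real BIOS or NVDIMM register interfaces.

```python
class NvdimmModel:
    """Toy model of the ARM / SAVE / RESTORE sequence (all names are hypothetical)."""

    def __init__(self, backup_power_ok: bool):
        self.backup_power_ok = backup_power_ok
        self.armed = False
        self.dram = None      # volatile contents
        self.flash = None     # contents saved on the last power loss, if any

    def bios_boot(self) -> None:
        # RESTORE any previously saved data, then ARM only if backup power is present.
        if self.flash is not None:
            self.dram = self.flash
        self.armed = self.backup_power_ok

    def power_loss(self) -> None:
        # The ADR sequence ends with SAVE asserted; only an armed module responds.
        if self.armed:
            self.flash = self.dram
        self.dram = None      # DRAM contents are gone once power is lost
        self.armed = False


def survives_power_cycle(backup_power_ok: bool) -> bool:
    module = NvdimmModel(backup_power_ok)
    module.bios_boot()
    module.dram = b"application data"
    module.power_loss()
    module.bios_boot()
    return module.dram == b"application data"


if __name__ == "__main__":
    print(survives_power_cycle(True), survives_power_cycle(False))
```

The model keeps only the one rule the answer stresses: without arming at boot, the SAVE signal has no effect and the data does not come back.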

6. Could you paint the picture of hardware costs at this point? How soon will NVDIMM-enabled systems become available to “the rest of us”?

NVDIMMs use DRAM, NAND Flash, a controller, and many other parts in addition to those used on standard RDIMMs. On that basis, the cost of an NVDIMM-N is higher than that of a standard RDIMM. NVDIMM-enabled systems have been available for several years and are shipping now.

7. Does RHEL 7.3 easily support Linux Kernel 4.4?

RHEL 7.3 is still using the 3.10 version of the Linux Kernel. For RHEL related information, please check with Red Hat.

You can also refer to: https://access.redhat.com/documentation/en-US/Red_Hat_Enterprise_Linux/7/html/7.3_Release_Notes/index.html

The distribution includes drivers that support persistent memory, and it also packages the persistent memory libraries.

8. What are the usual sizes for NVDIMMs available today?

4GB, 8GB, 16GB, 32GB

9. Are there any case studies of each of the NVDIMM-N applications mentioned?

You can find some examples of case studies at these websites: https://channel9.msdn.com/events/build/2016/p466 and https://msdn.com/events/build/2016/p470

10. What is the difference between pmem lib/pmfs in Linux and a DAX enabled file system (like ext-DAX)?

A DAX based File System avoids using the Kernel Page Cache Layer to cache its write data, so all of its write operations go directly to the underlying storage unit. One important point is that a DAX File System can still use BLOCK DRIVERS to access its underlying storage.

PMFS is a File System that is optimized for Persistent Memory and completely avoids both the Page Cache and the Block Drivers. It is designed to provide efficient access to Persistent Memory that is directly accessible via CPU load/store instructions.

Refer to this link for more details: https://github.com/linux-pmfs/pmfs. As of now, PMFS is only at an experimental stage.

11. What tool is used to measure the performance?

The performance measurement depends on what kind of Application workload is to be characterized. This is a very complex topic. No single benchmarking tool is good for all the workload characteristics.

For File System performance, SpecFS, Bonnie++, IOZone, FFSB, and FileBench, among others, are good tools.

SysBench is good for a variety of performance measurements.

Phoronix Test Suite (http://www.phoronix.com/scan.php?page=home) has a variety of tools for Linux based performance measurements.
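For a quick first look before reaching for the tools above, a few lines of timing code can show how one very narrow workload behaves. The sketch below measures the average latency of small synchronous writes; the function name, block size and count are arbitrary assumptions, and the result says nothing about other access patterns.

```python
import os
import tempfile
import time


def time_synced_writes(path: str, block_size: int = 4096, count: int = 64) -> float:
    """Return the average seconds taken by one write followed by one fsync."""
    buf = b"\0" * block_size
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    try:
        start = time.perf_counter()
        for _ in range(count):
            os.write(fd, buf)    # append one block
            os.fsync(fd)         # force it to stable storage before continuing
        elapsed = time.perf_counter() - start
    finally:
        os.close(fd)
    return elapsed / count


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        latency = time_synced_writes(os.path.join(tmp, "bench.dat"))
        print(f"average write+fsync latency: {latency * 1e6:.1f} microseconds")
```

Running the same function against files on different devices or file systems gives a rough comparison for this one pattern only, which is why the dedicated benchmark suites remain the better choice for characterizing a real workload.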



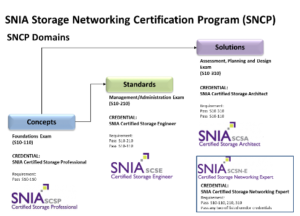

SNIA Ranked #2 for Storage Certifications - and Now You Can Take Exams at 900 Locations Worldwide

Mar 29, 2017

Should you become certified? With heterogeneous data centers as the de facto environment today, IT certification can be of great value especially if it’s in a career area where you are trying to advance, and even more importantly if it is a vendor-neutral certification which complements specific product skills. And with surveys saying IT storage professionals may anticipate six-figure salaries, going for certification seems like a good idea. Don't just take our word for it - CIO Magazine has cited SNIA Certified Storage Networking Expert (SCSN-E) as #2 of their top seven storage certifications - and the way to join an elite group of storage professionals at the top of their games.

SNIA now makes it even easier to take its three exams – Foundations; Management/Administration; and Assessment, Planning, and Design. Exams are now available for on-site test takers globally via a new relationship with Kryterion Testing Network. The Kryterion Testing Network utilizes over 900 Testing Centers in 120 countries to securely proctor exams worldwide for SNIA Certification Exam candidates. If you would like to know more about Kryterion or locate your nearest testing center, please go to www.kryteriononline.com/Locate-Test-Center. For more information about SNIA’s SNCP, visit https://www.snia.org/education/certification.
