Questions on the 2017 Ethernet Roadmap for Networked Storage

Jan 9, 2017

Last month, experts from Dell EMC, Intel, Mellanox and Microsoft convened to take a look ahead at what’s in store for Ethernet Networked Storage this year. It was a fascinating discussion of anticipated updates. If you missed the webcast, “2017 Ethernet Roadmap for Networked Storage,” it’s now available on-demand. We had a lot of great questions during the live event and we ran out of time to address them all, so here are answers from our speakers.

Q. What’s the future of twisted pair cable? What is the new speed being developed with twisted pair cable?

A. By twisted pair I assume you mean USTP CAT5, 6, 7, etc. The problem going forward with high-speed signaling is that the “US” in USTP stands for unshielded, and the signal radiates off the wire very quickly. At 25G and 50G this is a real problem and forces the line card end to have a big, power-consuming and costly chip to dig the signal out of the noise. Anything can be done, but at what cost? 25GBASE-T is being developed, but the reach is somewhere around 30 meters. Cost, size and power consumption are all going up and reach is going down, all opposite to the trends in modern high-speed data centers. BASE-T will always have a place for those applications that don’t need the faster rates.

Q. What do you think of RCx standards and cables?

A. So far, Amphenol, JAE and Volex are the suppliers who are members of the MSA. Very few companies have announced or discussed RCx. In addition to a smaller connector, not having an EEPROM eliminates steps in the cable assembly manufacture, which helps lower the cost compared to traditional DAC cabling. The biggest advantage of RCx is that it can help eliminate bulky breakout cables within a rack, since a single RCx4 receptacle can accept a number of combinations of single-lane, 2-lane or 4-lane cable with the same connector on the host. RCx ports can be connected to existing QSFP/SFP infrastructure with appropriate cabling. It remains to be seen, however, whether it becomes a standard and popular product or remains a custom solution.

Q. How long does AOC normally reach, 3m or 30m?

A. AOCs pick up where DAC drops off, at about 3m. The most popular reaches are 3, 5, and 10m, and volume drops off rapidly at the longer reaches of 15, 20, 30, 50, and 100m. We are seeing Ethernet-connected HDDs at 2.5GbE x 2 ports, and Ceph touting this solution. This seems to play well into the 25/50/100GbE standards with the massive parallelism possible.

Q. How do we scale PCIe lanes to support NVMe drives to scale, and to replace the capacity we see with storage arrays populated completely with HDDs?

A. With the advent of PCIe Gen 4, the per-lane rate of PCIe is going from 8 GT/s to 16 GT/s. Scaling of PCIe is already happening.
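To put the per-lane rates above into drive-level terms, here is a rough sketch of the arithmetic. It assumes 128b/130b line encoding (used by both Gen 3 and Gen 4) and a x4 link per NVMe drive, which is a common configuration but an assumption here; protocol overhead above the encoding is ignored, so real throughput will be somewhat lower.

```python
# Rough PCIe bandwidth arithmetic, per direction.
# Assumptions: 128b/130b encoding, x4 link per NVMe drive,
# no allowance for packet/protocol overhead.

ENCODING_EFFICIENCY = 128 / 130


def lane_gbytes_per_s(gigatransfers_per_s):
    """Approximate GB/s carried by one lane at the given GT/s rate."""
    return gigatransfers_per_s * ENCODING_EFFICIENCY / 8


def link_gbytes_per_s(gigatransfers_per_s, lanes):
    """Approximate GB/s carried by a link with the given lane count."""
    return lane_gbytes_per_s(gigatransfers_per_s) * lanes


for generation, rate in (("Gen 3", 8), ("Gen 4", 16)):
    print(f"{generation}: {lane_gbytes_per_s(rate):.2f} GB/s per lane, "
          f"{link_gbytes_per_s(rate, 4):.2f} GB/s for a x4 link")
```

Under these assumptions a x4 link goes from roughly 3.9 GB/s to roughly 7.9 GB/s, so the same number of lanes can feed twice the drive bandwidth.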

Q. How many NVMe drives does it take to saturate 100GbE?

A. 3 or 4 depending on individual drives.
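The estimate can be reproduced with simple arithmetic. The sketch below is illustrative only: the per-drive throughput figures (3.5 and 4.5 GB/s) are hypothetical values chosen to show how the answer moves between 3 and 4, and Ethernet and storage protocol overhead is ignored.

```python
import math

LINK_GBPS = 100  # 100GbE line rate in gigabits per second


def drives_to_saturate(link_gbps, drive_gbytes_per_s):
    """Smallest number of drives whose combined throughput meets the link rate."""
    link_gbytes_per_s = link_gbps / 8  # 100 Gb/s is 12.5 GB/s
    return math.ceil(link_gbytes_per_s / drive_gbytes_per_s)


# Hypothetical sequential-read rates in GB/s; real drives vary.
for drive_rate in (3.5, 4.5):
    count = drives_to_saturate(LINK_GBPS, drive_rate)
    print(f"{drive_rate} GB/s per drive -> {count} drives")
```

With these assumed rates, the slower drive needs 4 units and the faster one needs 3, which matches the range given in the answer.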

Q. How about the reliability of Ethernet? A lot of people think Fibre Channel has better reliability than Ethernet.

A. It’s true that Fibre Channel is a lossless protocol. Ethernet frames are sometimes dropped by the switch; however, network storage using TCP has a built-in error-correction facility. TCP was designed at a time when networks were less robust than today. Ethernet networks these days are far more reliable.

Q. Do the 2.5GbE and 5GbE refer to the client side Ethernet port or the server Ethernet port?

A. It can exist on both the client side and the server side Ethernet port.

Q. Are there any 25GbE or 50GbE NICs available on the market?

A. Yes, there are many that are on the market from a number of vendors, including Dell, Mellanox, Intel, and a number of others.

Q. Commonly used Ethernet speeds are either 10GbE or 40GbE. Do the new 25GbE and 50GbE require new switches?

A. Yes, you need new switches to support 25GbE and 50GbE. This is, in part, because the SerDes rate per lane at 25 and 50GbE is 25Gb/s, which is not supported by the 10 and 40GbE switches with a maximum SerDes rate of 10Gb/s.

Q. With a certain number of SerDes coming off the switch ASIC, which would you prefer to use, 100G or 40G, assuming both are at the same cost?

A. Certainly 100G. You get 2.5X the bandwidth for the same cost under the assumptions made in the question.
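The 2.5X figure, and the reason older switches cannot run the new speeds, both follow from treating a port as a number of SerDes lanes multiplied by a per-lane rate. The sketch below uses the lane layouts described in these answers (4 x 10 Gb/s for 40GbE, 4 x 25 Gb/s for 100GbE, dual-lane 25 Gb/s for 50GbE); the table and function names are made up for illustration.

```python
# Port speed modeled as (number of SerDes lanes, per-lane rate in Gb/s).
PORT_TYPES = {
    "10GbE": (1, 10),
    "40GbE": (4, 10),
    "25GbE": (1, 25),
    "50GbE": (2, 25),   # dual-lane variant from the 25G Ethernet Consortium
    "100GbE": (4, 25),
}


def port_gbps(name):
    """Aggregate port speed in Gb/s."""
    lanes, lane_rate = PORT_TYPES[name]
    return lanes * lane_rate


def supported_by(max_serdes_gbps):
    """Port types whose per-lane rate fits within a switch's SerDes limit."""
    return [name for name, (_, lane_rate) in PORT_TYPES.items()
            if lane_rate <= max_serdes_gbps]


print(port_gbps("100GbE") / port_gbps("40GbE"))  # same four lanes, 2.5x the bandwidth
print(supported_by(10))  # a 10 Gb/s SerDes switch cannot carry the 25 Gb/s lane types
```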

Q. Are there any 100G/200G/400G switches and modulation available now?

A. There are many 100G Ethernet switches available on the market today, including Dell’s Z9100 and S6100, Mellanox’s SN2700, and a number of others. The 200G and 400G IEEE standards are not yet complete. I’m sure all switch vendors will come out with switches supporting those rates in the future.

Q. What does lambda mean?

A. Lambda is the symbol for wavelength.

Q. Is the 50GbE standard ratified now?

A. IEEE 802.3 just recently started development of a 50GbE standard based upon a single-lane 50 Gb/s physical layer interface. That standard is probably about 2 years away from ratification. The 25G Ethernet Consortium has a ratified specification for 50GbE based upon a dual-lane 25 Gb/s physical layer interface.

Q. Are there any parallel options for using 2 or 4 lanes like in 128GFCp?

A. Many Ethernet specifications are based upon parallel options. 10GBASE-T is based upon 4 twisted-pairs of copper cabling. 100GBASE-SR4 is based upon 4 lanes (8 fibers) of multimode fiber. Even the industry MSA for 100G over CWDM4 is based upon four wavelengths on a duplex single-mode fiber. In some instances, the parallel option is based upon the additional medium (extra wires or fibers) but with fiber optics, parallel can be created by using different wavelengths that don’t interfere with each other.

Containers, Docker and Storage – An Expert Q&A

Dec 19, 2016

Containers continue to be a hot topic today as is evidenced by the more than 2,000 people who have already viewed our SNIA Cloud webcasts, “Intro to Containers, Container Storage and Docker“ and “Containers: Best Practices and Data Management Services.” In this blog, our experts, Keith Hudgins of Docker and Andrew Sullivan of NetApp, address questions from our most recent live event.

Q. What is the major challenge for storage in a containerized environment?

A. Containers move fast. Users can spin up and spin down containers extremely quickly. The biggest challenge in production-bound container environments is simply keeping up with the movement of data.

Docker Engine does not delete base container images when the container is shut down. Likewise, Registry assumes you’ve got unlimited storage on hand. For containers that push frequent revisions (as would be the case in a continuous delivery environment), that leads to a lot of orphaned container images that can fill up all available storage if left unchecked.

There are some community-led scripts that will help to keep things in control. That’s the beauty of community-led technology.
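As a rough idea of what such a cleanup script does, the sketch below lists untagged ("dangling") images with the Docker CLI and builds the command to remove them. It is an illustration, not one of the community scripts mentioned above: the function names are made up, it assumes the `docker` CLI is on the PATH, and it defaults to a dry run that only returns the command it would execute.

```python
import subprocess


def dangling_image_ids(run=subprocess.run):
    """Return IDs of untagged images reported by `docker images`."""
    result = run(
        ["docker", "images", "--filter", "dangling=true", "--quiet"],
        capture_output=True, text=True, check=True,
    )
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


def remove_images(image_ids, run=subprocess.run, dry_run=True):
    """Build (and, unless dry_run is set, execute) a `docker rmi` command."""
    if not image_ids:
        return []
    command = ["docker", "rmi", *image_ids]
    if not dry_run:
        run(command, check=True)
    return command
```

Calling `remove_images(dangling_image_ids())` shows what would be deleted; passing `dry_run=False` actually removes the images, so it is worth reviewing the list first. The `run` parameter exists only so the functions can be exercised without a Docker daemon.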

Q. What about the speed of retrieving the data from storage?

A. That’s where being a solid storage architect comes in. Every storage system has different strengths and weaknesses, so it’s important to engineer your solution to fit your performance goals. Docker containers are running on the main kernel of the host system. IO is not constrained by abstraction, as in the case of virtual machines. Rather, it is constrained more by density – hundreds of containers on a host can push massive IOPS, so you want your pipes fat and data sources close to the host systems.

Q. Can you expand on moving Docker Volumes from On-Premise bare metal to Cloud Service Providers? Data Migration? Encryption?

A. None of these capabilities are built into Docker Engine. We rely on external storage systems to provide those features. Private-to-cloud replication is primarily a feature of software-based companies, like Portworx, Blockbridge, or Hedvig. Encryption and migration are both common features across other companies as well. Flocker from ClusterHQ is a service broker system that provides many bolt-on features for storage systems they support. You can also use community-supplied services like Ceph to get you there.

Q. Are you familiar with “Flocker” that apparently is able to copy persistent data to another container? Can you share your thoughts?

A. Yes. ClusterHQ (makers of Flocker) provide an API broker that sits between storage engines and Docker (and other dynamic infrastructure providers, like OpenStack), and they also provide some bolt-on features like replication and encryption.

Q. Is there any sort of feature in the volume plugins that allows a persistent volume to re-connect to a container if the container is moved across multiple hosts?

A. There’s no feature in plugins to cover that specifically. The plugin API is very simple. In practice, what you would do is write your plugin to expose volumes to Docker Engine on every host that it’s possible to mount that volume. In your container specification, whether it’s a Compose file, DAB file, or what have you, specify the name of your volume. Wherever that unique name is encountered, it will be mounted and attached to the container when it’s re-launched.
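The approach described above can be sketched as a toy model: one plugin instance per host, all backed by the same external storage, with volumes looked up purely by name. The endpoint names follow the Docker volume plugin protocol as I understand it (`/VolumeDriver.Create`, `/VolumeDriver.Mount`); the classes, the in-memory backend, and the mount paths are hypothetical, and a real plugin would serve these endpoints over HTTP and perform an actual mount.

```python
class SharedBackend:
    """Stand-in for external shared storage, keyed by volume name."""

    def __init__(self):
        self.volumes = {}  # volume name -> dict acting as the volume contents


class VolumePlugin:
    """One instance per Docker host; every instance uses the same backend."""

    def __init__(self, host, backend):
        self.host = host
        self.backend = backend

    def handle(self, endpoint, request):
        name = request.get("Name", "")
        if endpoint == "/VolumeDriver.Create":
            self.backend.volumes.setdefault(name, {})
            return {"Err": ""}
        if endpoint == "/VolumeDriver.Mount":
            if name not in self.backend.volumes:
                return {"Mountpoint": "", "Err": f"no such volume: {name}"}
            # Hypothetical path; a real plugin would mount the volume here.
            return {"Mountpoint": f"/mnt/{self.host}/{name}", "Err": ""}
        return {"Err": f"unsupported endpoint: {endpoint}"}


backend = SharedBackend()
host_a = VolumePlugin("host-a", backend)
host_b = VolumePlugin("host-b", backend)

# The container first runs on host-a and writes some state.
host_a.handle("/VolumeDriver.Create", {"Name": "appdata", "Opts": {}})
backend.volumes["appdata"]["state.txt"] = "written on host-a"

# The container is re-launched on host-b with the same volume name.
reply = host_b.handle("/VolumeDriver.Mount", {"Name": "appdata", "ID": "c2"})
print(reply["Mountpoint"], backend.volumes["appdata"])
```

The point of the sketch is that nothing host-specific is stored in the container specification: the volume name alone is enough for whichever host runs the container to find the data.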

If you have more questions on containers, Docker and storage, check out our first Q&A blog: Containers: No Shortage of Interest or Questions.

I also encourage you to join our Containers opt-in email list. It will be a good way to keep up with all that SNIA Cloud is doing on this important technology.

Ethernet Networked Storage – FAQ

Dec 8, 2016

At our SNIA Ethernet Storage Forum (ESF) webcast “Re-Introduction to Ethernet Networked Storage,” we provided a solid foundation on Ethernet networked storage, the move to higher speeds, challenges, use cases and benefits. Here are answers to the questions we received during the live event.

Q. Within the iWARP protocol there is a layer called MPA (Marker PDU Aligned Framing for TCP) inserted for storage applications. What is the point of this protocol?

A. MPA is an adaptation layer between the iWARP Direct Data Placement Protocol and TCP/IP. It provides framing and CRC protection for Protocol Data Units. MPA enables packing of multiple small RDMA messages into a single Ethernet frame. It also enables an iWARP NIC to place frames received out-of-order (instead of dropping them), which can be beneficial on best-effort networks. More detail can be found in IETF RFC 5044 and IETF RFC 5041.

Q. What is the API for RDMA network IPC?

A. The general API for RDMA is called verbs. The OpenFabrics Verbs Working Group oversees the development of verbs definition and functionality in the OpenFabrics Software (OFS) code. Training content is available from the OpenFabrics Alliance. General information about RDMA over Converged Ethernet (RoCE) is available at the InfiniBand Trade Association website. Information about Internet Wide Area RDMA Protocol (iWARP) can be found at IETF: RFC 5040, RFC 5041, RFC 5042, RFC 5043, RFC 5044.

Q. RDMA requires TCP/IP (iWARP), InfiniBand, or RoCE to operate on with respect to NVMe over Fabrics. Therefore, what are the advantages or disadvantages of iWARP vs. RoCE?

A. Both RoCE and iWARP support RDMA over Ethernet. iWARP uses TCP/IP while RoCE uses UDP/IP. Debating which one is better is beyond the scope of this webcast, but you can learn more by watching the SNIA ESF webcast, “How Ethernet RDMA Protocols iWARP and RoCE Support NVMe over Fabrics.”

Q. 100Gb Ethernet Optical Data Center solution?

A. 100Gb Ethernet optical interconnect products were first available around 2011 or 2012 in a 10x10Gb/s design (100GBASE-CR10 for copper, 100GBASE-SR10 for optical) which required thick cables and a CXP or CFP MSA housing. These were generally used only for switch-to-switch links. Starting in late 2015, the more compact 4x25Gb/s design (using the QSFP28 form factor) became available in copper (DAC), optical cabling (AOC), and transceivers (100GBASE-SR4, 100GBASE-LR4, 100GBASE-PSM4, etc.). The optical transceivers allow 100GbE connectivity up to 100m, or 2km and 10km distances, depending on the type of transceiver and fiber used.

Q. Where is FCoE being used today?

A. FCoE is primarily used in blade server deployments where there could be contention for PCI slots and only one built-in NIC. These NICs typically support FCoE at 10Gb/s speeds, passing both FC and Ethernet traffic via a connection to a Top-of-Rack FCoE switch, which passes traffic to the respective fabrics (FC and Ethernet). However, it has not gained much acceptance outside of the blade server use case.

Q. Why did iSCSI start out mostly in lower-cost SAN markets?

A. When it first debuted, iSCSI packets were processed by software initiators which consumed CPU cycles and showed higher latency than Fibre Channel. Achieving high performance with iSCSI required expensive NICs with iSCSI hardware acceleration, and iSCSI networks were typically limited to 100Mb/s or 1Gb/s while Fibre Channel was running at 4Gb/s. Fibre Channel is also a lossless protocol, while TCP/IP is lossy, which caused concerns for storage administrators. Now, however, iSCSI can run on 25, 40, 50 or 100Gb/s Ethernet with various types of TCP/IP acceleration or RDMA offloads available on the NICs.

Q. What are some of the differences between iSCSI and FCoE?

A. iSCSI runs SCSI protocol commands over TCP/IP (except iSER which is iSCSI over RDMA) while FCoE runs Fibre Channel protocol over Ethernet. iSCSI can run over layer 2 and 3 networks while FCoE is Layer 2 only. FCoE requires a lossless network, typically implemented using DCB (Data Center Bridging) Ethernet and specialized switches.

Q. You pointed out that at least twice people incorrectly predicted the end of Fibre Channel, but it didn’t happen. What makes you say Fibre Channel is actually going to decline this time?

A. Several things are different this time. First, Ethernet is now much faster than Fibre Channel instead of the other way around. Second, Ethernet networks now support lossless and RDMA options that were not previously available. Third, several new solutions–like big data, hyper-converged infrastructure, object storage, most scale-out storage, and most clustered file systems–do not support Fibre Channel. Fourth, none of the hyper-scale cloud implementations use Fibre Channel and most private and public cloud architects do not want a separate Fibre Channel network–they want one converged network, which is usually Ethernet.

Q. Which storage protocols support RDMA over Ethernet?

A. The Ethernet RDMA options for storage protocols are iSER (iSCSI Extensions for RDMA), SMB Direct, NVMe over Fabrics, and NFS over RDMA. There are also storage solutions that use proprietary protocols supporting RDMA over Ethernet.

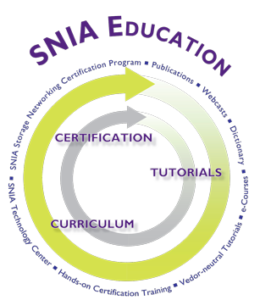

Storage Training Your Way - Education for 21st Century Professional Development

Dec 6, 2016

By Paul Talbut, SNIA Global Education and Regional Affiliate Program Director

It is widely accepted that those who are considered knowledge workers (those who use a screen or the internet as a part of their daily work routine) face constant disruption and distractions. The constant flow of emails, online news feeds, social media, and personal interests tend to draw people away from the need to concentrate on the job at hand.

Recent studies suggest that workers are interrupted on average every five minutes, ironically often by work applications or collaboration tools. If this is happening to the work function, then it is not surprising that the opportunity to undergo training or personal development, where focus and concentration without distraction is key to learning, is severely restricted. The same studies suggest that around 1% of the working week is all that workers have to devote to focus on training and development. This has led to a dramatic shift in the way people consume their training content. It is no longer practical or cost effective to lock people away in a training room for five days. Any such educational value is now much more likely to be absorbed on the train or the bus on the way to work, and so the nature of the content delivery has to change.

Educational content needs to be delivered in modules and in a variety of formats to match the plethora of personal devices and platforms available today. Even without the formality of instructional design and a comprehensive curriculum, content such as podcasts, webcasts, and training materials need to be accessible on the move and in such bite-size chunks that we can give people the information they need and make it available at the times that suit them best. SNIA is changing the way study guides and materials are made available to our constituents by collaborating with our members and training partners to focus on a wide variety of educational channels. Materials and study guides are now available via e-book, PDF, YouTube, BrightTALK webcasts, podcasts, and online instructor led courses.

The challenge now is to make the content compelling and attractive enough to compete with all the other digital content available for consumption, and provide the opportunity to learn something new about storage rather than watching ice-buckets, mannequins or the latest cute cat.

To date, SNIA has certified over 12,000 storage professionals worldwide, and our vendor-neutral certification program continues to be the industry leader in the independent assessment of storage technology skills.

No Shortage of Container Storage Questions

Nov 29, 2016

We covered a lot of ground in our recent SNIA Ethernet Storage Forum webcast, “Current State of Storage in the Container World.” We had a technical discussion on why containers are so compelling, how Docker containers work, persistent shared storage and future considerations for container storage. We received some great questions during the live event, and as promised, here are answers to them all.

Q. Docker cannot be installed on bare metal and requires a base OS to operate upon, right?

A. That is correct.

Q. Does the application code need to be changed so that it can “fit and operate” in a container?

A. No, the application code does not need to change. The challenge most people face when migrating an application to a container is how to maintain the application’s state. One of the motivations for this webcast was to explain how to allow applications within containers to persist data. Hopefully the Docker Volume construct will meet your needs.

Q. Seems like containers share one OS/kernel… That suggests that there is just one OS in the “containerized” server… And yet there is still mention of hypervisor (or at least Hyper-V)… Can you clarify? If the containers share an OS, is a hypervisor needed?

A. You are correct, containers are designed to share a single kernel; therefore a hypervisor is not required to run containers. Having said that, VMware and Microsoft both offer options that run a single container in its own virtual machine (running a minimal operating system).

Q. Can the Docker Hub be compared to something like the GitHub?

A. Yes, that is a great analogy. Docker Hub (hub.docker.com) is to container images as GitHub (github.com) is to source code.

Q. What are the differences between the base and the host image?

A. If you’re referring to the webcast slides; the box labeled “Base Image” is the first layer in an image. The box labeled “Host OS” is not a layer, but represents the hosting operating system (kernel) that is shared by the containers.

Q. So there is a separate root per container?

A. In most cases the image will provide a root, therefore each container will have a separate root. This is made possible by a kernel feature called namespaces. Alternatively, Docker does allow you to share a directory between the host operating system and any number of containers though.

Q. If Deduplication is enabled on the storage LUNs, won’t that affect the performance of the containers?

A. Well implemented data reduction features (compression and deduplication) should have little to no effect on performance and should provide significant benefit by reducing the space required to store containers.

Q. Can you please quickly review the concept of copy-on-write with one or two sentences to boil it down?

A. How the copy-on-write works depends on whether the driver is file or block based. For the sake of simplicity, let’s assume a file-based implementation. Since the image layers are read-only, we need an area to store the changes that the container has made. This area is the copy-on-write layer. When a process reads a file that has not been modified, the file is read from one of the read only layers. When that file is modified and needs to be written back to disk, the new file is written to the copy-on-write layer as is the metadata that describes the file. The next time this file is read, it is read from copy-on-write layer. The graph driver is responsible for this functionality and varies by implementation.
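The file-based behavior described above can be modeled in a few lines. This is a simplified sketch, not how any particular graph driver is implemented: layers are plain dictionaries mapping paths to contents, and the class and method names are made up for illustration.

```python
class LayeredFS:
    """Toy model of read-only image layers plus one copy-on-write layer."""

    def __init__(self, image_layers):
        # image_layers: list of {path: contents} dicts, base layer first.
        # They are never modified after construction.
        self.image_layers = image_layers
        self.cow_layer = {}  # holds every file the container has written

    def read(self, path):
        # A modified file is always served from the copy-on-write layer.
        if path in self.cow_layer:
            return self.cow_layer[path]
        # Otherwise search the read-only layers, topmost first.
        for layer in reversed(self.image_layers):
            if path in layer:
                return layer[path]
        raise FileNotFoundError(path)

    def write(self, path, contents):
        # Writes never touch the image layers.
        self.cow_layer[path] = contents


base = {"/etc/motd": "hello from the base image"}
fs = LayeredFS([base])

print(fs.read("/etc/motd"))   # served from the read-only base layer
fs.write("/etc/motd", "changed by the container")
print(fs.read("/etc/motd"))   # now served from the copy-on-write layer
print(base["/etc/motd"])      # the image layer itself is unchanged
```

Because the image layers are never written, any number of containers can share them, and each container only pays for the files it changes.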

Q. Can network locations be used for /data? If yes, how does the Docker Engine manage network authentication for the driver?

A. Yes, network locations can be used. The best practice is to use the Local Volume Driver, where you can pass in the required authentication via the options (see slide 15). Alternatively, the network location can be mounted on the host operating system and exposed to containers (see slides 21 & 22).

Q. Is this where VAAI like primitives would get implemented?

A. VAAI defines several in-band primitives. The Docker Volume plug-in framework is completely out-of-band. There can be some overlap in features though. For example, the XCOPY primitive can be used to offload ‘copy jobs’ to an array. If the vendor chooses to do so, a ‘copy job’ can be offloaded through the Docker Volume plug-in as well. For example, a plug-in might implement a “clone” option that provides this service.

Q. Could you share some details about Kubernetes storage ? Persistent volumes and the difference from Docker volumes? Also, what is your perspective of Flocker?

A. Kubernetes has the concept of persistent storage. This abstraction is also called a volume. In addition, Kubernetes provides a plug-in option as well. The Kubernetes implementation predates the Docker Volume and is currently not compatible.

Q. Comment on mainframe: IBM runs Linux on zSeries, therefore can run Linux Docker containers.

A. Thanks, that’s good to know.

Q. How many operating system changes have there been on the x86 platform? How many on the mainframe platform? Can x86 architecture run the same code/OS from 40 years ago? Docker on mainframe?

A. The mainframe architecture has been very solid and consistent for many years.

Q. What is a big challenge for storage in a container environment?

A. I don’t think storage has a challenge in the container environment. I think, with a properly implemented Docker Volume Plug-in, storage provides a solution to the persistent shared storage need in a container environment.

Q. Do you ever look into RexRay or VMDK storage drivers?

A. Yes, these are both examples of Docker Volume plug-in implementations.

Learn How to Develop Interoperable Cloud Encryption and Access Control

Nov 21, 2016

SNIA Cloud is hosting a live webcast on December 20th, “Developing Interoperable Cloud Encryption and Access Control,” to discuss and demonstrate encrypted objects and delegated access control. For the data protection needs of sharing health and other data across different cloud services, this webcast will explore the capabilities of the Cloud Data Management Interface (CDMI) in addressing these requirements and show implementations of CDMI extensions for a health care example.

See it in action! This webcast will include a demonstration by Peter van Liesdonk of Philips who will share the results of testing at the SDC 2016 Cloud Plugfest for Encrypted Objects and Delegated Access Control extensions to CDMI 1.1.1.

You will see and learn:

- New CDMI features (Encrypted Objects and Delegated Access Control)

- Implementation experiences with new features

- A live demo of a healthcare-based example

Register today. My colleagues, Peter van Liesdonk, David Slik and I will be on-hand to answer any questions you may have. We hope to see you there.

Common Questions on Clustered File Systems

Nov 18, 2016
