Understanding How Data Privacy, Data Governance, and Data Security Differ

Mar 2, 2022

Ever wondered what the difference is between data privacy, data governance, and data security? These terms are frequently (and mistakenly) used interchangeably. They are indeed related, particularly when it comes to keeping data in the cloud protected, private, and secure, but their definitions and the mechanics of executing on each are quite different.

Join us on March 30, 2022 for another SNIA Cloud Storage Technologies Initiative (CSTI) “15 Minutes in the Cloud” session for an overview of what each of these terms means, how and where they intersect, and why neglecting any one of them puts the overall security of your data at risk.

Presenting will be Thomas Rivera, CISSP, CIPP/US, CDPSE, Strategic Success Manager at VMware Carbon Black, together with Eric Hibbard, CISSP-ISSAP, ISSMP, ISSEP, CIPP/US, CIPT, CISA, CDPSE, CCSK, Director, Product Planning – Storage Networking & Security, Samsung Semiconductor. As you can see, our security experts have more credentials than the alphabet!

Register today for “15 Minutes in the Cloud: Data Privacy vs. Governance vs. Security.” We look forward to seeing you on March 30th.

Scaling Storage to New Heights

Feb 28, 2022

Earlier this month, the SNIA Cloud Storage Technologies Initiative (CSTI) presented a live webcast called “High Performance Storage at Exascale,” where our HPC experts, Glyn Bowden, Torben Kling Petersen, and Michael Hennecke, talked about processing and storing data in shockingly huge numbers. The session raised some interesting points on how scale is quickly being redefined, and how what was prohibitive in cost and compute for most a few years ago may be within reach for all sooner than expected.

- Is HPC a rich man’s game? The scale appears to have increased dramatically over the last few years. Is the cost increasing to the point where this is only for wealthy organizations, or has the cost decreased to the point where small to medium-sized enterprises might be able to indulge in HPC activities?

- [Torben] I would say the answer is both. To build these really super big systems you need hundreds of millions of dollars, because the sheer cost of infrastructure goes beyond anything that we’ve seen in the past. On the other hand, you also see HPC systems in the most unlikely places, like a web retailer that mainly sells shoes. They had a Lustre system driving their back end and HPC out-competed a standard NFS solution. So, we see this going in different directions. Price has definitely gone down significantly; essentially the cost of a large storage system now is the same as it was 10 years ago. It’s just that now it’s 100 times faster and 50 times larger. That said, it’s not cheap to do any of these things because of the amount of hardware you need.

- [Michael] We are seeing the same thing. We like to say that these types of HPC systems are more like a time machine that shows you what will show up in the general enterprise world a few years later. The cloud space is a prime example. All of the large HPC parallel file systems are now being adopted in the cloud, so we get a combination of the deployment mechanisms coming from the cloud world with the scale and robustness of the storage software infrastructure. Those are married together in very efficient ways. So, while not everybody will build a 200 petabyte or flash system for those types of use cases, the same technologies and the same software layers can be used at all scales. I really believe that this is like the research lab for what will become mainstream pretty quickly. On the cost side, another aspect that we haven’t covered is this old notion that tape is dead, disk is dead, and the next technology is always replacing the old ones. That hasn’t happened, but certainly as new technologies arrive and cost structures change, you get shifts in dollars per terabyte, or dollars per terabyte per second, which is more the HPC metric. So, how do we bring in QLC drives to lower the price of flash and then build larger systems out of that? Those are also technology explorations done at this level that then benefit everybody.

- [Glyn] Being the consultant of the group, I guess I should say it depends. It depends on how you want to define HPC. I’ve got a device on my desk in front of me at the moment that I can fit in the palm of my hand. It has more than a thousand GPU cores in it and costs under $100. I can build a cluster of 10 of those for under $1,000. If you look back five years, that would absolutely be classified as HPC based on the number of cores and the amount of processing it can do. So, these things are shrinking and becoming far more affordable and far more of a commodity at the low end, meaning that we can take what was traditionally an HPC cluster and run it on things like Raspberry Pis at the edge somewhere. You can absolutely get the architecture, and what was previously seen as that kind of parallel batch processing against many cores, for next to nothing. As Michael said, it’s really the time machine, and this is where we’re catching up with what was HPC. The big stuff is always going to cost the big bucks, but I think it’s affordable to get something that you can play on and that works as an HPC system.
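To make the cost figures in the answers above concrete, here is a small arithmetic sketch. It takes Torben's rough numbers (same system cost as 10 years ago, 50 times larger, 100 times faster) and expresses them in the two metrics Michael mentions. The function name and parameters are illustrative assumptions, and the inputs are the speakers' round estimates rather than measured data.

```python
def relative_unit_costs(capacity_gain: float, speed_gain: float,
                        cost_ratio: float = 1.0) -> dict:
    """Return today's unit costs relative to the older system (1.0 = unchanged).

    capacity_gain: how many times larger the new system is
    speed_gain:    how many times faster the new system is
    cost_ratio:    new system cost divided by old system cost
    """
    return {
        # Dollars per terabyte scale with cost divided by capacity.
        "cost_per_terabyte": cost_ratio / capacity_gain,
        # Dollars per terabyte-per-second scale with cost divided by throughput.
        "cost_per_terabyte_per_second": cost_ratio / speed_gain,
    }


# Same cost, 50 times larger, 100 times faster (figures quoted in the answer).
result = relative_unit_costs(capacity_gain=50, speed_gain=100)
print(result)
```

Under those assumptions, a terabyte costs about one fiftieth of what it did and a terabyte per second of throughput about one hundredth, even though the total bill for a large system has not moved.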

We also had several questions on persistent memory. SNIA covers this topic extensively. You can access a wealth of information here. I also encourage you to register for SNIA’s 2022 Persistent Memory + Computational Storage Summit which will be held virtually May 25-26. There was also interest in CXL (Compute Express Link, a high speed cache-coherent interconnect for processors, memory and accelerators). You can find more information on that in the SNIA Educational Library.

Computational Storage – Driving Success, Driving Standards Q&A

Feb 16, 2022

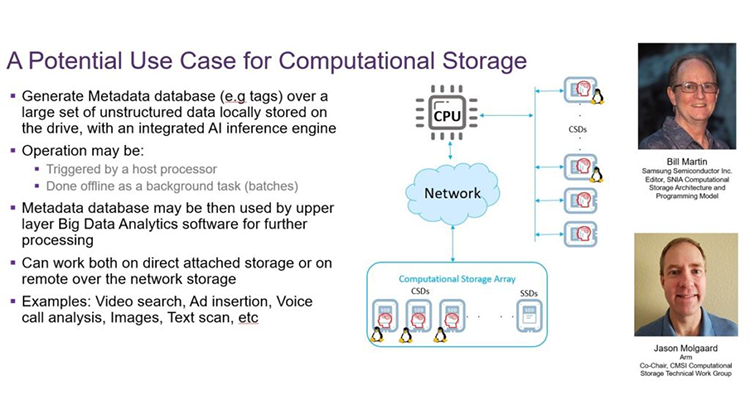

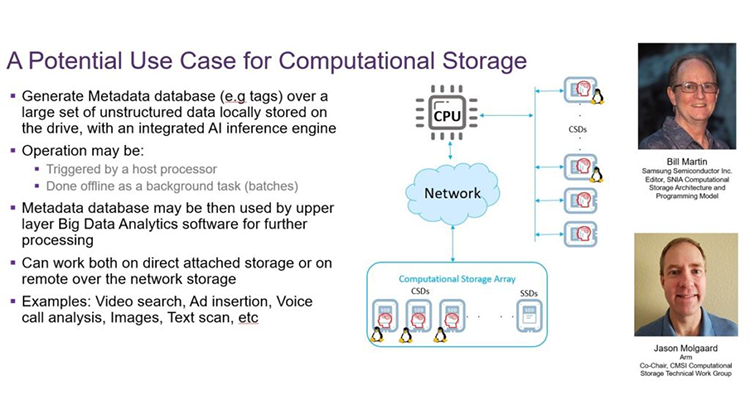

Our recent SNIA Compute, Memory, and Storage Initiative (CMSI) webcast, Computational Storage – Driving Success, Driving Standards, explained the key elements of the SNIA Computational Storage Architecture and Programming Model and the SNIA Computational Storage API. If you missed the live event, you can watch it on demand and view the presentation slides. Our audience asked a number of questions, and Bill Martin, Editor of the Model, and Jason Molgaard, Co-Chair of the SNIA Computational Storage Technical Work Group, teamed up to answer them.

What's being done in SNIA to implement data protection (e.g. RAID) and CSDs? Can data be written/striped to CSDs in such a way that it can be computed on within the drive?

Bill Martin: The challenges of computation on a RAID system are outside the scope of the Computational Storage Architecture and Programming Model. The Model does not address data protection in that it does not specify how data is written nor how computation is done on the data. Section 3 of the Model discusses the Computational Storage Array (CSA), a storage array that is able to execute one or more Computational Storage Functions (CSFs). As a storage array, a CSA contains control software, which provides virtualization to storage services, storage devices, and Computational Storage Resources for the purpose of aggregating, hiding complexity, or adding new capabilities to lower level storage resources. The Computational Storage Resources in the CSA may be centrally located or distributed across CSDs/CSPs within the array.

When will Version 1.0 of the Computational Storage Architecture and Programming Model be available and when is operating system support expected?

Bill Martin: We expect Version 1.0 of the model to be available Q2 2022. The Model is agnostic with regard to operating systems, but we anticipate a publicly available API library for Computational Storage over NVMe.

Will Computational Storage library support CXL accelerators as well? How is the collaboration between these two technology consortiums?

Jason Molgaard: The Computational Storage Architecture and Programming Model is agnostic to the device interface protocol. Computational Storage can work with CXL. SNIA currently has an alliance agreement in place with the CXL Consortium and will interface with that group to help enable the CXL interface with Computational Storage. We anticipate there will be technical work to develop a computational storage library utilizing the CS API that will support CXL in the future.

System memory is required for PCIe/NVMe SSD. How does computational storage bypass system memory?

Bill Martin: The computational storage architecture relies on computation using memory that is local to the Computational Storage Device (CSx). Section B.2.4 of the Model describes the topic of Function Data Memory (FDM) on the CSx and the movement of data from media to FDM and back. Note that a device does not need to access system memory for computation – only to read and write data. Figure B.2.8 from the Model illustrates CSx usage.

Is this CS API Library vendor specific, or is this a generic library which could also be provided for example by an operating system vendor?

Bill Martin: The Computational Storage API is not a library; it is a generic interface definition. It describes the software application interface definitions for a Computational Storage device (CSx). There will be a generic library for a given protocol layer, but there may also be vendor-specific additions to that generic library for vendor-specific CSx enhancements beyond the standard protocol definition.

Are there additional use cases out there? Where could I see them and get more information?

Jason Molgaard: Section B.2.5 of the Computational Storage Architecture and Programming Model provides an example of application deployment. The API specification will have a library that could be used and/or modified for a specific device. If the CSx does not support everything in NVMe, an individual could write a vendor specific library that supports some host activity.

There are a lot of acronyms and terms used in the discussion. Is there a place where they are defined?

Jason Molgaard: Besides the Model and the API, which provide the definitive definitions of the terms and acronyms, there are some great resources. Recent presentations at the SNIA Storage Developer Conference, Computational Storage Moving Forward with an Architecture and API and Computational Storage APIs, provide a broad view of how the specifications affect the growing industry computational storage efforts. Additional videos and presentations are available in the SNIA Educational Library; search for “Computational Storage”.

Using SNIA Swordfish™ to Manage Storage on Your Network

Feb 16, 2022

Consider how we charge our phones: we can plug them into a computer’s USB port, into a wall outlet using a power adapter, or into an external/portable power bank. We can even place them on top of a Qi-enabled pad for wireless charging. None of these options are complicated, but we routinely charge our phones throughout the day and, thanks to USB and standardized charging interfaces, our decision boils down to what is available and convenient.

Now consider how a storage administrator chooses to add storage capacity to a datacenter. There are so many ways to do it: Add one or more physical drives to a single server; add additional storage nodes to a software-defined storage cluster; add additional storage to a dedicated storage network device that provides storage to be used by other (data) servers.

These options all require consideration as to the data protection methods utilized such as RAID or Erasure Coding, and the performance expectations these entail. Complicating matters further are the many different devices and standards to choose from, including traditional spinning HDDs, SSDs, Flash memory, optical drives, and Persistent Memory.

Each storage instance can also be deployed as file, block, or object storage which can affect performance. Selection of the communication protocol such as iSCSI and FC/FCoE can limit scalability options. And finally, with some vendors adding the requirement of using their management paradigm to control these assets, it’s easy to see how these choices can be daunting.

But… it doesn’t need to be so complicated!

The Storage Networking Industry Association (SNIA) has a mission to lead the storage industry in developing and promoting vendor-neutral architectures, standards and education services that facilitate the efficient management, movement and security of information. To that end, the organization created SNIA Swordfish™, a specification that provides a unified approach for the management of storage and servers in hyperscale and cloud infrastructure environments.

Swordfish is an API specification that defines a simplified model that is client-oriented, designed to integrate with the technologies used in cloud data center environments and can be used to accomplish a broad range of simple-to-advanced storage management tasks. These tasks focus on what IT administrators need to do with storage equipment and storage services in a data center. As a result, the API provides functionality that simplifies the way storage can be allocated, monitored, and managed, making it easier for IT administrators to integrate scalable solutions into their data centers.

SNIA Swordfish can provide a stand-alone solution, or act as an extension to the DMTF Redfish® specification, using the same easy-to-use RESTful interface and JavaScript Object Notation (JSON) to seamlessly manage storage equipment and storage services.

REST stands for REpresentational State Transfer. We won’t discuss REST architecture in this article, but we use it to show how complex tasks are simplified. A REST API allows an administrator to retrieve information from, or perform a function on, a computer system. Although the syntax can be challenging, most of the requests and responses are based on JSON, which is written in readable plain text, so you can read and understand the messages to determine the state of your networked devices. This article assumes we are not programmers creating object code but administrators who need tools to monitor their network.

To examine a network in a REST/JSON environment, you simply start with a browser. The easiest starting point is to show via an example or a “mockup.” Swordfish is a hypermedia API, which allows access to resources via URLs returned from other API calls. The scheme for URLs consists of a node (for example, www.snia.org, or an IP address such as 127.0.0.1) and a resource identifier (redfish/v1/storage). Hence the starting point, referred to as the ‘service root’, will look like: http://127.0.0.1/redfish/v1/storage.
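As a minimal sketch of the URL structure just described, the snippet below joins a node and a resource identifier into a full URL using Python's standard library. The helper name `resource_url` is an assumption made for illustration and is not part of Swordfish or Redfish; the node and resource values are the ones used in the text.

```python
from urllib.parse import urlunsplit


def resource_url(node: str, resource: str, scheme: str = "http") -> str:
    """Join a node (host name or IP address) and a resource identifier."""
    # Normalize the identifier so it has exactly one leading slash
    # and no trailing slash.
    path = "/" + resource.strip("/")
    return urlunsplit((scheme, node, path, "", ""))


print(resource_url("127.0.0.1", "redfish/v1/storage"))
print(resource_url("www.snia.org", "redfish/v1/storage"))
```

Either printed URL can be pasted into a browser's address bar, which is all a first look at a running service or mockup requires.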

Redfish objects are mainly Systems (typically servers), Managers (typically a BMC or enclosure manager), and Chassis (physical components and infrastructure). Swordfish adds another: Storage. These are all collections, which all have properties, and all properties have a name and ID, Actions and Oem. Actions inform the user which actions can be performed, and Oem contains vendor-specific extensions.
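The hypermedia idea can be sketched with a tiny mockup: each response carries `@odata.id` links that tell the client where to look next, and a collection lists its entries under `Members`. The dictionary below is a hypothetical stand-in for what a service might return, not output captured from a real system, and the helper names are assumptions; only the collection names and the `Sys-1` identifier come from the article.

```python
# Hypothetical mockup keyed by URL path; a real client would fetch each
# path over HTTP instead of looking it up in a dictionary.
MOCKUP = {
    "/redfish/v1": {
        "Name": "Mockup service root",
        "Systems": {"@odata.id": "/redfish/v1/Systems"},
        "Managers": {"@odata.id": "/redfish/v1/Managers"},
        "Chassis": {"@odata.id": "/redfish/v1/Chassis"},
        "Storage": {"@odata.id": "/redfish/v1/Storage"},
    },
    "/redfish/v1/Systems": {
        "Name": "Systems collection",
        "Members": [{"@odata.id": "/redfish/v1/Systems/Sys-1"}],
    },
}


def collection_links(resource: dict) -> dict:
    """Map each linked property name to the URL path it points at."""
    return {
        name: value["@odata.id"]
        for name, value in resource.items()
        if isinstance(value, dict) and "@odata.id" in value
    }


def member_paths(collection: dict) -> list:
    """List the URL paths of the members of a collection."""
    return [member["@odata.id"] for member in collection.get("Members", [])]


links = collection_links(MOCKUP["/redfish/v1"])
print(links)
print(member_paths(MOCKUP[links["Systems"]]))
```

The client never hard-codes the path to `Sys-1`; it discovers it by following the link from the root to the Systems collection and then reading that collection's members.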

Let’s look at two brief examples of how Swordfish is used.

Here is the response to a query of objects and properties in a standalone Swordfish installation:

Ignoring the extra characters that are part of REST syntax, the information is easier to read and understand when compared to object code. We can also see the designated Servers, Managers and Chassis with the network paths for each.

Of course, a network diagram is more complicated than a single storage installation, so it is represented by a tree diagram:

Within the context of the network tree, using a simple ‘Get’ command, we can determine the capacity of our target storage device:

GET /redfish/v1/Systems/Sys-1/Storage/NVMeSSD-EG/Volumes/Namespace1

The above command returns all the properties for the selected volume, including the capacity:

    {
        "@Redfish.Copyright": "Copyright 2014-2020 SNIA. All rights reserved.",
        "@odata.id": "/redfish/v1/Systems/Sys-1/Storage/NVMeSSD-EG/Volumes/Namespace1",
        "@odata.type": "#Volume.v1_5_0.Volume",
        "Id": "1",
        "Name": "Namespace 1",
        "LogicalUnitNumber": 1,
        "Capacity": {
            "Data": {
                "ConsumedBytes": 0,
                "AllocatedBytes": 10737418240,
                "ProvisionedBytes": 10737418240
            }
        },
        …
    }

The easy-to-execute query can be done directly from a web browser and returns data that is simple, readable, and informational about our target.

The author is not a programmer; many reading this are not either. But as you can see from the example above, being a programmer is not necessary to successfully use a Swordfish storage management interface.

There is, of course, much more that can be done with Swordfish and REST; the intent of this short article was to show how adding storage and monitoring it can be easily done in a network running Swordfish without being a programmer. Many of the queries (like the one shown above) are already available so you don’t have to create them from scratch.
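For readers who do want to take one step past the browser, here is a hedged sketch of how a short script could read the capacity fields from the volume response shown earlier. The JSON text is a trimmed copy of that response (the elided properties are left out), and the function name, the output keys, and the choice to report values in GiB are illustrative assumptions.

```python
import json

# Trimmed copy of the volume response from the article.
RESPONSE = """
{
  "@odata.id": "/redfish/v1/Systems/Sys-1/Storage/NVMeSSD-EG/Volumes/Namespace1",
  "Id": "1",
  "Name": "Namespace 1",
  "Capacity": {
    "Data": {
      "ConsumedBytes": 0,
      "AllocatedBytes": 10737418240,
      "ProvisionedBytes": 10737418240
    }
  }
}
"""

GIB = 1024 ** 3  # bytes in one gibibyte


def capacity_summary(volume: dict) -> dict:
    """Pull the byte counts out of a volume resource and convert them to GiB."""
    data = volume["Capacity"]["Data"]
    return {
        "name": volume["Name"],
        "provisioned_gib": data["ProvisionedBytes"] / GIB,
        "consumed_gib": data["ConsumedBytes"] / GIB,
        # Allocated space that has not been consumed yet.
        "unconsumed_gib": (data["AllocatedBytes"] - data["ConsumedBytes"]) / GIB,
    }


summary = capacity_summary(json.loads(RESPONSE))
print(summary)
```

For this response the script reports a 10 GiB volume named "Namespace 1" with nothing consumed so far, which is the same information a reader gets by scanning the JSON by eye.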
